Paper:

A Feasibility Study on Hand Gesture Intention Interpretation Based on Gesture Detection and Speech Recognition

Dian Christy Silpani, Keishi Suematsu, and Kaori Yoshida

Graduate School of Life Science and System Engineering, Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu-ku, Kitakyushu, Fukuoka 808-0196, Japan

In this paper, we report an analysis of video recordings of natural, unscripted human conversations, focusing on the characteristics of the hand gestures that occur in them. The recorded conversations between two subjects and the experiment assistant (the first author) were analyzed for findings on the recognition of hand gestures in natural conversation that might be useful for future human-robot interaction (HRI). Hand gestures that appeared naturally during the conversations were manually selected, and their elapsed times and numbers of appearances were analyzed. In addition, the unscripted conversations were analyzed with a voice recognition system, and the meanings of the hand gestures that appear in the recorded video were interpreted. Some gestures were made consciously and others unconsciously. Some gestures appeared multiple times with similar meanings and the same intention, especially pointing gestures used to emphasize a sentence or word. We also observed cases in which (i) the two subjects made different hand gestures to convey the same meaning, (ii) both subjects made the same hand gesture but intended different meanings, and (iii) the same subject made a specific gesture with different intended meanings. Based on these results, we conclude that a gesture should be combined with the utterance made at the same time in order to interpret its intended meaning correctly.
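As an illustration of the analysis described above, the following minimal sketch counts appearances and elapsed time per gesture and pairs each gesture with the utterances that overlap it in time. It is not the authors' implementation: the `Gesture` and `Utterance` records, the labels, and all timestamps are hypothetical, and it assumes that gesture annotations and a timestamped transcript are already available.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Gesture:
    label: str    # hypothetical annotation label, e.g. "pointing"
    start: float  # seconds from the start of the recording
    end: float


@dataclass
class Utterance:
    text: str
    start: float
    end: float


def summarize(gestures):
    """Return {label: (number of appearances, total elapsed seconds)}."""
    counts = defaultdict(int)
    totals = defaultdict(float)
    for g in gestures:
        counts[g.label] += 1
        totals[g.label] += g.end - g.start
    return {label: (counts[label], totals[label]) for label in counts}


def overlapping_utterances(gesture, utterances):
    """Return the utterances whose time span overlaps the gesture's span."""
    return [u for u in utterances
            if u.start < gesture.end and gesture.start < u.end]


# Made-up example data, not taken from the experiments.
gestures = [
    Gesture("pointing", 1.0, 2.5),
    Gesture("open palm", 4.0, 5.0),
    Gesture("pointing", 6.0, 7.0),
]
utterances = [
    Utterance("look at this one", 0.5, 2.0),
    Utterance("I am not sure", 3.5, 5.5),
    Utterance("that is the important part", 6.2, 8.0),
]

for g in gestures:
    spoken = [u.text for u in overlapping_utterances(g, utterances)]
    print(g.label, spoken)
print(summarize(gestures))
```

Pairing by time overlap is only one possible choice; a fixed window around the gesture onset would be an equally plausible assumption.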

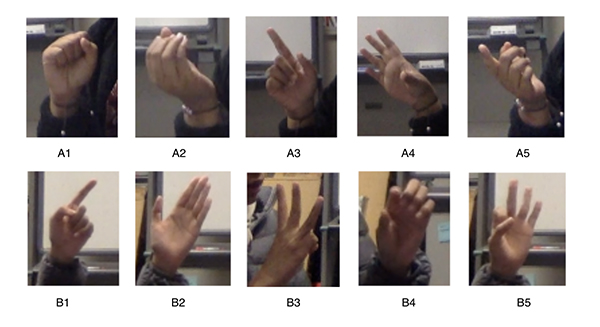

Hand gestures that occurred during natural conversation

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
