Paper:

Cross-View Gait Recognition Based on Dual-Stream Network

Xiaoyan Zhao*,**, Wenjing Zhang*, Tianyao Zhang*,***, and Zhaohui Zhang*,***

*School of Automation and Electrical Engineering, University of Science and Technology Beijing

30# Xueyuan Road, Haidian District, Beijing 100083, China

**Shunde Graduate School, University of Science and Technology Beijing

Fo Shan 528399, China

***Beijing Engineering Research Center of Industrial Spectrum Imaging, University of Science and Technology Beijing

30# Xueyuan Road, Haidian District, Beijing 100083, China

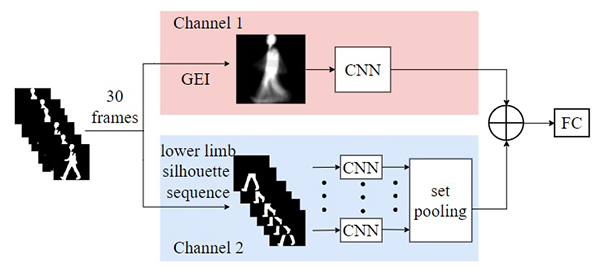

Gait recognition is a biometric identification method that can be realized under long-distance and no-contact conditions. Its applications in criminal investigations and security inspections are thus broad. Most existing gait recognition methods adopt the gait energy image (GEI) for feature extraction. However, the GEI ignores the dynamic information of gait, which causes the recognition performance to be greatly affected by changes in viewing angle and by the subject’s belongings and clothes. To solve these problems, this paper proposes a cross-view gait recognition method that uses a dual-stream network based on the fusion of dynamic and static features (FDSN). First, the static features are extracted from the GEI and the dynamic features are extracted from the image sequence of the subject’s lower limbs. Then, the two features are fused, and finally, a nearest neighbor classifier is used for classification. Comparative experiments on the CASIA-B dataset created by the Institute of Automation, Chinese Academy of Sciences, showed that the FDSN achieves a higher recognition rate than a convolutional neural network (CNN) and GaitSet under changes in viewing angle or clothing. To meet the requirements of this study, a gait image dataset was also collected in a campus setting. The experimental results on this dataset show the effectiveness of the FDSN in terms of eliminating the effects of disruptive changes.

The figure shows the overall structure of the FDSN proposed in this paper, a dual-stream network based on the fusion of dynamic and static features.
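The pipeline summarized in the abstract (static features from the GEI, dynamic features from the lower-limb sequence, fusion, nearest neighbor classification) can be illustrated with a minimal sketch. This is not the FDSN itself: the two network streams are replaced by hand-written stand-ins, and several choices are assumptions made only for illustration. The GEI is computed as the pixel-wise mean of aligned binary silhouettes, the dynamic stream is approximated by mean frame-to-frame change in the lower half of each frame, fusion is plain concatenation, and the classifier uses Euclidean distance. All function names are hypothetical.

```python
import math

def gait_energy_image(silhouettes):
    """Pixel-wise mean of aligned, equally sized binary silhouettes."""
    n = len(silhouettes)
    rows, cols = len(silhouettes[0]), len(silhouettes[0][0])
    return [[sum(f[r][c] for f in silhouettes) / n for c in range(cols)]
            for r in range(rows)]

def lower_limb_motion(silhouettes):
    """Stand-in for the dynamic stream (assumption, not the paper's network):
    mean absolute frame-to-frame change per pixel in the lower half.
    Requires at least two frames."""
    half = len(silhouettes[0]) // 2
    lower = [f[half:] for f in silhouettes]
    pairs = list(zip(lower, lower[1:]))
    return [sum(abs(b[r][c] - a[r][c]) for a, b in pairs) / len(pairs)
            for r in range(len(lower[0]))
            for c in range(len(lower[0][0]))]

def describe(silhouettes):
    """Fuse static and dynamic features; concatenation is an assumption."""
    static = [p for row in gait_energy_image(silhouettes) for p in row]
    return static + lower_limb_motion(silhouettes)

def nearest_neighbor(probe, gallery):
    """Return the label of the gallery feature closest to the probe
    (Euclidean distance, assumed here)."""
    return min(gallery, key=lambda item: math.dist(probe, item[1]))[0]

# Toy 2x2 silhouette sequences, two frames each (illustrative data only).
seq_a = [[[1, 0], [1, 0]], [[1, 0], [0, 1]]]
seq_b = [[[0, 1], [0, 1]], [[0, 1], [0, 1]]]
probe = [[[1, 0], [1, 0]], [[1, 0], [1, 0]]]

gallery = [("A", describe(seq_a)), ("B", describe(seq_b))]
predicted = nearest_neighbor(describe(probe), gallery)
print(predicted)
```

In this toy example the probe is matched to subject "A", whose fused feature lies closer to the probe than that of subject "B". In the actual method, learned network features would take the place of `describe`.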


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
