Recognition of Hybrid Graphic-Text License Plates

John Anthony C. Jose†, Allysa Kate M. Brillantes, Elmer P. Dadios, Edwin Sybingco, Laurence A. Gan Lim, Alexis M. Fillone, and Robert Kerwin C. Billones

De La Salle University

2401 Taft Avenue, Manila 1004, Philippines

†Corresponding author

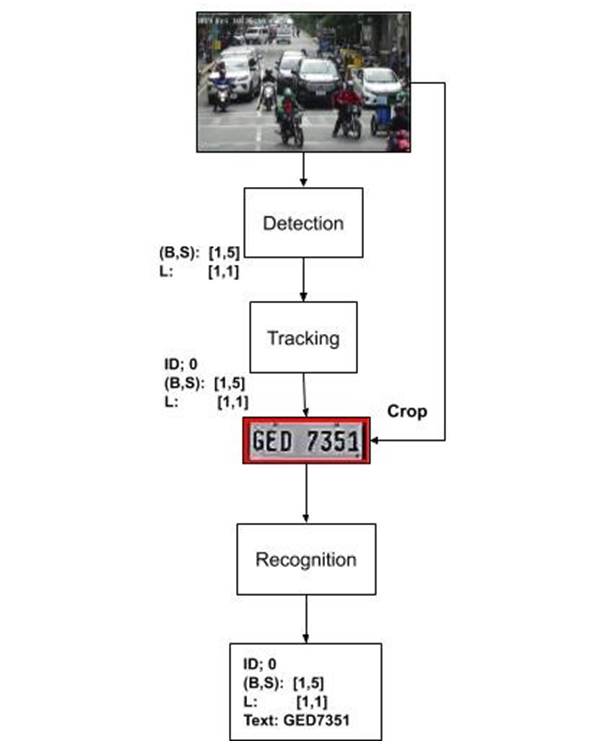

Most automatic license-plate recognition (ALPR) systems use still images and ignore the temporal information in videos. Videos provide rich temporal and motion information that should be exploited during both training and testing. This study develops an ALPR system that operates on video. The proposed system consists of detection, tracking, and recognition modules. The system achieved accuracies of 81.473% for license-plate detection and 84.237% for license-plate classification.
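The abstract describes a video pipeline made of detection, tracking, and recognition modules. The sketch below shows, under stated assumptions, how such stages could fit together and one simple way temporal information might be used: per-frame plate readings are grouped by track and resolved by majority vote. The detection module is replaced by precomputed boxes, the tracker is a basic greedy IoU matcher, and the recognizer is a lookup stub. All names (`track`, `recognize_tracks`, `readings`) are hypothetical, and nothing here is claimed to be the authors' implementation.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical box format: (x1, y1, x2, y2) in pixel coordinates.
Box = Tuple[float, float, float, float]


@dataclass
class Detection:
    frame: int          # index of the video frame
    box: Box            # plate box from the (stubbed) detection module
    track_id: int = -1  # filled in by the tracking module


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def track(frames: Sequence[List[Box]], min_iou: float = 0.3) -> List[Detection]:
    """Hypothetical greedy IoU tracker: each box inherits the ID of the
    best-overlapping unclaimed box in the previous frame, else gets a new ID."""
    result: List[Detection] = []
    prev: List[Detection] = []
    next_id = 0
    for f, boxes in enumerate(frames):
        cur: List[Detection] = []
        claimed = set()
        for box in boxes:
            best_id, best_iou = None, min_iou
            for p in prev:
                score = iou(box, p.box)
                if p.track_id not in claimed and score >= best_iou:
                    best_id, best_iou = p.track_id, score
            if best_id is None:  # no match in the previous frame: start a new track
                best_id, next_id = next_id, next_id + 1
            claimed.add(best_id)
            cur.append(Detection(f, box, best_id))
        result.extend(cur)
        prev = cur
    return result


def recognize_tracks(dets: List[Detection],
                     read_plate: Callable[[Detection], str]) -> Dict[int, str]:
    """Read every detection, then keep the most frequent string per track."""
    votes: Dict[int, Counter] = defaultdict(Counter)
    for d in dets:
        votes[d.track_id][read_plate(d)] += 1
    return {tid: c.most_common(1)[0][0] for tid, c in votes.items()}


# Usage: two plates over three frames; the recognizer misreads one frame.
frames = [
    [(10, 10, 50, 30), (200, 200, 240, 220)],
    [(12, 10, 52, 30), (202, 200, 242, 220)],
    [(14, 10, 54, 30)],
]
readings = {  # stand-in for a real recognizer, keyed by (frame, track_id)
    (0, 0): "ABC 1234", (1, 0): "A8C 1234", (2, 0): "ABC 1234",
    (0, 1): "XYZ 789", (1, 1): "XYZ 789",
}
dets = track(frames)
plates = recognize_tracks(dets, lambda d: readings[(d.frame, d.track_id)])
print(plates)  # {0: 'ABC 1234', 1: 'XYZ 789'}
```

Voting over a whole track lets a single misread frame (here `A8C 1234`) be outvoted by the other frames of the same plate, which a still-image system cannot do. A real system might instead weight each vote by the recognizer's confidence.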

Proposed ALPR system


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.