Paper:

Automatic Neonatal Alertness State Classification Based on Facial Expression Recognition

Kento Morita*1, Nobu C. Shirai*2, Harumi Shinkoda*3, Asami Matsumoto*4, Yukari Noguchi*5, Masako Shiramizu*6, and Tetsushi Wakabayashi*1

*1Graduate School of Engineering, Mie University

1577 Kurimamachiya-cho, Tsu, Mie 514-8507, Japan

*2Center for Information Technologies and Networks, Mie University

1577 Kurimamachiya-cho, Tsu, Mie 514-8507, Japan

*3Kagoshima Immaculate Heart University

2365 Amatatsu-cho, Satsumasendai, Kagoshima 895-0011, Japan

*4Suzuka University of Medical Science

3500-3 Minamitamagaki, Suzuka, Mie 513-8670, Japan

*5St. Mary College

422 Tubuku-Honmachi, Kurume, Fukuoka 830-8558, Japan

*6Kyushu University Hospital

3-5-25 Maidashi, Higashi-ku, Fukuoka, Fukuoka 812-8582, Japan

Premature babies are admitted to the neonatal intensive care unit (NICU) for several weeks and are generally placed under close medical supervision. The NICU environment is considered to adversely affect the formation of the neonatal sleep-wake cycle, known as the circadian rhythm, because patient monitoring and treatment equipment emit light and noise throughout the day. To improve the neonatal environment, researchers have investigated the effects of light and noise on neonates. Several methods and devices exist for measuring neonatal alertness, but they place an additional burden on neonatal patients or nurses. Therefore, this study proposes an automatic, non-contact neonatal alertness state classification method using video images. The proposed method consists of a face region of interest (ROI) location normalization method, feature extraction methods based on the histogram of oriented gradients (HOG) and on gradient features, and a neonatal alertness state classification method using machine learning. Comparison experiments using 14 video images of 7 neonatal subjects showed that the weighted support vector machine (w-SVM) using the HOG feature and averaging merge achieved the highest classification performance (micro-F1 of 0.732). In clinical situations, waking states are classified primarily by evaluating body movement. Additional four-class classification experiments were therefore conducted by combining the waking states into a single class; the results suggest that the proposed facial-expression-based classification is suitable for the detailed classification of sleeping states.
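The classification stage can be illustrated with a minimal sketch. It assumes that the weighted SVM corresponds to a class-weighted SVM as provided by scikit-learn (`class_weight="balanced"`), which the abstract does not confirm. The feature vectors are synthetic stand-ins for HOG descriptors of the normalized face ROI, and the class counts, feature dimension, and kernel are illustrative choices, not the authors' settings.

```python
import numpy as np
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

rng = np.random.default_rng(0)

# Synthetic stand-in for per-frame feature vectors (hypothetical data).
# Six alertness states with deliberately imbalanced sample counts.
n_states = 6
counts = [300, 200, 60, 40, 30, 20]
dim = 64
X = np.vstack([
    rng.normal(loc=0.6 * k, scale=1.0, size=(n, dim))
    for k, n in enumerate(counts)
])
y = np.concatenate([np.full(n, k) for k, n in enumerate(counts)])

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# class_weight="balanced" scales the penalty C of each class inversely to its
# frequency, so rare states are not swamped by frequent ones (assumed here to
# play the role of the "weighted" SVM).
clf = make_pipeline(StandardScaler(), SVC(kernel="rbf", class_weight="balanced"))
clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)
micro_f1 = f1_score(y_test, y_pred, average="micro")
print(f"micro-F1 on synthetic data: {micro_f1:.3f}")
```

The random frame-level split is used only to keep the sketch short. With real recordings, splitting by subject would presumably be needed so that frames of the same neonate do not appear in both the training and test sets.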

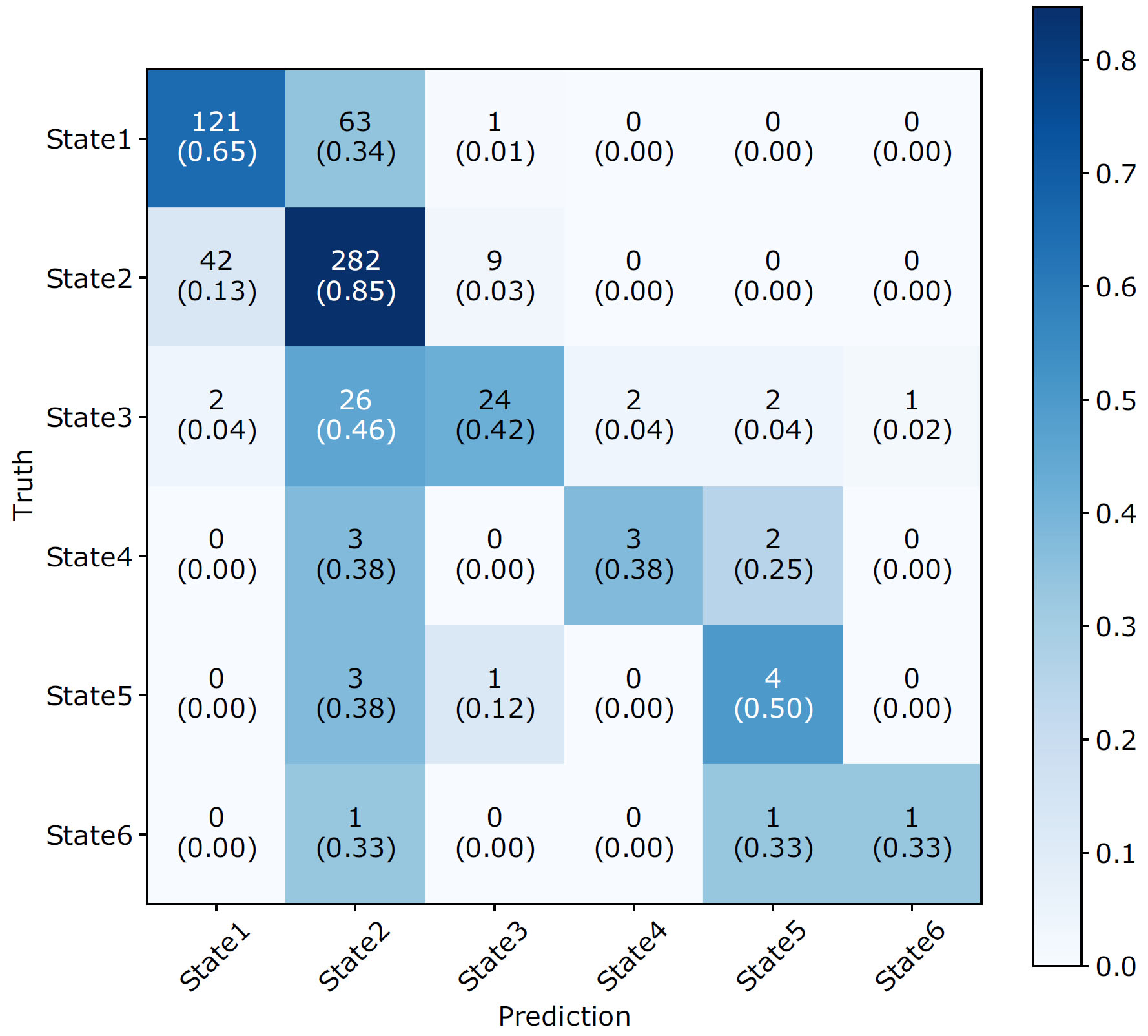

Confusion matrix for the 6-class classification
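The relation between the 6-class and 4-class evaluations can be sketched from a confusion matrix. The counts below are made up for illustration and are not the paper's results. The grouping assumes that three of the six states are waking states and are merged into one class, which is consistent with going from six to four classes but is not spelled out in the abstract. The helper names `micro_f1` and `merge_classes` are hypothetical.

```python
def micro_f1(confusion):
    """Micro-averaged F1 from a square confusion matrix (rows: true, cols: predicted)."""
    n = len(confusion)
    tp = sum(confusion[i][i] for i in range(n))
    total = sum(sum(row) for row in confusion)
    # Each off-diagonal count is one false positive (for the predicted class)
    # and one false negative (for the true class).
    fp = total - tp
    fn = total - tp
    return 2 * tp / (2 * tp + fp + fn)


def merge_classes(confusion, groups):
    """Collapse a confusion matrix; groups[i] is the merged index of class i."""
    m = max(groups) + 1
    merged = [[0] * m for _ in range(m)]
    for i, row in enumerate(confusion):
        for j, count in enumerate(row):
            merged[groups[i]][groups[j]] += count
    return merged


# Hypothetical 6x6 counts (NOT taken from the paper).
cm6 = [
    [50, 5, 2, 0, 0, 0],
    [6, 40, 4, 1, 0, 0],
    [2, 5, 20, 3, 1, 0],
    [0, 1, 3, 10, 4, 1],
    [0, 0, 1, 4, 8, 3],
    [0, 0, 0, 1, 3, 6],
]

# Assumed grouping: classes 0-2 stay separate, classes 3-5 (waking) are merged.
groups = [0, 1, 2, 3, 3, 3]
cm4 = merge_classes(cm6, groups)

print(f"6-class micro-F1: {micro_f1(cm6):.3f}")
print(f"4-class micro-F1: {micro_f1(cm4):.3f}")
```

For single-label classification, micro-F1 equals overall accuracy. Merging classes can only turn errors between the merged classes into correct predictions, so the 4-class score is never lower than the 6-class score computed from the same predictions, and the two figures should be compared with that in mind.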

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
