Paper:

Visualization Method Corresponding to Regression Problems and Its Application to Deep Learning-Based Gaze Estimation Model

Daigo Kanda, Shin Kawai, and Hajime Nobuhara

Department of Intelligent Interaction Technologies, Graduate School of Systems and Information Engineering, University of Tsukuba

1-1-1 Tennoudai, Tsukuba, Ibaraki 305-8573, Japan

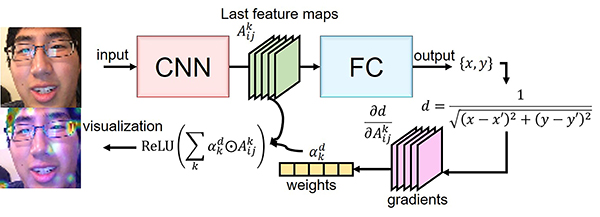

The human gaze carries substantial personal information and could be used in a wide range of applications if it can be measured accurately. Many fields could benefit substantially if gaze could be analyzed on popular, familiar smart devices. Deep learning-based methods are robust, which makes them important for gaze estimation on such devices. However, because the internal functions of deep learning models are black boxes, these systems often produce estimates for reasons that are unclear. In this paper, we propose a visualization method adapted to regression problems to address the black box problem of deep learning-based gaze estimation models. The proposed method clarifies which regions of an image contribute to the gaze estimate. We applied it to the gaze estimation model proposed by a research group at the Massachusetts Institute of Technology. The results showed that the estimation accuracy was low even when the facial features important for gaze estimation were recognized correctly. The effectiveness of the proposed method was further confirmed through quantitative evaluation using the area over the most-relevant-first (MoRF) perturbation curve (AOPC).
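To make the AOPC evaluation concrete, the following is a minimal sketch of how the area over the MoRF perturbation curve might be computed for a single image. It is not the implementation used in this paper. The function name `aopc_morf`, the scalar scoring function `predict`, the patch size, the number of steps, and the uniform-noise perturbation are all illustrative assumptions. For a regression model such as a gaze estimator, `predict` could return, for example, the negative estimation error, which is also an assumption.

```python
import numpy as np

def aopc_morf(predict, image, heatmap, patch=4, steps=10, rng=None):
    """Mean drop in predict(image) while patches are perturbed most relevant first.

    Assumes a 2-D image and a heatmap of the same shape (illustrative only).
    """
    rng = np.random.default_rng(0) if rng is None else rng
    h, w = heatmap.shape
    # Score each non-overlapping patch by its summed relevance.
    cells = [(heatmap[r:r + patch, c:c + patch].sum(), r, c)
             for r in range(0, h, patch)
             for c in range(0, w, patch)]
    cells.sort(key=lambda t: t[0], reverse=True)  # most relevant first (MoRF)

    x = image.astype(float).copy()
    base = predict(x)
    drops = [0.0]  # k = 0 term: nothing has been perturbed yet
    for _, r, c in cells[:steps]:
        shape = x[r:r + patch, c:c + patch].shape
        # Replace the patch with uniform noise (one possible perturbation).
        x[r:r + patch, c:c + patch] = rng.uniform(0.0, 1.0, size=shape)
        drops.append(base - predict(x))
    # Average over the L + 1 perturbation steps, including k = 0.
    return float(np.mean(drops))
```

A heatmap that ranks the truly influential regions first should produce a larger value than one that ranks irrelevant regions first. In practice the value would also be averaged over all images in the evaluation set, which this single-image sketch omits.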

Grad-CAM variant corresponding to regression problems

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.