Paper:

Study on Development of Humor Discriminator for Dialogue System

Tomohiro Yoshikawa and Ryosuke Iwakura

Graduate School of Engineering, Nagoya University

Furo-cho, Chikusa-ku, Nagoya, Aichi 464-8603, Japan

Automatic dialogue systems, which allow people and computers to communicate in natural language, have been attracting attention. In particular, the main objective of a non-task-oriented dialogue system is not to accomplish a specific task but to entertain users through chat and free dialogue. For this type of system, sustaining the dialogue is important because users quickly lose interest when the dialogue is monotonous. Previous studies have shown that utterances containing humorous expressions are effective in improving the continuity of a dialogue. In this study, we developed a computer-based humor discriminator that judges humor objectively, independent of the user and the situation. Using the humor discriminator, we also developed an automatic humor generation system and conducted an evaluation experiment with human subjects to assess the generated jokes. A t-test on the evaluation scores revealed a significant difference (p = 3.5 × 10⁻⁵) between the proposed and existing methods of joke generation.

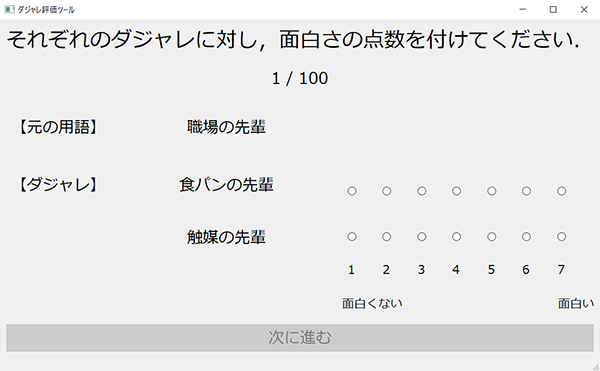

Tool screen for joke evaluation


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
