Paper:

Visual Monocular Localization, Mapping, and Motion Estimation of a Rotating Small Celestial Body

Naoya Takeishi and Takehisa Yairi

Department of Aeronautics and Astronautics, School of Engineering, The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

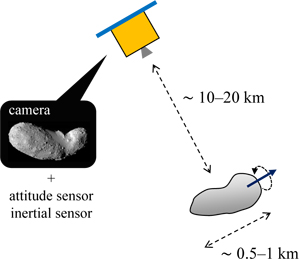

When exploring a small celestial body, it is important to estimate the position and attitude of the spacecraft as well as the geometric properties of the target body. In this paper, we propose a method that estimates these quantities concurrently and in a highly automatic manner, given measurements from an attitude sensor, inertial sensors, and a monocular camera. The proposed method combines an incremental optimization technique, which operates on models for sensor fusion, with a tailored initialization scheme that compensates for the absence of range sensors. We also discuss the challenges of developing a fully automatic navigation framework.*

* This paper is an extended version of a preliminary conference report [1].
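The abstract states that the initialization scheme compensates for the absence of range sensors. A standard reason a monocular camera needs such compensation is scale ambiguity: scaling every landmark position and the camera translation by the same factor leaves every pinhole projection unchanged, so the metric scale cannot be observed from images alone. The following pure-Python sketch checks this numerically under an ideal pinhole model. The helper names (`rot_z`, `project`, `same_image`), the landmark coordinates, and the pose are hypothetical illustrations; the code is not the paper's initialization method.

```python
import math

def rot_z(theta):
    """Rotation matrix (list of rows) about the z-axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def project(point, R, t, f=1.0):
    """Ideal pinhole projection (assumption): x_c = R X + t, then (f x/z, f y/z)."""
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    return (f * xc[0] / xc[2], f * xc[1] / xc[2])

def projections(landmarks, R, t):
    """Project a list of 3D landmarks with the same camera pose."""
    return [project(X, R, t) for X in landmarks]

def scaled(v, s):
    """Multiply a 3-vector by the scalar s."""
    return [s * x for x in v]

def same_image(a, b, tol=1e-9):
    """True if two lists of image points agree to within tol."""
    return all(
        math.isclose(p, q, rel_tol=tol, abs_tol=tol)
        for uv_a, uv_b in zip(a, b)
        for p, q in zip(uv_a, uv_b)
    )

# Hypothetical landmarks and camera pose, with the body about 10 units away.
landmarks = [[1.0, 0.5, 0.2], [-0.3, 0.8, -0.4], [0.2, -0.6, 0.9]]
R = rot_z(0.3)
t = [0.1, -0.2, 10.0]

reference = projections(landmarks, R, t)
for s in (0.5, 2.0, 7.0):
    # Scale the map and the camera translation together: R (s X) + s t = s (R X + t).
    rescaled = projections([scaled(X, s) for X in landmarks], R, scaled(t, s))
    print(s, same_image(reference, rescaled))
```

Scaling only the landmarks while keeping `t` fixed does change the image, so it is the joint scaling of map and translation that is unobservable. This is why a monocular system needs some additional information or assumption to fix the metric scale.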

The supposed configuration of a spacecraft

- [1] N. Takeishi and T. Yairi, “Dynamic visual simultaneous localization and mapping for asteroid exploration,” Proc. of the 13th Int. Symposium on Artificial Intelligence, Robotics and Automation in Space, 2016.

- [2] S. Thrun et al., “Probabilistic Robotics,” The MIT Press, 2005.

- [3] C. Stachniss et al., “Simultaneous localization and mapping,” Handbook of Robotics, 2nd edition, pp. 1153-1176, Springer, 2016.

- [4] A. Sujiwo et al., “Monocular vision-based localization using ORB-SLAM with LIDAR-aided mapping in real-world robot challenge,” J. of Robotics and Mechatronics, Vol.28, No.4, pp. 479-490, 2016.

- [5] M. Yokozuka and O. Matsumoto, “Accurate localization for making maps to mobile robots using odometry and GPS without scan-matching,” J. of Robotics and Mechatronics, Vol.27, No.4, pp. 410-418, 2015.

- [6] T. Suzuki et al., “3D terrain reconstruction by small unmanned aerial vehicle using SIFT-based monocular SLAM,” J. of Robotics and Mechatronics, Vol.23, No.2, pp. 292-301, 2011.

- [7] R. I. Hartley and A. Zisserman, “Multiple View Geometry in Computer Vision,” Cambridge University Press, 2000.

- [8] M. Kaess et al., “iSAM: Incremental smoothing and mapping,” IEEE Trans. on Robotics, Vol.24, No.6, pp. 1365-1378, 2008.

- [9] B. E. Tweddle, “Computer Vision-based Localization and Mapping of an Unknown, Uncooperative and Spinning Target for Spacecraft,” Ph.D. thesis, The Massachusetts Institute of Technology, 2013.

- [10] B. E. Tweddle et al., “Factor graph modeling of rigid-body dynamics for localization, mapping and parameter estimation of a spinning object in space,” J. of Field Robotics, Vol.32, No.6, pp. 897-933, 2015.

- [11] R. Kuemmerle et al., “g2o: A general framework for graph optimization,” Proc. of IEEE Int. Conf. on Robotics and Automation, pp. 3607-3613, 2011.

- [12] M. Kaess et al., “iSAM2: Incremental smoothing and mapping using the Bayes tree,” The Int. J. of Robotics Research, Vol.31, No.2, pp. 216-235, 2012.

- [13] D. G. Lowe, “Distinctive image features from scale-invariant keypoints,” Int. J. of Computer Vision, Vol.60, pp. 91-110, 2004.

- [14] N. Takeishi et al., “Evaluation of interest-region detectors and descriptors for automatic landmark tracking on asteroids,” Trans. of the Japan Society for Aeronautical and Space Science, Vol.58, No.1, pp. 45-53, 2015.

- [15] M. A. Fischler and R. C. Bolles, “Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography,” Communications of the ACM, Vol.24, No.6, pp. 381-395, 1981.

- [16] D. Nistér, “An efficient solution to the five-point relative pose problem,” IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol.26, No.6, pp. 756-770, 2004.

- [17] V. Lepetit et al., “EPnP: An accurate O(n) solution to the PnP problem,” Int. J. of Computer Vision, Vol.81, No.2, p. 155, 2008.

- [18] Y. Zheng et al., “Revisiting the PnP problem: A fast, general and optimal solution,” Proc. of IEEE Int. Conf. on Computer Vision, pp. 2344-2351, 2013.

- [19] D. S. Bayard and P. B. Brugarolas, “On-board vision-based spacecraft estimation algorithm for small body exploration,” IEEE Trans. on Aerospace and Electronic Systems, Vol.44, No.1, pp. 243-260, 2008.

- [20] S. Augenstein and S. M. Rock, “Improved frame-to-frame pose tracking during vision-only SLAM/SFM with a tumbling target,” Proc. of IEEE Int. Conf. on Robotics and Automation, pp. 3131-3138, 2011.

- [21] C. Cocaud and T. Kubota, “SLAM-based navigation scheme for pinpoint landing on small celestial body,” Advanced Robotics, Vol.26, No.15, pp. 1747-1770, 2012.

- [22] N. Takeishi et al., “Simultaneous estimation of shape and motion of an asteroid for automatic navigation,” Proc. of IEEE Int. Conf. on Robotics and Automation, pp. 2861-2866, 2015.

- [23] M. D. Lichter and S. Dubowsky, “State, shape, and parameter estimation of space objects from range images,” Proc. of IEEE Int. Conf. on Robotics and Automation, Vol.3, pp. 2974-2979, 2004.

- [24] M. D. Lichter, “Shape, motion, and inertial parameter estimation of space objects using teams of cooperative vision sensors,” Ph.D. thesis, The Massachusetts Institute of Technology, 2005.

- [25] U. Hillenbrand and R. Lampariello, “Motion and parameter estimation of a free-floating space object from range data for motion prediction,” Proc. of the 8th Int. Symposium on Artificial Intelligence, Robotics and Automation in Space, 2005.

- [26] F. Aghili, “A prediction and motion-planning scheme for visually guided robotic capturing of free-floating tumbling objects with uncertain dynamics,” IEEE Trans. on Robotics, Vol.28, No.3, pp. 634-649, 2012.

- [27] M. Maruya et al., “Navigation shape and surface topography model of Itokawa,” Proc. of AIAA/AAS Astrodynamics Specialist Conf. and Exhibit, AIAA 2006-6659, 2006.

- [28] H. Demura et al., “Pole and global shape of 25143 Itokawa,” Science, Vol.312, No.5778, pp. 1347-1349, 2006.

- [29] K. Shirakawa et al., “Accurate landmark tracking for navigating Hayabusa prior to final descent,” Advances in the Astronautical Sciences, Vol.124, pp. 1817-1826, 2006.

- [30] R. Gaskell et al., “Landmark navigation studies and target characterization in the Hayabusa encounter with Itokawa,” Proc. of AIAA/AAS Astrodynamics Specialist Conf. and Exhibit, AIAA 2006-6660, 2006.

- [31] F. Preusker et al., “Shape model, reference system definition, and cartographic mapping standards for comet 67P/Churyumov-Gerasimenko – stereo-photogrammetric analysis of Rosetta/OSIRIS image data,” Astronomy and Astrophysics, Vol.583, A33, 2015.

- [32] L. Jorda et al., “The global shape, density and rotation of Comet 67P/Churyumov-Gerasimenko from preperihelion Rosetta/OSIRIS observations,” Icarus, Vol.277, pp. 257-278, 2016.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
