Paper:

Audio-Visual Beat Tracking Based on a State-Space Model for a Robot Dancer Performing with a Human Dancer

Misato Ohkita, Yoshiaki Bando, Eita Nakamura, Katsutoshi Itoyama, and Kazuyoshi Yoshii

Graduate School of Informatics, Kyoto University

Yoshida-honmachi, Sakyo-ku, Kyoto 606-8501, Japan

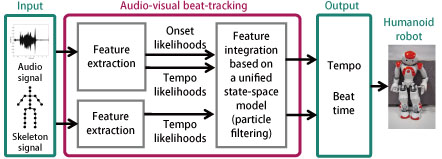

An overview of real-time audio-visual beat tracking for music audio signals and human dance moves.
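The caption summarizes the task: estimating beat times online from two streams, the music audio and the human partner's dance movements, using a state-space model. To illustrate how such a model can fuse the two streams, the following Python sketch tracks a hidden state made of beat phase and beat period with a particle filter, which is one common way to run online inference in a nonlinear state-space model. It is a hypothetical sketch and not the authors' formulation. The class `AVBeatParticleFilter`, the per-frame onset-strength inputs in [0, 1], the Gaussian-bump likelihood, the conditional-independence assumption between audio and visual observations, and all parameter values are assumptions made for illustration.

```python
import math
import random


def beat_proximity(phase, sigma):
    """Gaussian bump: 1.0 when the phase sits on a beat (phase 0), decaying away from it."""
    d = min(phase, 1.0 - phase)  # circular distance to the nearest beat position
    return math.exp(-d * d / (2.0 * sigma * sigma))


class AVBeatParticleFilter:
    """Hypothetical sketch (not the paper's model): hidden state = (beat phase, beat period).

    Phase lives in [0, 1) and wraps at each beat; the period is measured in frames.
    Audio and visual onset strengths in [0, 1] are assumed conditionally independent
    given the state, so their log-likelihoods simply add.
    """

    def __init__(self, n_particles=500, period_range=(12.0, 30.0),
                 kappa_audio=4.0, kappa_visual=2.0, sigma_beat=0.05,
                 period_noise=0.05, phase_noise=0.005, seed=0):
        self.rng = random.Random(seed)
        self.lo, self.hi = period_range
        self.kappa_audio, self.kappa_visual = kappa_audio, kappa_visual
        self.sigma_beat = sigma_beat
        self.period_noise, self.phase_noise = period_noise, phase_noise
        self.phases = [self.rng.random() for _ in range(n_particles)]
        self.periods = [self.rng.uniform(self.lo, self.hi) for _ in range(n_particles)]
        self.weights = [1.0 / n_particles] * n_particles
        self.prev_mean_phase = self.mean_phase()

    def mean_phase(self):
        """Weighted circular mean of the particles' beat phases."""
        s = sum(w * math.sin(2 * math.pi * p) for w, p in zip(self.weights, self.phases))
        c = sum(w * math.cos(2 * math.pi * p) for w, p in zip(self.weights, self.phases))
        return (math.atan2(s, c) / (2 * math.pi)) % 1.0

    def period(self):
        """Posterior-mean beat period in frames."""
        return sum(w * t for w, t in zip(self.weights, self.periods))

    def step(self, audio_onset, visual_onset):
        """Process one frame of onset strengths; return True if a beat is reported here."""
        n = len(self.phases)
        for i in range(n):
            # Transition: random-walk tempo, then advance the phase by one frame.
            t = self.periods[i] + self.rng.gauss(0.0, self.period_noise)
            self.periods[i] = min(self.hi, max(self.lo, t))
            self.phases[i] = (self.phases[i] + 1.0 / self.periods[i]
                              + self.rng.gauss(0.0, self.phase_noise)) % 1.0
            # Observation: onsets near a predicted beat raise the weight,
            # a predicted beat with no onset in either stream lowers it.
            g = beat_proximity(self.phases[i], self.sigma_beat)
            log_lik = (self.kappa_audio * (audio_onset - 0.5)
                       + self.kappa_visual * (visual_onset - 0.5)) * g
            self.weights[i] *= math.exp(log_lik)
        total = sum(self.weights)
        self.weights = [w / total for w in self.weights]
        # Systematic resampling once the effective sample size drops below n / 2.
        if 1.0 / sum(w * w for w in self.weights) < n / 2:
            self._resample()
        mean = self.mean_phase()
        is_beat = mean < self.prev_mean_phase  # the mean phase wrapped past 0
        self.prev_mean_phase = mean
        return is_beat

    def _resample(self):
        n = len(self.weights)
        u = self.rng.random() / n
        cumulative, j, idx = self.weights[0], 0, []
        for k in range(n):
            target = u + k / n
            while target > cumulative and j < n - 1:
                j += 1
                cumulative += self.weights[j]
            idx.append(j)
        self.phases = [self.phases[j] for j in idx]
        self.periods = [self.periods[j] for j in idx]
        self.weights = [1.0 / n] * n


if __name__ == "__main__":
    # Synthetic input: both streams show an onset every 20 frames.
    tracker = AVBeatParticleFilter(seed=1)
    beats = []
    for frame in range(400):
        onset = 1.0 if frame % 20 == 0 else 0.0
        if tracker.step(audio_onset=onset, visual_onset=onset):
            beats.append(frame)
    print("estimated period (frames):", round(tracker.period(), 2))
    print("last reported beats:", beats[-5:])
```

In this sketch an onset rewards particles that predict a beat at that frame, while a predicted beat without onsets is penalized; the penalty term is what discourages the filter from settling on a fraction of the true period. Weighting `kappa_audio` above `kappa_visual` is likewise an arbitrary choice that would need tuning on real recordings and dance data.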

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.