Paper:

Unconstrained Home Appliance Operation by Detecting Pointing Gestures from Multiple Camera Views

Masae Yokota*, Soichiro Majima*, Sarthak Pathak**, and Kazunori Umeda**

*Precision Engineering Course, Graduate School of Science and Engineering, Chuo University

1-13-27 Kasuga, Bunkyo-ku, Tokyo 112-8551, Japan

**Department of Precision Mechanics, Faculty of Science and Engineering, Chuo University

1-13-27 Kasuga, Bunkyo-ku, Tokyo 112-8551, Japan

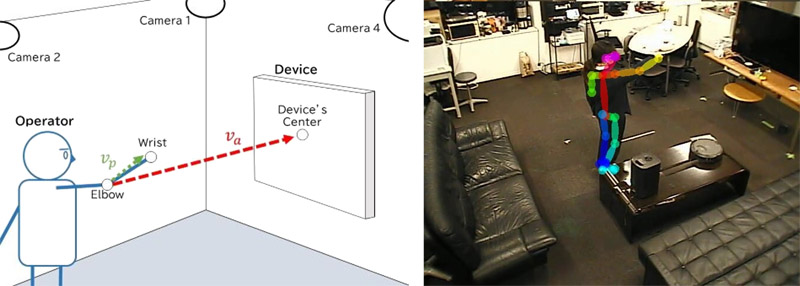

In this paper, we propose a method for manipulating home appliances using arm-pointing gestures. Conventional gesture-based methods are limited to home appliances with known locations or are device specific. In the proposed method, the locations of home appliances and users can change freely. Our method uses object- and keypoint-detection algorithms to obtain the positions of the appliance and operator in real time. Pointing gestures are used to operate the device. In addition, we propose a start gesture algorithm to make the system robust against accidental gestures. We experimentally demonstrated that using the proposed method, home appliances can be operated with high accuracy and robustness, regardless of their location or the user’s location in real environments.

Equipment operation by arm pointing
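The abstract does not spell out how a pointing gesture is matched to an appliance, so the following is only a minimal sketch of one plausible selection step, not the authors' algorithm. It assumes that 3D positions of the operator's elbow and wrist (from keypoint detection) and of each appliance (from object detection) have already been recovered from the multiple camera views. The function name `pick_pointed_appliance`, the elbow-to-wrist ray, and the 15-degree angular threshold are all hypothetical choices made for illustration.

```python
import math


def pick_pointed_appliance(elbow, wrist, appliances, max_angle_deg=15.0):
    """Return the name of the appliance best aligned with the pointing ray.

    elbow, wrist: (x, y, z) positions of the operator's arm keypoints.
    appliances:   dict mapping an appliance name to its (x, y, z) position.
    max_angle_deg: hypothetical tolerance; targets farther off-axis are ignored.
    Returns None when no appliance lies within the tolerance.
    """
    # Pointing direction, assumed here to run from the elbow through the wrist.
    ray = [w - e for w, e in zip(wrist, elbow)]
    ray_norm = math.sqrt(sum(c * c for c in ray))
    if ray_norm == 0.0:
        return None  # degenerate arm pose, no direction available

    best_name, best_angle = None, max_angle_deg
    for name, position in appliances.items():
        # Vector from the wrist to the candidate appliance.
        to_target = [p - w for p, w in zip(position, wrist)]
        distance = math.sqrt(sum(c * c for c in to_target))
        if distance == 0.0:
            continue
        cos_angle = sum(r * t for r, t in zip(ray, to_target)) / (ray_norm * distance)
        # Clamp to guard against rounding slightly outside [-1, 1].
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name


# Illustrative positions in metres (made-up values, not from the paper).
room = {"tv": (3.0, 0.1, 0.0), "fan": (0.0, 3.0, 0.0)}
selected = pick_pointed_appliance((0.0, 0.0, 0.0), (0.3, 0.0, 0.0), room)
```

Because the selection depends only on the current keypoint and appliance positions, a step of this kind would keep working when either the user or an appliance moves, which is the property the abstract emphasizes. A start gesture, as the abstract proposes, would gate when such a selection is acted on.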


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
