Paper:

Person Searching Through an Omnidirectional Camera Using CNN in the Tsukuba Challenge

Shingo Nakamura, Tadahiro Hasegawa, Tsubasa Hiraoka, Yoshinori Ochiai, and Shin’ichi Yuta

Shibaura Institute of Technology

3-7-5 Toyosu, Koto-ku, Tokyo 135-8548, Japan

The Tsukuba Challenge is a competition in which autonomous mobile robots run along a route set on public roads in a real environment. The task includes not only running the course but also finding multiple specific persons at the same time. This study proposes a method for carrying out this person search. Whereas many person-searching algorithms combine a laser sensor with a camera, our method uses only an omnidirectional camera. The search target is detected by a convolutional neural network (CNN) that classifies the search target. Because training a CNN requires a large amount of data, pseudo images created by image composition are used as training data. The method was implemented on an autonomous mobile robot, and its performance was verified in the Tsukuba Challenge 2017.
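The abstract states only that pseudo images created by composition are used to train the CNN; it does not describe the procedure. The following is a minimal sketch of one common way to do this, assuming a segmented person cutout with an alpha mask and a set of equally sized background frames: the cutout is pasted at a random position with a random horizontal flip and brightness gain and labelled positive, while untouched backgrounds serve as negatives. The helper names `composite_pseudo_image` and `make_dataset`, the augmentation choices, and the binary labelling are all hypothetical illustrations, not the authors' implementation.

```python
import numpy as np


def composite_pseudo_image(background, person, mask, rng, brightness_range=(0.8, 1.2)):
    """Hypothetical helper: paste a person cutout onto a background.

    background: (H, W, 3) uint8 scene image
    person:     (h, w, 3) uint8 cutout of the search target
    mask:       (h, w) float alpha matte in [0, 1], 1 where the person is
    Returns the composited uint8 image and the pasted box (y, x, h, w).
    """
    H, W, _ = background.shape
    h, w, _ = person.shape
    if h > H or w > W:
        raise ValueError("cutout must fit inside the background")

    # Assumed augmentation: random horizontal flip of cutout and matte together.
    if rng.random() < 0.5:
        person = person[:, ::-1]
        mask = mask[:, ::-1]

    # Assumed augmentation: random brightness gain applied to the person only.
    gain = rng.uniform(*brightness_range)
    fg = np.clip(person.astype(np.float32) * gain, 0, 255)

    # Random top-left corner chosen so the cutout stays inside the frame.
    y = int(rng.integers(0, H - h + 1))
    x = int(rng.integers(0, W - w + 1))

    out = background.astype(np.float32)
    region = out[y:y + h, x:x + w]
    alpha = mask[..., None].astype(np.float32)  # broadcast matte over RGB
    out[y:y + h, x:x + w] = alpha * fg + (1.0 - alpha) * region
    return np.rint(out).astype(np.uint8), (y, x, h, w)


def make_dataset(backgrounds, cutouts, n, rng):
    """Hypothetical helper: build n labelled samples (1 = target, 0 = none).

    backgrounds: list of (H, W, 3) uint8 images, all the same size
    cutouts:     list of (person, mask) pairs accepted by composite_pseudo_image
    """
    images, labels = [], []
    for _ in range(n):
        bg = backgrounds[rng.integers(len(backgrounds))]
        if rng.random() < 0.5:
            person, mask = cutouts[rng.integers(len(cutouts))]
            img, _ = composite_pseudo_image(bg, person, mask, rng)
            images.append(img)
            labels.append(1)
        else:
            images.append(bg.copy())
            labels.append(0)
    return np.stack(images), np.array(labels)
```

Compositing in this way lets a small number of photographed target cutouts be combined with many backgrounds, which is the kind of data multiplication the abstract alludes to; a real pipeline would likely also vary scale and account for the distortion of the omnidirectional image, which this sketch omits.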

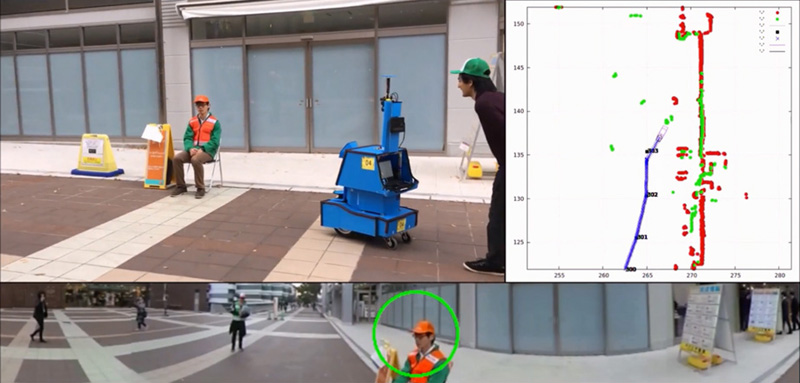

An autonomous robot finding a target person using the proposed method

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.