Paper:

Spherical Video Stabilization by Estimating Rotation from Dense Optical Flow Fields

Sarthak Pathak, Alessandro Moro, Hiromitsu Fujii, Atsushi Yamashita, and Hajime Asama

The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

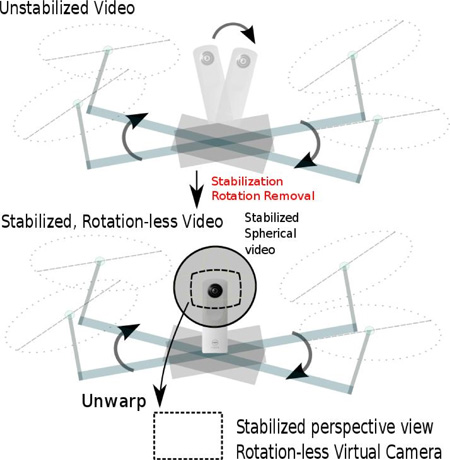

Spherical video stabilization

- [1] S. Hughes, J. Manojlovich, M. Lewis, and J. Gennari, “Camera control and decoupled motion for teleoperation,” Proc. of the IEEE Int. Conf. on Systems, Man and Cybernetics, pp. 1339-1344, October 2003.

- [2] S. Hughes and M. Lewis, “Robotic camera control for remote exploration,” Proc. of the SIGCHI Conf. on Human Factors in Computing Systems, pp. 511-517, July 2004.

- [3] D. G. Lowe, “Distinctive image features from scale-invariant keypoints,” Int. J. of Computer Vision, Vol.60, No.2, pp. 91-110, January 2004.

- [4] M. A. Fischler and R. C. Bolles, “Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography,” Communications of the ACM, Vol.24, No.6, pp. 381-395, June 1981.

- [5] T. Albrecht, T. Tan, G.A.W. West, and T. Ly, “Omnidirectional video stabilisation on a virtual camera using sensor fusion,” Proc. of the Int. Conf. on Control Automation Robotics Vision, pp. 2067-2072, December 2010.

- [6] R. Miyauchi, N. Shiroma, and F. Matsuno, “Development of omni-directional image stabilization system using camera posture information,” Proc. of the IEEE Int. Conf. on Robotics and Biomimetics, pp. 920-925, December 2007.

- [7] K. Kruckel, F. Nolden, A. Ferrein, and I. Scholl, “Intuitive visual teleoperation for UGVs using free-look augmented reality displays,” Proc. of the IEEE Int. Conf. on Robotics and Automation, pp. 4412-4417, May 2015.

- [8] F. Okura, Y. Ueda, T. Sato, and N. Yokoya, “Teleoperation of mobile robots by generating augmented free-viewpoint images,” Proc. of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, pp. 665-671, November 2013.

- [9] M. Kamali, A. Banno, J. C. Bazin, I. S. Kweon, and K. Ikeuchi, “Stabilizing omnidirectional videos using 3D structure and spherical image warping,” Proc. of the IAPR Conf. on Machine Vision Applications, pp. 177-180, June 2011.

- [10] A. Torii, M. Havlena, and T. Pajdla, “Omnidirectional image stabilization by computing camera trajectory,” Advances in Image and Video Technology, Springer Berlin Heidelberg, Vol.5414, pp. 71-82, January 2009.

- [11] M. Kamali, S. Ono, and K. Ikeuchi, “An efficient method for detecting and stabilizing shaky parts of videos in vehicle-mounted cameras,” SEISAN KENKYU, Vol.66, pp. 87-94, January 2015.

- [12] S. Kasahara and J. Rekimoto, “JackIn head: An immersive human-human telepresence system,” SIGGRAPH Asia 2015 Emerging Technologies, pp. 14:1-14:3, November 2015.

- [13] A. Makadia, L. Sorgi, and K. Daniilidis, “Rotation estimation from spherical images,” Proc. of the 17th Int. Conf. on Pattern Recognition 2004 (ICPR 2004), Vol.3, pp. 590-593, August 2004.

- [14] Y. Matsushita, E. Ofek, W. Ge, X. Tang, and H. Y. Shum, “Full-frame video stabilization with motion inpainting,” IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol.28, No.7, pp. 1150-1163, May 2006.

- [15] L. Valgaerts, A. Bruhn, M. Mainberger, and J. Weickert, “Dense versus sparse approaches for estimating the fundamental matrix,” Int. J. of Computer Vision, Vol.96, pp. 212-234, January 2012.

- [16] R. C. Nelson and J. Aloimonos, “Finding motion parameters from spherical motion fields (or the advantages of having eyes in the back of your head),” Biological Cybernetics, Vol.58, pp. 261-273, March 1988.

- [17] T. W. Hui and R. Chung, “Determining motion directly from normal flows upon the use of a spherical eye platform,” Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition, pp. 2267-2274, June 2013.

- [18] Y. Yagi, W. Nishii, K. Yamazawa, and M. Yachida, “Stabilization for mobile robot by using omnidirectional optical flow,” Proc. of the IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, Vol.2, pp. 618-625, November 1996.

- [19] S. Pathak, A. Moro, A. Yamashita, and H. Asama, “A decoupled virtual camera using spherical optical flow,” Proc. of the IEEE Int. Conf. on Image Processing, September 2016.

- [20] A. Torii, A. Imiya, and N. Ohnishi, “Two- and three-view geometry for spherical cameras,” Proc. of the Sixth Workshop on Omnidirectional Vision, Camera Networks and Non-classical Cameras, pp. 81-88, October 2005.

- [21] R. I. Hartley, “In defense of the eight-point algorithm,” IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol.19, No.6, pp. 580-593, June 1997.

- [22] G. Farneback, “Two-frame motion estimation based on polynomial expansion,” Lecture Notes in Computer Science, Vol.2749, pp. 363-370, June 2003.

- [23] J. Fujiki, A. Torii, and S. Akaho, “Epipolar geometry via rectification of spherical images,” Proc. of the Third Int. Conf. on Computer Vision/Computer Graphics Collaboration Techniques, pp. 461-471, March 2007.

- [24] A. Pagani and D. Stricker, “Structure from motion using full spherical panoramic cameras,” Proc. of the IEEE Int. Conf. on Computer Vision (Workshops), pp. 375-382, November 2011.

- [25] D. W. Marquardt, “An algorithm for least-squares estimation of nonlinear parameters,” J. of the Society for Industrial and Applied Mathematics, Vol.11, No.2, pp. 431-441, June 1963.

- [26] H. Taira, Y. Inoue, A. Torii, and M. Okutomi, “Robust feature matching for distorted projection by spherical cameras,” Information and Media Technologies, Vol.10, No.3, pp. 478-482, November 2015.

- [27] P. F. Alcantarilla, J. Nuevo, and A. Bartoli, “Fast explicit diffusion for accelerated features in nonlinear scale spaces,” Proc. of the British Machine Vision Conf., September 2013.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
