Paper:

Communication-Free Adaptive Swarm Robotic System: LLM-Based Decision Making and MARL-Based Multi-Policy Control

Takahiro Yoshida and Yuichiro Sueoka

Department of Mechanical Engineering, Graduate School of Engineering, The University of Osaka

2-1 Yamadaoka, Suita, Osaka 565-0871, Japan

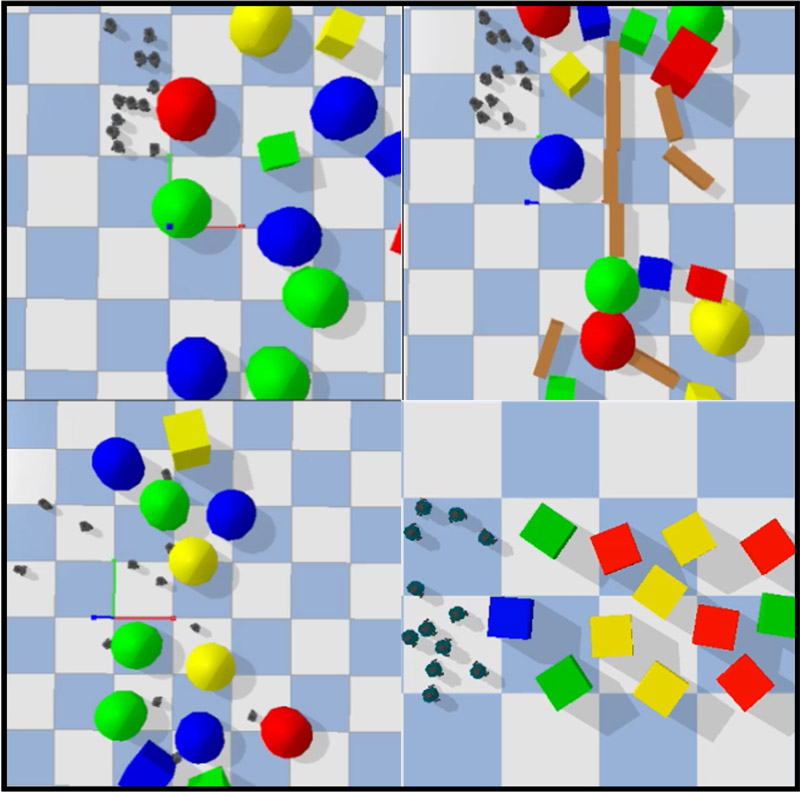

Swarm robotic systems consist of a large number of distributed autonomous robots that coordinate their actions to accomplish diverse tasks beyond the capabilities of a single robot. These systems have recently been considered for deployment in disaster scenarios, where communication is often unstable. In such settings, robots must achieve adaptive cooperative behavior without relying on explicit communication with one another. For multi-robot systems, including swarm robotic systems, several studies have explored approaches that use large language models (LLMs) or other learning-based methods, but few have proposed systems that enable communication-free coordination. In this paper, we propose a system built on a novel method that combines two levels. At the high level, an LLM makes decisions by selecting a policy in response to questionnaire-style prompts. At the low level, robots are controlled by multiple policies trained with multi-agent reinforcement learning (MARL). We consider a complex task scenario in which robots search for a target object and transport it to a designated destination. To evaluate the method, we define implicit consensus as a condition in which a robot selects the same policy as its nearby robots without any explicit communication. The effectiveness of the proposed method is demonstrated through simulated task execution, with particular emphasis on implicit consensus as a key evaluation metric.
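
The abstract defines implicit consensus only in words. As a minimal sketch of how such a metric could be computed for one simulation time step, the code below makes several assumptions that the abstract does not state. It assumes 2-D robot positions, that "nearby" means within a fixed radius, and that policies are discrete labels. It also treats consensus as agreement with every neighbor and skips robots that have no neighbors. The names `neighbors`, `implicit_consensus_rate`, `radius`, and the labels `"search"` and `"transport"` are hypothetical. This is an illustration, not the authors' evaluation code.

```python
import math
from typing import Sequence, Tuple

# Hypothetical representation: a robot's 2-D position in the simulated arena.
Position = Tuple[float, float]


def neighbors(i: int, positions: Sequence[Position], radius: float) -> list[int]:
    """Return indices of robots within `radius` of robot i (robot i excluded).

    Assumption: "nearby" is modeled as a fixed Euclidean sensing radius.
    """
    xi, yi = positions[i]
    return [
        j
        for j, (xj, yj) in enumerate(positions)
        if j != i and math.hypot(xj - xi, yj - yi) <= radius
    ]


def implicit_consensus_rate(
    positions: Sequence[Position], policies: Sequence[str], radius: float
) -> float:
    """Fraction of robots (among those with at least one neighbor) whose
    selected policy matches the policy of every neighbor.

    Assumptions (not from the paper): consensus requires agreement with all
    neighbors, and isolated robots are excluded from the ratio. Returns NaN
    when no robot has a neighbor.
    """
    if len(positions) != len(policies):
        raise ValueError("positions and policies must have the same length")
    evaluated = 0  # robots that have at least one neighbor
    agreeing = 0   # of those, robots that agree with all neighbors
    for i in range(len(positions)):
        nbrs = neighbors(i, positions, radius)
        if not nbrs:
            continue  # isolated robot: nothing to agree with, so skip it
        evaluated += 1
        if all(policies[j] == policies[i] for j in nbrs):
            agreeing += 1
    return agreeing / evaluated if evaluated else float("nan")
```

For example, suppose three robots near the origin all run a search policy, and two robots one unit apart run different policies. With a radius of 1.5, `implicit_consensus_rate` returns 3/5 = 0.6. A sixth robot placed far from the others would be skipped rather than counted as agreeing. Requiring agreement with every neighbor is the strictest reading of the definition. A majority-based variant would only change the `all(...)` test.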

LLM-driven adaptive swarm robotic system

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.