Paper:

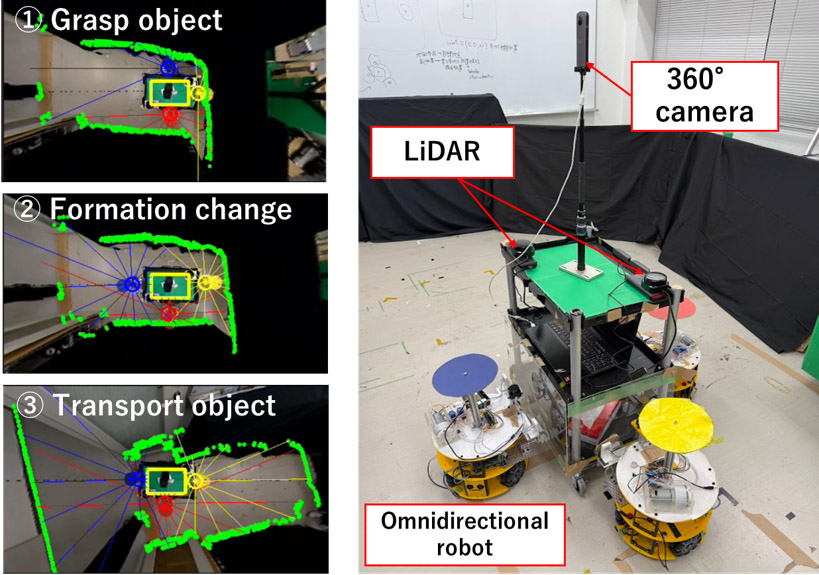

Formation Control of a Cooperative Transportation System with Multiple Robots Using a State Machine with an Integrated Sensing System with an Omnidirectional Camera and LiDARs

Nobutomo Matsunaga* and Taisei Matsuo**

*Faculty of Advanced Science and Technology, Kumamoto University

2-39-1 Kurokami, Chuo-ku, Kumamoto, Kumamoto 860-8555, Japan

**Graduate School of Science and Technology, Kumamoto University

2-39-1 Kurokami, Chuo-ku, Kumamoto, Kumamoto 860-8555, Japan

In cooperative transportation, multiple robots share work that is difficult for a single robot to perform. Because the robots can be combined flexibly to transport objects, the system can operate efficiently according to the situation. In recent years, cooperative transportation has been studied using formation-change algorithms based on reinforcement learning. Although individual tasks, such as transport and formation change, have been studied, the coordination of all tasks in cooperative transport and control has not been discussed. In this paper, a formation-control system using a state machine is proposed for transportation tasks in a complex environment. First, multi-agent reinforcement learning algorithms were used to change the formation. Because cooperative transport requires precise sensing of the environment in the vicinity of the formation, an integrated sensing system was constructed that shares omnidirectional camera and light detection and ranging (LiDAR) sensor information among all the transport robots. Next, the formation was controlled using a state machine with an integrated virtual LiDAR sensor. Finally, the effectiveness of the proposed system was evaluated in two demonstration scenarios with multiple robots.
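The abstract states that the formation is controlled by a state machine driven by an integrated virtual LiDAR sensor, but it does not give the states or the rule for merging the sensors. The following is a minimal Python sketch of that idea under stated assumptions, not the authors' implementation. It assumes that each robot's pose in a common formation frame is already known (for example, estimated from the shared omnidirectional camera) and that each 2D LiDAR returns (angle, range) pairs. The scans are transformed into the formation frame and reduced to one virtual scan by keeping the nearest return in each angular bin around the formation centre. A hypothetical three-state machine (TRANSPORT, FORMATION_CHANGE, STOP) then switches on the clearance in the forward sector. The function names, states, and distance thresholds are illustrative assumptions. Filtering out returns from the robots themselves and from the payload is omitted.

```python
import math
from enum import Enum, auto


def virtual_scan(robot_scans, n_bins=360, max_range=10.0):
    """Merge per-robot scans into one virtual scan centred on the formation origin.

    Hypothetical interface: robot_scans is a list of (pose, scan), where
    pose = (x, y, theta) of a robot in the formation frame and
    scan = list of (angle, range) pairs in that robot's own frame.
    """
    bins = [max_range] * n_bins
    for (x, y, th), scan in robot_scans:
        for ang, r in scan:
            if not (0.0 < r < max_range):
                continue  # drop invalid or out-of-range returns
            # Transform the hit point from the robot frame to the formation frame.
            px = x + r * math.cos(th + ang)
            py = y + r * math.sin(th + ang)
            # Express it in polar coordinates about the formation centre.
            rho = math.hypot(px, py)
            phi = math.atan2(py, px) % (2 * math.pi)
            k = int(phi / (2 * math.pi) * n_bins) % n_bins
            bins[k] = min(bins[k], rho)  # keep the nearest obstacle per bin
    return bins


class State(Enum):
    # Hypothetical states; the paper's actual state set is not given here.
    TRANSPORT = auto()
    FORMATION_CHANGE = auto()
    STOP = auto()


def sector_min(bins, center_deg, half_width_deg):
    """Smallest virtual range within +/- half_width_deg of center_deg."""
    n = len(bins)
    lo = int(round((center_deg - half_width_deg) * n / 360))
    hi = int(round((center_deg + half_width_deg) * n / 360))
    return min(bins[k % n] for k in range(lo, hi + 1))


def next_state(state, bins, stop_dist=0.5, narrow_dist=1.5, clear_dist=2.0):
    """One transition step; the thresholds (in metres) are assumptions."""
    front = sector_min(bins, 0.0, 30.0)  # +x of the formation frame = heading
    if front < stop_dist:
        return State.STOP  # obstacle too close: halt the whole formation
    if state == State.TRANSPORT and front < narrow_dist:
        return State.FORMATION_CHANGE  # e.g. reshape for a narrow passage
    if state == State.FORMATION_CHANGE and front > clear_dist:
        return State.TRANSPORT  # path is clear again
    if state == State.STOP:
        return State.FORMATION_CHANGE  # clearance regained, try to reshape
    return state


if __name__ == "__main__":
    # Two robots in line: the front one sees an obstacle 0.8 m ahead,
    # the rear one (facing backwards) sees a wall 3.0 m behind it.
    scans = [((0.5, 0.0, 0.0), [(0.0, 0.8)]),
             ((-0.5, 0.0, math.pi), [(0.0, 3.0)])]
    bins = virtual_scan(scans)
    print(next_state(State.TRANSPORT, bins))  # State.FORMATION_CHANGE
```

Keeping the minimum range per bin makes the virtual scan conservative: any robot that sees an obstacle in a given direction shortens the formation's clearance in that direction. Using a `clear_dist` larger than `narrow_dist` adds hysteresis, so the machine does not chatter between TRANSPORT and FORMATION_CHANGE when the clearance hovers near a single threshold.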


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.