Paper:

Observable Point Cloud Filtering from Travel Path for Self-Localization in Wide-Area Environments

Kazuma Yagi, Shugo Nishimura, Tatsuki Matsunaga, Kazuyo Tsuzuki, Seiji Aoyagi, and Yasushi Mae

Kansai University

3-3-35 Yamate-cho, Suita, Osaka 564-8680, Japan

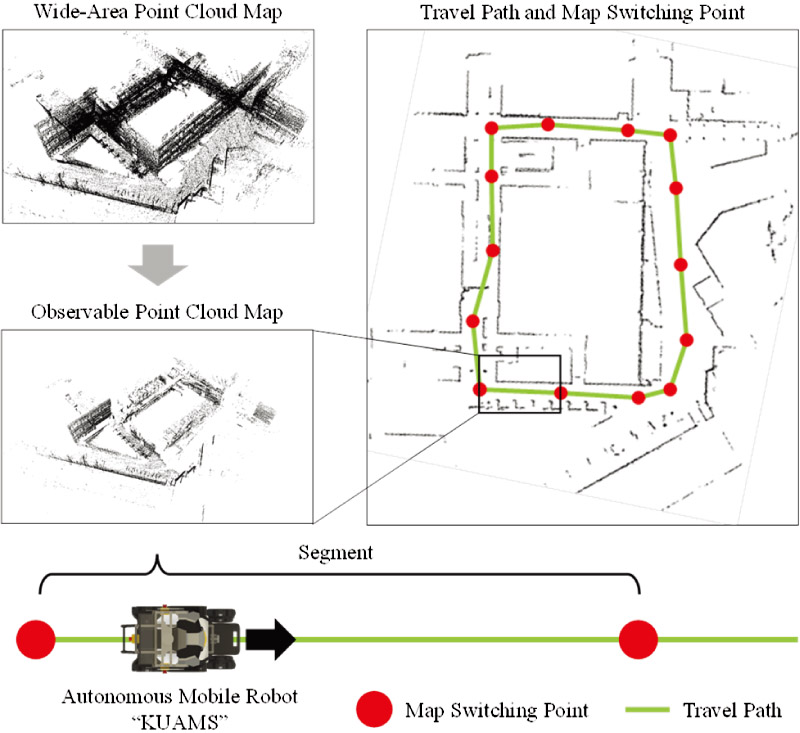

A filtering method for observable point clouds from the travel path is proposed to improve the per-point efficiency of point cloud maps used for the self-localization of autonomous mobile robots. The method retains only those points in the map that the mobile robot can observe along its planned travel path; these points constitute the observable point cloud map. Ray casting from the position of the depth sensor along the travel path extracts the observable regions, while unobservable points, such as those behind obstacles or beyond the sensor range, are removed. The effectiveness of the method is evaluated through comparative self-localization experiments using both the original wide-area point cloud map and the observable point cloud maps. A new metric, localization contribution per point, is introduced to quantify how much each point in the point cloud map contributes to self-localization. The experimental results demonstrate the efficiency of the observable point cloud map for self-localization.

Conceptual diagram of the proposed procedure
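The ray-casting filter described in the abstract can be illustrated with a small sketch. This is not the authors' implementation; it rests on assumptions of our own: the map is voxelized with a hypothetical edge length `voxel_size`, the depth sensor is treated as omnidirectional with a hypothetical maximum range `max_range`, the travel path is given as a list of sensor positions, and a map point counts as observable if the straight line from at least one of those positions reaches the point's voxel without first crossing another occupied voxel. All function names (`voxel_of`, `traverse`, `is_observable`, `filter_observable`) are hypothetical.

```python
import math
from typing import Iterator, List, Sequence, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxel_of(p: Point, size: float) -> Voxel:
    """Index of the cubic voxel (edge length `size`) that contains point p."""
    return (math.floor(p[0] / size), math.floor(p[1] / size), math.floor(p[2] / size))


def traverse(start: Point, end: Point, size: float) -> Iterator[Voxel]:
    """Yield the voxels crossed by the segment start -> end (3D DDA, Amanatides-Woo style)."""
    cur = list(voxel_of(start, size))
    last = voxel_of(end, size)
    step = [0, 0, 0]
    t_max = [math.inf] * 3    # ray parameter t in [0, 1] at the next voxel boundary per axis
    t_delta = [math.inf] * 3  # increase in t needed to cross one whole voxel per axis
    for i in range(3):
        d = end[i] - start[i]
        if d > 0:
            step[i] = 1
            t_max[i] = ((cur[i] + 1) * size - start[i]) / d
            t_delta[i] = size / d
        elif d < 0:
            step[i] = -1
            t_max[i] = (cur[i] * size - start[i]) / d
            t_delta[i] = -size / d
    yield tuple(cur)
    while tuple(cur) != last:
        axis = min(range(3), key=lambda i: t_max[i])  # nearest boundary crossing
        if t_max[axis] > 1.0 + 1e-9:  # guard against rounding past the segment end
            break
        cur[axis] += step[axis]
        t_max[axis] += t_delta[axis]
        yield tuple(cur)


def is_observable(sensor: Point, target: Point, occupied: Set[Voxel], size: float) -> bool:
    """True if the ray from `sensor` reaches the target's voxel before any other occupied voxel."""
    sensor_vox = voxel_of(sensor, size)
    target_vox = voxel_of(target, size)
    for vox in traverse(sensor, target, size):
        if vox == target_vox:
            return True
        if vox != sensor_vox and vox in occupied:
            return False  # line of sight blocked by another map voxel
    return True  # traversal ended early due to rounding at the segment end


def filter_observable(cloud: Sequence[Point], path: Sequence[Point],
                      max_range: float, voxel_size: float) -> List[Point]:
    """Keep map points within range of, and visible from, at least one path position."""
    occupied = {voxel_of(p, voxel_size) for p in cloud}
    kept: List[Point] = []
    for p in cloud:
        for s in path:
            if math.dist(p, s) <= max_range and is_observable(s, p, occupied, voxel_size):
                kept.append(p)
                break  # one observing position is enough
    return kept


if __name__ == "__main__":
    # Toy scene: a wall at x = 3.5, one point hidden behind it, one point far away.
    wall = [(3.5, y, 0.5) for y in (-1.5, -0.5, 0.5, 1.5, 2.5)]
    cloud = wall + [(6.5, 0.5, 0.5), (30.5, 0.5, 0.5)]
    kept = filter_observable(cloud, [(0.5, 0.5, 0.5)], max_range=10.0, voxel_size=1.0)
    print(len(kept), "of", len(cloud), "points kept")
```

In the toy scene, the point hidden behind the wall and the point 30 units away are dropped, leaving the five wall points; adding a second path position behind the wall would make the hidden point observable again. Checking every map point against every path position costs one ray per point-position pair, so a practical implementation would likely prune candidates with a spatial index before casting rays.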

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.