Paper:

Autonomous Method for a Mobile Robot in a Corridor Using Only a Depth Camera that Recognizes the Floor and Wall

Tomoki Sugimoto, Naohiro Sugita, and Kazuya Sato

Department of Mechanical Engineering, Faculty of Science and Engineering, Saga University

1 Honjo, Saga, Saga 840-8502, Japan

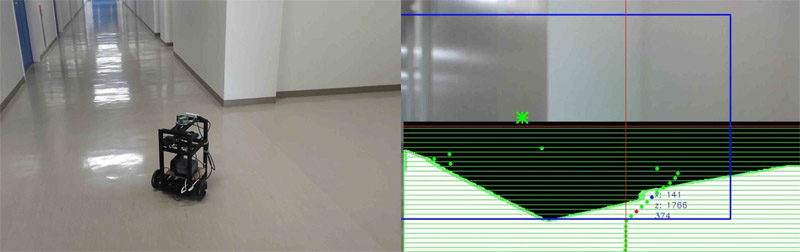

Most mobile robots developed to date are equipped with LiDAR sensors and rely on SLAM for localization in order to move autonomously. However, changing the route of a SLAM-based mobile robot requires specialized knowledge, so not everyone can operate one. Mobile robots are often expected to patrol a predetermined route within a closed indoor space, for example for security patrols or for transporting goods. In such cases, autonomous traveling is possible if the robot can recognize the route it is traveling on, without creating a map of the environment as SLAM does. In this study, we developed an autonomous control system that uses images from a camera mounted on a mobile robot and calculates a straight path by distinguishing floor and wall surfaces through deep-learning-based instance segmentation. At a branch in the path, the recognized floor area expanded in the direction of the branch, and this expansion was used to determine that the robot could turn there. In addition, when the robot traveled along a path with a wall ahead, the floor surface disappeared from the recognition results and the target path could not be generated. We therefore recognized the wall surface using point-cloud processing and generated the target path from it. With the proposed system, a mobile robot can autonomously patrol a simple route, such as a corridor, once the patrol path is specified as going straight or turning left or right. Autonomous running experiments were conducted with a mobile robot in a corridor that included a junction to verify the effectiveness of the proposed system. The method enables autonomous indoor route patrolling without specialized knowledge such as SLAM, and it also makes it possible to change routes.
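As a rough illustration of how a floor segmentation mask could be turned into a path cue, the sketch below computes a floor centerline, a normalized lateral offset, and a simple left/right branch guess. It is not the authors' implementation: the function names, the boolean-mask input, and the `ratio` threshold are all assumptions made for this example, and a real system would take the mask from the instance segmentation network.

```python
import numpy as np


def floor_centerline(mask):
    """Return an (N, 2) array of (row, center_column) for rows containing floor."""
    mask = np.asarray(mask, dtype=bool)
    cols = np.arange(mask.shape[1])
    counts = mask.sum(axis=1)                 # floor pixels per image row
    rows = np.flatnonzero(counts)             # rows with at least one floor pixel
    col_sums = (mask * cols).sum(axis=1)      # sum of floor column indices per row
    centers = col_sums[rows] / counts[rows]   # mean floor column per row
    return np.column_stack([rows, centers])


def lateral_offset(mask):
    """Mean centerline offset from the image center, normalized to [-1, 1]."""
    line = floor_centerline(mask)
    if len(line) == 0:
        # Mirrors the failure case in the abstract: no floor, no path.
        raise ValueError("no floor pixels in mask")
    half_width = (np.asarray(mask).shape[1] - 1) / 2.0
    return float((line[:, 1].mean() - half_width) / half_width)


def branch_side(mask, ratio=1.5):
    """Guess a branch direction from floor area (hypothetical heuristic).

    Returns "left" or "right" when one image half holds more than `ratio`
    times the floor area of the other half, otherwise None.
    """
    mask = np.asarray(mask, dtype=bool)
    mid = mask.shape[1] // 2
    left = int(mask[:, :mid].sum())
    right = int(mask[:, mid:].sum())
    if left > ratio * right:
        return "left"
    if right > ratio * left:
        return "right"
    return None
```

A positive offset means the floor lies to the right of the image center, so a controller could steer right to re-center. Comparing the two image halves is only one way to detect that the floor region has widened toward a branch; the paper's actual criterion may differ.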

Robot recognizes floors/walls to navigate
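The abstract says only that the wall is recognized through point-cloud processing. One common way to find a planar surface in a depth-camera point cloud is RANSAC plane fitting, sketched below under that assumption. The function name, the iteration count, and the distance threshold are illustrative values chosen for this example, not parameters reported by the authors.

```python
import numpy as np


def fit_plane_ransac(points, n_iters=200, threshold=0.02, seed=0):
    """Fit a plane n.p + d = 0 to an (N, 3) point cloud with RANSAC.

    Returns (unit_normal, d, inlier_mask). `threshold` is the maximum
    point-to-plane distance (same units as the points) for an inlier.
    """
    points = np.asarray(points, dtype=float)
    if len(points) < 3:
        raise ValueError("need at least three points")
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    best_model = None
    for _ in range(n_iters):
        # Hypothesize a plane from three randomly chosen points.
        p0, p1, p2 = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-12:
            continue  # degenerate (collinear) sample, try again
        normal = normal / norm
        d = -float(normal @ p0)
        # Score the hypothesis by counting points close to the plane.
        inliers = np.abs(points @ normal + d) < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers, best_model = inliers, (normal, d)
    if best_model is None:
        raise ValueError("no valid plane found")
    return best_model[0], best_model[1], best_inliers
```

With the camera's z axis pointing forward, a wall directly ahead would give a normal close to (0, 0, ±1), and |d| would approximate the distance to the wall. A target path could then be placed relative to that plane when no floor is visible.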

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.