Paper:

Autonomous Navigation of Mobile Robot Based on Visual Information and GPS—Path Planning by Semantic Segmentation with the A* Algorithm and Obstacle Avoidance by Kernel Density Estimation—

Shinichiro Suga*, Haruki Ishii*, Tomokazu Takahashi*, Masato Suzuki*, Kazuyo Tsuzuki**, Yasushi Mae*, and Seiji Aoyagi*,†

*Department of Mechanical Engineering, Faculty of Engineering Science, Kansai University

3-3-35 Yamate-cho, Suita, Osaka 564-8680, Japan

†Corresponding author

**Department of Architecture, Faculty of Environmental and Urban Engineering, Kansai University

3-3-35 Yamate-cho, Suita, Osaka 564-8680, Japan

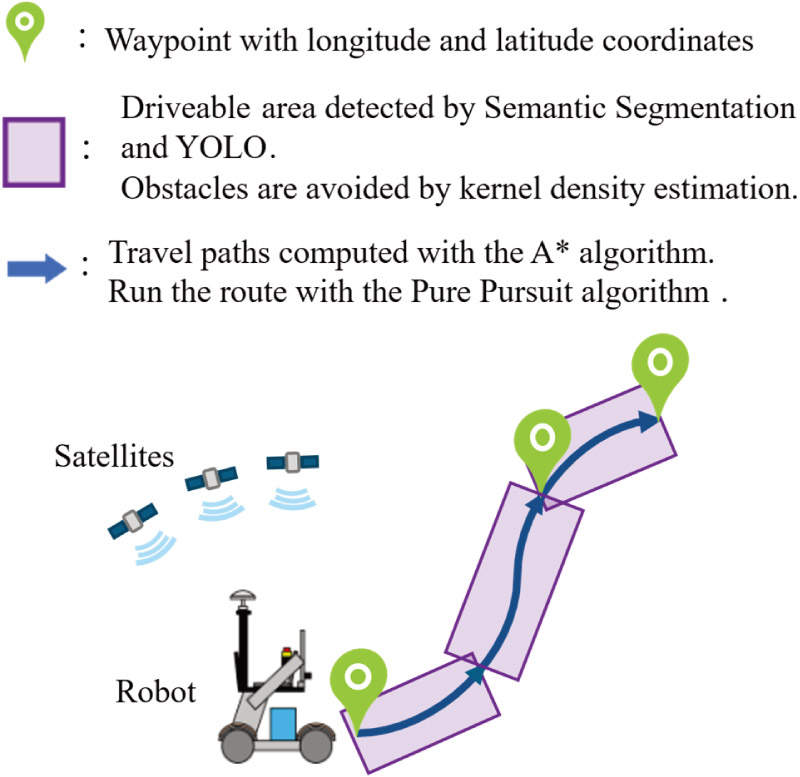

The mainstream approach, which employs light detection and ranging (LiDAR), estimates the self-position of a mobile robot by matching the point cloud acquired during navigation against one recorded in advance, so that the robot can navigate autonomously to the goal point. However, this method is vulnerable to environmental changes, and constructing and updating the point cloud map requires considerable effort and expense. In this paper, we therefore propose an autonomous navigation method that is robust against environmental changes and does not require visiting the site in advance to construct a point cloud map. The proposed method performs autonomous navigation using RTK-GNSS, the deep-learning algorithms of semantic segmentation and YOLO, the A* algorithm for path planning, and the pure pursuit algorithm for path tracking. Furthermore, obstacle avoidance is carried out using semantic segmentation, YOLO, and kernel density estimation. We conducted a navigation experiment in which the robot autonomously navigated a 300 m section, thereby verifying the validity of the proposed method.
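As an illustration of the path-planning step only, the following is a minimal sketch of A* search on a small grid. It is not the implementation used in this paper: the boolean grid (where `True` stands for a cell that a segmentation step might label as traversable), the 4-connected unit-cost moves, the Manhattan heuristic, and the function name `astar` are all assumptions made for the example.

```python
import heapq


def astar(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None.

    grid[r][c] is True where the cell is traversable (hypothetically, a cell
    labeled as road by a segmentation step) and False elsewhere.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for 4-connected, unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {}
    g_cost = {start: 0}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was found already
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc]:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None  # goal is unreachable


# Toy map: the middle row is blocked except for the rightmost cell.
demo_grid = [
    [True, True, True],
    [False, False, True],
    [True, True, True],
]
demo_path = astar(demo_grid, (0, 0), (2, 0))
```

In this toy map the only route from the top-left to the bottom-left cell passes through the single open cell in the middle row, so the search must detour around the blocked cells. In a real system, the cell cost would typically also reflect terrain class or distance to obstacles rather than a uniform step cost.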

Mobile robot navigation using vision and GPS

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.