Paper:

Automatic Driving Experiment of a Personal Vehicle Using Detection of Steady-State Visual Evoked Potentials by Measurement Objects in Mixed Reality

Nobutomo Matsunaga*, Tao Jogamine**, and Chihiro Mori**


*Faculty of Advanced Science and Technology, Kumamoto University

2-39-1 Kurokami, Chuo-ku, Kumamoto 860-8555, Japan

**Graduate School of Science and Technology, Kumamoto University

2-39-1 Kurokami, Chuo-ku, Kumamoto 860-8555, Japan

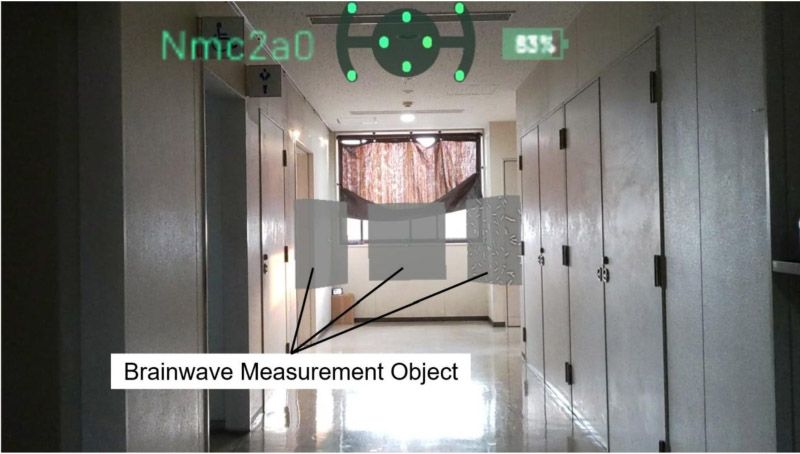

With the aging of the population, personal vehicles (PVs) have become widely used and are important mobility devices for the elderly. For elderly users, however, driving a PV involves risks such as collisions. Driving assistance and training systems utilizing mixed reality (MR) have therefore been developed. However, because these systems are operated manually, safe operation cannot be guaranteed, particularly for users who are not skilled at operating them. In this study, an autonomous driving system for a PV using a brain-computer interface is proposed. Measurement objects that generate flicker stimuli are projected onto the MR device, and the resulting steady-state visual evoked potentials (SSVEPs) in the visual cortex are detected. Because the user's intended travel direction for the PV is estimated, the automatic driving system realizes safe operation without stopping. The effectiveness of the proposed system is demonstrated through experiments, and the driving workload is evaluated using NASA-TLX.

The travel direction of the PV is estimated using an SSVEP device
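The abstract does not state how the steady-state visual evoked potentials are classified. As an illustration only, the sketch below shows one simple and widely used approach: comparing the spectral power of a single EEG channel at each candidate flicker frequency and its second harmonic. The flicker frequencies, the direction labels, the sampling rate, and the `min_ratio` threshold are all hypothetical values chosen for the example and are not taken from the paper.

```python
import numpy as np

# Hypothetical mapping from flicker frequency (Hz) to travel direction.
# These values are assumptions for illustration, not the paper's settings.
FLICKER_TO_DIRECTION = {8.0: "left", 10.0: "straight", 12.0: "right"}


def band_power(signal, fs, freq, half_width=0.3):
    """Mean spectral power within +/- half_width Hz of freq."""
    windowed = signal * np.hanning(len(signal))  # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    mask = np.abs(freqs - freq) <= half_width
    return float(spectrum[mask].mean())


def estimate_direction(signal, fs, min_ratio=2.0):
    """Return the direction whose flicker frequency dominates, or None.

    The score of each candidate is its power at the fundamental plus the
    second harmonic. A decision is made only when the best score exceeds
    the runner-up by a factor of min_ratio (an assumed threshold).
    """
    scores = {
        f: band_power(signal, fs, f) + band_power(signal, fs, 2.0 * f)
        for f in FLICKER_TO_DIRECTION
    }
    ranked = sorted(scores, key=scores.get, reverse=True)
    best, runner_up = scores[ranked[0]], scores[ranked[1]]
    if best <= 0.0 or best < min_ratio * runner_up:
        return None  # no clear SSVEP response; make no decision
    return FLICKER_TO_DIRECTION[ranked[0]]


if __name__ == "__main__":
    fs = 250.0                      # assumed sampling rate in Hz
    t = np.arange(1000) / fs        # 4 s analysis window
    rng = np.random.default_rng(0)
    # Synthetic "EEG": a 10 Hz response buried in white noise.
    eeg = np.sin(2 * np.pi * 10.0 * t) + rng.normal(0.0, 1.0, t.size)
    print(estimate_direction(eeg, fs))
```

Returning `None` when no candidate clearly dominates is a design choice of this sketch: it lets a downstream controller keep its current course instead of acting on an ambiguous reading.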


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
