Paper:

Real-Time Multi-Viewpoint Object Detection for High-Speed Omnidirectional Scanning Shooting System

Hongyu Dong*, Shaopeng Hu**, Feiyue Wang*, Kohei Shimasaki*, and Idaku Ishii*

*Smart Robotics Lab., Graduate School of Advanced Science and Engineering, Hiroshima University

1-4-1 Kagamiyama, Higashi-hiroshima, Hiroshima 739-8527, Japan

**Digital Monozukuri (Manufacturing) Education and Research Center, Hiroshima University

1-4-1 Kagamiyama, Higashi-hiroshima, Hiroshima 739-8527, Japan

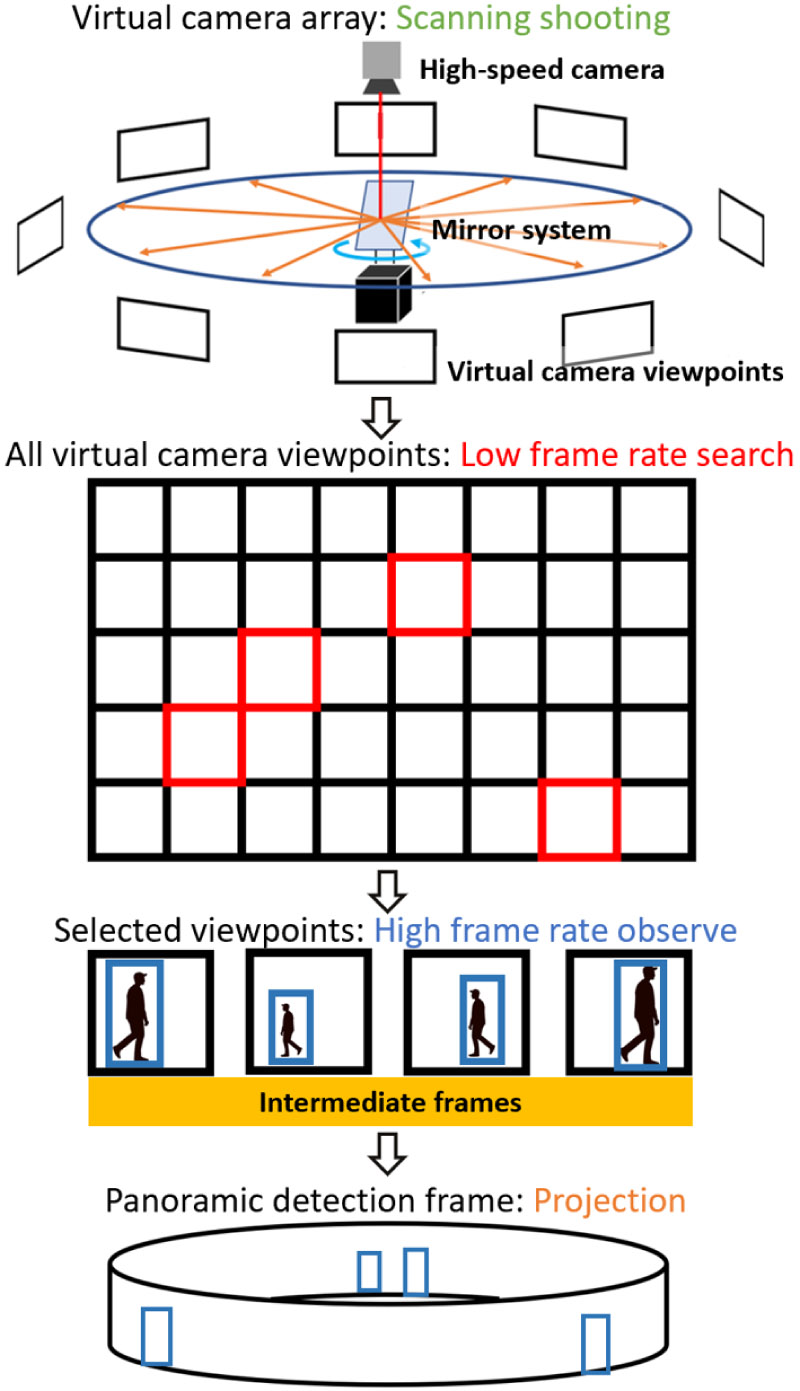

Obtaining object-detection results from multi-viewpoint systems is a promising way for mobile robots to gather information about their surroundings. In this study, we realized low-delay, real-time multi-viewpoint object detection for an omnidirectional scanning shooting system by proposing a dual-stage detection approach with intermediate frames. This approach synchronously searches for valid viewpoints containing the desired objects and performs real-time object detection with low update delay on the selected viewpoints using intermediate frames. We generated panoramic detection frames as the real-time system output by projecting the individual viewpoint detection results. In an outdoor experimental scenario with 10 fps omnidirectional scanning shooting accommodating up to 40 viewpoints, our approach generated 20 fps real-time panoramic detection frames that presented the movement of the observed targets.

Dual-stage multi-viewpoint detection
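The two ideas named in the abstract, selecting valid viewpoints and projecting per-viewpoint detections into a panoramic frame, can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes, purely for illustration, that viewpoints tile the panorama as a non-overlapping grid of equally sized images; the names `Box`, `select_valid_viewpoints`, and `project_to_panorama` are hypothetical, and a real system would likely rely on calibrated viewing angles and handle overlap between viewpoints.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Box:
    """Axis-aligned detection box in pixels (hypothetical structure)."""
    x: float      # left edge
    y: float      # top edge
    w: float      # width
    h: float      # height
    label: str
    score: float


def select_valid_viewpoints(detections: Dict[int, List[Box]]) -> List[int]:
    """Return the indices of viewpoints that contain at least one detection.

    Illustrative stand-in for the viewpoint-search stage: a viewpoint is
    treated as "valid" when the detector reported any box for it.
    """
    return sorted(idx for idx, boxes in detections.items() if boxes)


def project_to_panorama(
    view_index: int,
    boxes: List[Box],
    view_width: int,
    view_height: int,
    views_per_row: int,
) -> List[Box]:
    """Shift viewpoint-local boxes into panoramic pixel coordinates.

    Assumption: viewpoints are laid out row by row as a non-overlapping
    grid, so projection reduces to adding the tile offset.
    """
    row, col = divmod(view_index, views_per_row)
    dx, dy = col * view_width, row * view_height
    return [Box(b.x + dx, b.y + dy, b.w, b.h, b.label, b.score) for b in boxes]


if __name__ == "__main__":
    per_view = {0: [], 5: [Box(10, 20, 30, 40, "person", 0.9)]}
    for idx in select_valid_viewpoints(per_view):
        print(idx, project_to_panorama(idx, per_view[idx], 100, 80, 4))
```

Under the grid assumption, only the viewpoints returned by `select_valid_viewpoints` need to be passed to the detector again, and their boxes are merged into one panoramic frame by offsetting coordinates.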

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.