Paper:

6D Object Tracking Using Multi-Camera Fast-PWP3D

Yuta Mizuno, Zejing Zhao, Daigo Fujiwara, Satoshi Suzuki, and Akio Namiki

Chiba University

1-33 Yayoi-cho, Inage-ku, Chiba, Chiba 263-8522, Japan

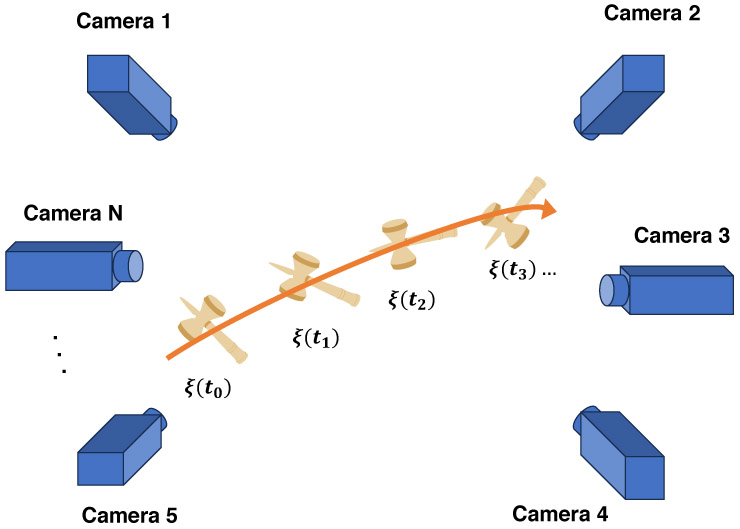

In robotics, real-time 6D tracking, that is, tracking the three-dimensional position and orientation of a moving object in real time, is a significant challenge. In this study, we extend fast pixel-wise posterior 3D (Fast-PWP3D), a method that estimates position and posture simultaneously, to multiple cameras. The extended method segments the target region in multiple camera images using a 3D model of the target, and its energy function is modified to handle multi-viewpoint posture estimation. As a result, both the estimation accuracy and the robustness against occlusion were improved.

Position and orientation of a moving object estimated using multiple cameras
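The abstract states only that the energy function was modified for multi-viewpoint estimation. As a rough illustration, the sketch below starts from the single-view pixel-wise posterior energy used by PWP3D-style trackers, E = -Σ_x log( He(Φ(x)) P_f(x) + (1 - He(Φ(x))) P_b(x) ), where Φ is the signed distance to the projected model contour and P_f, P_b are per-pixel foreground and background posteriors. It then assumes the multi-camera energy is simply the sum of the per-camera energies. That summation, the arctan-shaped smoothed Heaviside He, the sign convention for Φ, the smoothing parameter `b`, and all function names (`smoothed_heaviside`, `camera_energy`, `multi_camera_energy`) are assumptions for illustration, not the authors' formulation.

```python
import numpy as np


def smoothed_heaviside(phi, b=1.0):
    """Smoothed step on a signed distance map.

    Assumed convention: phi < 0 inside the projected silhouette, so the
    result tends to 1 inside and to 0 outside. b (hypothetical default)
    controls how sharply the step falls off around the contour.
    """
    return -np.arctan(b * phi) / np.pi + 0.5


def camera_energy(phi, p_f, p_b, b=1.0, eps=1e-12):
    """Negative log of the pixel-wise posterior for a single camera view.

    phi      : signed distance to the projected model contour in this view
    p_f, p_b : per-pixel foreground / background posteriors (e.g. from
               colour histograms), same shape as phi
    """
    he = smoothed_heaviside(phi, b)
    # Each pixel contributes -log(He * Pf + (1 - He) * Pb); eps avoids log(0).
    return -np.sum(np.log(he * p_f + (1.0 - he) * p_b + eps))


def multi_camera_energy(views, b=1.0):
    """Hypothetical multi-view energy: unweighted sum over camera views.

    views is an iterable of (phi, p_f, p_b) tuples, one per camera, where
    each phi is rendered from the same 6D pose hypothesis.
    """
    return sum(camera_energy(phi, p_f, p_b, b) for phi, p_f, p_b in views)


# Toy check with two identical 1x4 "images": pixels 0-1 look like the target.
p_f = np.array([[0.9, 0.9, 0.1, 0.1]])
p_b = 1.0 - p_f
aligned = np.array([[-5.0, -5.0, 5.0, 5.0]])  # silhouette over pixels 0-1
misaligned = -aligned                          # silhouette over pixels 2-3
e_good = multi_camera_energy([(aligned, p_f, p_b)] * 2)
e_bad = multi_camera_energy([(misaligned, p_f, p_b)] * 2)
print(e_good < e_bad)  # True: the aligned pose has lower energy
```

Because every view renders the same pose hypothesis, minimizing the summed energy lets a camera with a clear view of the object constrain the pose even when another camera sees it partly occluded. How the paper actually weights or combines the views is not stated in this section, so the unweighted sum should be read as the simplest plausible choice.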

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.