Development Report:

Harmful Animal Detection Using Visual Information for Wire-Type Mobile Robots

Takahiro Doi, Atsuki Mizuta, and Kouhei Nagumo

Kanazawa Institute of Technology

7-1 Ohgigaoka, Nonoichi, Ishikawa 921-8501, Japan

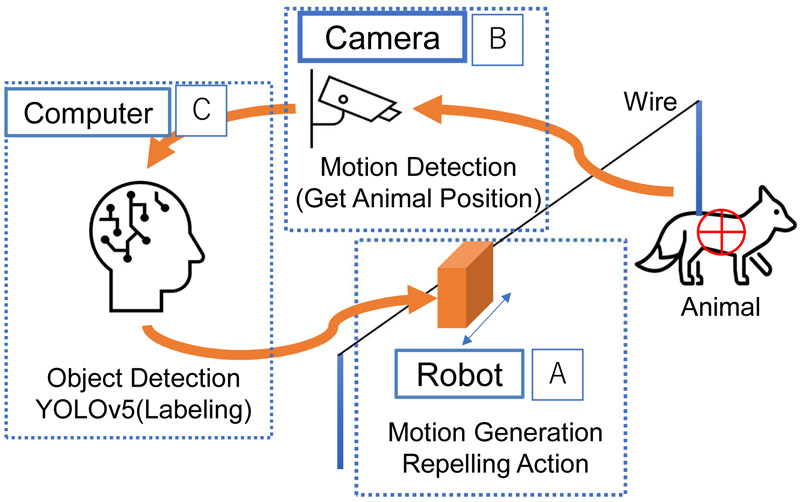

Damage caused by harmful animals to crops and to people’s homes has been increasing in recent years and has become a problem. As of FY2022, damage to crops caused by wild birds and beasts amounted to 15,563 million yen. To reduce such damage, electric fences are often installed between forested areas, which are the habitat of harmful animals, and fields or people’s homes. Electric fences are effective when properly installed and maintained. However, they have disadvantages: electricity can leak if grass or trees come into contact with the wires, they cannot be used during the winter season, and they are difficult to operate on uneven terrain. In addition, installing them involves significant labor and cost, and given the recent shortage of agricultural workers in Japan, it is difficult to maintain the status quo. As a countermeasure, the authors developed a simple, easy-to-use robot system that moves along overhead wires, uses visual information, and can be installed easily in mountain forests. This study describes the development of software for detecting harmful animals using visual information. The developed software detects harmful animals with higher accuracy by combining a motion detection algorithm with the object recognition model YOLOv5.
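The abstract does not state how the motion detection result and the YOLOv5 output are combined. As one illustration only, the sketch below assumes a simple fusion rule. A detection is kept only if it is sufficiently confident and its bounding box covers enough pixels flagged as moving by frame differencing. The frame format, the box tuple layout, and the thresholds (`threshold`, `min_conf`, `min_motion`) are hypothetical. The detector itself is stubbed out as a list of boxes, and the sketch is not the authors' method.

```python
from typing import List, Tuple

# Hypothetical formats: a grayscale frame as rows of 0-255 intensities,
# and a detection as (x1, y1, x2, y2, label, confidence) with half-open pixel ranges.
Frame = List[List[int]]
Mask = List[List[bool]]
Box = Tuple[int, int, int, int, str, float]


def motion_mask(prev: Frame, curr: Frame, threshold: int = 25) -> Mask:
    """Frame differencing: a pixel is 'moving' if its intensity changed by more than threshold."""
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def moving_fraction(mask: Mask, box: Box) -> float:
    """Fraction of pixels inside the box (clipped to the image) that are flagged as moving."""
    x1, y1, x2, y2 = box[:4]
    h = len(mask)
    w = len(mask[0]) if h else 0
    total = moving = 0
    for y in range(max(0, y1), min(h, y2)):
        for x in range(max(0, x1), min(w, x2)):
            total += 1
            if mask[y][x]:
                moving += 1
    return moving / total if total else 0.0


def fuse(detections: List[Box], mask: Mask,
         min_conf: float = 0.5, min_motion: float = 0.1) -> List[Box]:
    """Keep detections that are confident AND overlap enough moving pixels (assumed rule)."""
    return [
        d for d in detections
        if d[5] >= min_conf and moving_fraction(mask, d) >= min_motion
    ]


if __name__ == "__main__":
    # Synthetic 8x8 scene: a 3x3 bright patch appears between two frames.
    prev = [[0] * 8 for _ in range(8)]
    curr = [row[:] for row in prev]
    for y in range(2, 5):
        for x in range(2, 5):
            curr[y][x] = 200
    mask = motion_mask(prev, curr)
    dets = [(2, 2, 5, 5, "boar", 0.8), (5, 5, 8, 8, "boar", 0.9), (2, 2, 5, 5, "deer", 0.3)]
    print(fuse(dets, mask))  # prints [(2, 2, 5, 5, 'boar', 0.8)]
```

The second detection is dropped because it covers no moving pixels, which is the kind of static false positive that motion gating is meant to suppress. The third is dropped because its confidence is below `min_conf`.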

A robot system for harmful animals

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.