Paper:

Utilizing High-Speed 3D Vision for a Commercial Robotic Arm: Direct Integration and the Dynamic Compensation Approach

Shouren Huang*,†, Wenhe Wang*,**, Leo Miyashita*, Kenichi Murakami***, Yuji Yamakawa**, and Masatoshi Ishikawa*

*Research Institute for Science and Technology, Tokyo University of Science

6-3-1 Niijuku, Katsushika-ku, Tokyo 125-8585, Japan

†Corresponding author

**Interfaculty Initiative in Information Studies, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

***Institute of Industrial Science, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

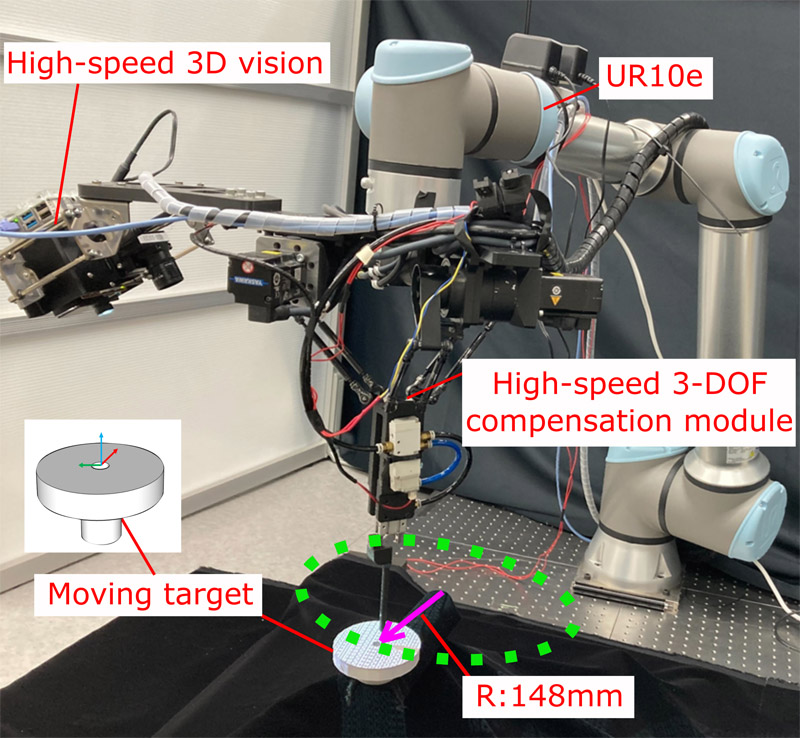

Robot vision (2D/3D) is one of the most important components of robotic arm applications, especially for accurate and intelligent manipulation in unstructured working environments. In this study, we focused on the potential of high-speed 3D vision, with frame rates exceeding 500 fps, for commercial robotic arm applications. Using a UR10e robotic arm and a newly developed high-speed 3D vision system capable of 3D sensing at 1,000 fps, we investigated how the feedback rate affects tracking performance for a dynamically moving target. The high-speed 3D vision was integrated with the robotic arm under two approaches: direct integration and dynamic compensation. Comparative studies demonstrated that higher feedback rates improve tracking accuracy up to a saturation feedback rate, and that the dynamic compensation approach reduces tracking error by 70% compared with direct integration.

Overview of the developed system
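
The abstract's qualitative claim, that a higher feedback rate improves tracking accuracy only up to a saturation point, can be illustrated with a toy simulation. The sketch below is not the authors' system or controller: it assumes a hypothetical 1-D velocity-controlled follower with a proportional gain, a sinusoidal target, and a target measurement that refreshes only once per vision frame. The function name and all parameter values (`gain`, `amplitude_m`, `target_hz`, the list of rates) are illustrative assumptions, and the dynamic compensation approach is not modeled.

```python
import math


def rms_tracking_error(feedback_hz, gain=50.0, sim_hz=10_000, duration_s=5.0,
                       amplitude_m=0.05, target_hz=1.0):
    """RMS error of a toy 1-D tracker whose target measurement
    is refreshed only once per vision frame (zero-order hold)."""
    dt = 1.0 / sim_hz
    # Vision frame period in simulation steps (the rate is rounded to the grid).
    steps_per_frame = max(1, round(sim_hz / feedback_hz))
    n_steps = int(duration_s * sim_hz)
    settle_steps = sim_hz  # ignore the first second (start-up transient)

    robot = 0.0      # follower position [m]
    measured = 0.0   # last target position delivered by the vision system [m]
    sq_sum = 0.0
    count = 0
    for k in range(n_steps):
        t = k * dt
        target = amplitude_m * math.sin(2.0 * math.pi * target_hz * t)
        if k >= settle_steps:
            sq_sum += (target - robot) ** 2
            count += 1
        if k % steps_per_frame == 0:
            measured = target  # a new frame arrives
        # Proportional velocity command, integrated with explicit Euler.
        robot += gain * (measured - robot) * dt
    return math.sqrt(sq_sum / count)


# Hypothetical feedback rates, chosen only for illustration.
rates_hz = [30, 125, 500, 1000]
errors_m = [rms_tracking_error(r) for r in rates_hz]
for r, e in zip(rates_hz, errors_m):
    print(f"{r:5d} Hz feedback -> RMS error {e * 1000:.2f} mm")
```

In this toy model the error keeps shrinking as the feedback rate rises, but the improvement flattens once the frame period becomes small compared with the follower's own response time (1/`gain`), which then dominates the remaining lag.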

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.