Paper:

Multi-End Effector Selection by Depth-Aware Convolution

Yukiyasu Domae

National Institute of Advanced Industrial Science and Technology

2-3-26 Aomi, Koto-ku, Tokyo 135-0064, Japan

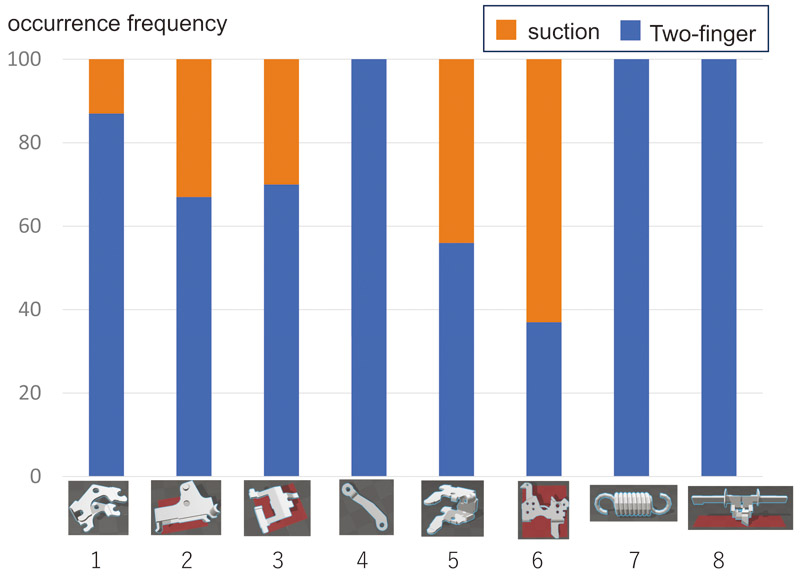

In this paper, we propose a depth-image-based method for selecting end-effectors for a robot that performs picking tasks with multiple end-effectors. The method evaluates the graspability of each end-effector in a scene by convolving a hand model, represented as a two-dimensional binary structure, with the depth image of the target scene. A key feature is that it requires no pre-training and no object or environment models; it uses only simple models of the end-effectors. In picking experiments with eight types of electronic components commonly used in factory automation, the proposed method switched effectively between a suction gripper and a two-finger gripper. Among the compared approaches, which included other training-free end-effector selection methods and approaches using a single end-effector, the proposed method achieved a grasp success rate more than 14% higher than that of the second-best method.
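
The idea of scoring each end-effector by convolving a binary hand model with the depth image can be illustrated with a small sketch. This is not the author's implementation. It assumes a height map (larger values mean higher surfaces, e.g. floor depth minus measured depth), a single hypothetical threshold level, tiny hypothetical templates for a two-finger gripper and a suction pad, and only 0° and 90° template orientations. The names `binarize`, `best_score`, `select_effector`, `GRIPPER`, `SUCTION`, and `EFFECTORS` are illustrative, and the score used here (occupied fraction of the contact region, set to zero when the collision region is occupied) is a simplified stand-in for the paper's graspability measure.

```python
"""Hypothetical sketch: pick an end-effector by correlating binary hand
templates with a thresholded height map (pure Python, no NumPy)."""
from typing import Dict, List, Set, Tuple

Grid = List[List[int]]


def binarize(height: List[List[float]], level: float) -> Grid:
    # 1 where the surface reaches `level` (assumed height map: larger = higher).
    return [[1 if h >= level else 0 for h in row] for row in height]


def transpose(t: Grid) -> Grid:
    # For the symmetric templates used here, a 90-degree rotation equals a transpose.
    return [list(col) for col in zip(*t)]


def overlap(mask: Grid, kernel: Grid, y: int, x: int) -> int:
    # Occupied pixels under the kernel's 1s when the kernel is centred at (y, x);
    # pixels outside the image count as empty (zero padding).
    kh, kw = len(kernel), len(kernel[0])
    s = 0
    for i in range(kh):
        for j in range(kw):
            yy, xx = y + i - kh // 2, x + j - kw // 2
            if kernel[i][j] and 0 <= yy < len(mask) and 0 <= xx < len(mask[0]):
                s += mask[yy][xx]
    return s


def best_score(mask: Grid, contact: Grid, collision: Grid) -> float:
    # Per-pixel score: occupied fraction of the contact region, or 0 if any
    # collision pixel is occupied. Returns the best score over the image.
    area = sum(map(sum, contact))
    best = 0.0
    for y in range(len(mask)):
        for x in range(len(mask[0])):
            if overlap(mask, collision, y, x) == 0:
                best = max(best, overlap(mask, contact, y, x) / area)
    return best


def select_effector(
    height: List[List[float]],
    levels: List[float],
    effectors: Dict[str, Tuple[Grid, Grid]],
) -> Tuple[str, Dict[str, float]]:
    # Score every effector at every level and at 0/90 degrees; pick the best one.
    scores: Dict[str, float] = {}
    for name, (contact, collision) in effectors.items():
        poses = [(contact, collision), (transpose(contact), transpose(collision))]
        scores[name] = max(
            best_score(binarize(height, lv), c, k) for lv in levels for c, k in poses
        )
    return max(scores, key=scores.get), scores


# Hypothetical hand models as (contact template, collision template).
GRIPPER = (
    [[0, 0, 1, 0, 0] for _ in range(3)],  # part must fill the gap between the fingers
    [[1, 0, 0, 0, 1] for _ in range(3)],  # finger footprints must land on free space
)
SUCTION = (
    [[1, 1, 1] for _ in range(3)],  # pad must be fully supported by the part
    [[0]],                          # no collision region modelled for the pad
)
EFFECTORS = {"gripper": GRIPPER, "suction": SUCTION}


def scene(occupied: Set[Tuple[int, int]]) -> List[List[float]]:
    # 7x7 height map: 2.0 on the listed pixels, 0.0 (bin floor) elsewhere.
    return [[2.0 if (y, x) in occupied else 0.0 for x in range(7)] for y in range(7)]


bar = scene({(y, 3) for y in range(1, 6)})                       # thin, 1-pixel-wide part
box = scene({(y, x) for y in range(1, 6) for x in range(1, 6)})  # wide, flat part

for label, h in (("bar", bar), ("box", box)):
    print(label, select_effector(h, [1.0], EFFECTORS))
```

In this toy setup the thin bar cannot support the full suction pad but fits between the fingers, so the gripper wins; the wide box leaves no free space for the finger footprints, so suction wins. A fuller version would sweep several depth levels, rotate templates in finer steps, and smooth the score map before taking the maximum.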

A diagram showing the selection results for two types of end-effectors

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.