Paper:

Recognizing and Picking Up Deformable Linear Objects Based on Graph

Xiaohang Shi*

and Yuji Yamakawa**

*Graduate School of Engineering, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

**Interfaculty Initiative in Information Studies, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

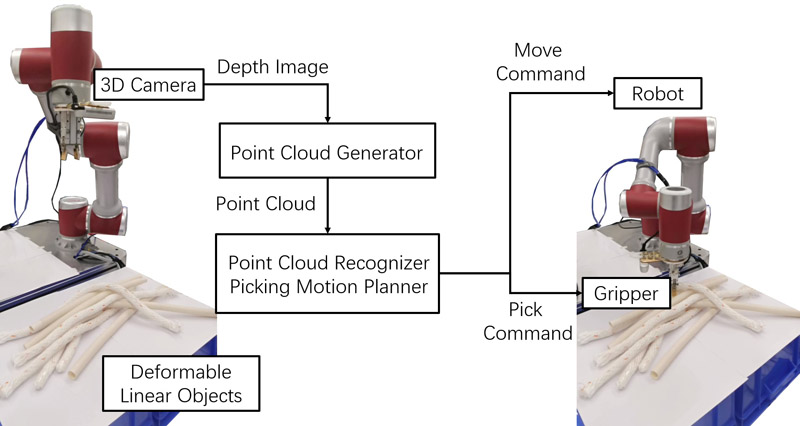

Manipulating deformable linear objects (DLOs) presents significant challenges, particularly in industrial applications. Most previous research, however, has focused on manipulating a single DLO, leaving the problem of picking up stacked DLOs without collisions largely unexplored, even though this task is common in industrial production processes such as sorting pipes or cables. This paper introduces a novel strategy for this problem that uses a simple setup with only a depth sensor. The proposed approach first acquires a point cloud of the scene and then segments the points into clusters using the supervoxel method. A graph is constructed from these clusters and pruned using graph-theoretic methods to find the DLO axes. Axes belonging to the same DLO are then connected, and the occlusion relationships between DLOs are identified. Finally, the best target, the optimal picking point, and the motion planning strategy are determined. Experimental results show that the method is highly adaptable to complex scenarios and can handle DLOs of varying diameters.

Workflow of the proposed method
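The abstract says only that a graph is built over the supervoxel clusters and pruned "by graph theory" to find DLO axes; the exact construction and pruning rules are not given here. As a minimal sketch under stated assumptions, the Python below reduces each cluster to a 3D centroid, connects centroids closer than a hypothetical `radius`, computes a minimum spanning forest with Kruskal's algorithm, and "prunes" each tree by keeping only its longest path (the tree diameter, found with two farthest-node sweeps) while discarding components shorter than a hypothetical `min_length`. All function and parameter names are illustrative, not the authors' implementation.

```python
import math
from collections import defaultdict


def build_graph(centroids, radius):
    """Hypothetical adjacency rule: link every pair of centroids within `radius`."""
    edges = []
    for i in range(len(centroids)):
        for j in range(i + 1, len(centroids)):
            d = math.dist(centroids[i], centroids[j])
            if d <= radius:
                edges.append((d, i, j))
    return edges


def minimum_spanning_forest(n, edges):
    """Kruskal's algorithm with union-find; returns weighted adjacency lists."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    adj = defaultdict(list)
    for d, i, j in sorted(edges):
        ri, rj = find(i), find(j)
        if ri != rj:  # edge joins two trees, so it cannot form a cycle
            parent[ri] = rj
            adj[i].append((j, d))
            adj[j].append((i, d))
    return adj


def farthest(adj, start):
    """Walk the tree from `start`; return the farthest node, its distance, and parent links."""
    dist, prev, stack = {start: 0.0}, {start: None}, [start]
    while stack:
        u = stack.pop()
        for v, w in adj[u]:
            if v not in dist:  # in a tree, the first visit follows the unique path
                dist[v] = dist[u] + w
                prev[v] = u
                stack.append(v)
    far = max(dist, key=dist.get)
    return far, dist[far], prev


def extract_axes(centroids, radius, min_length):
    """Return one axis (list of centroid indices) per tree: its longest path."""
    adj = minimum_spanning_forest(len(centroids), build_graph(centroids, radius))
    seen, axes = set(), []
    for s in range(len(centroids)):
        if s in seen:
            continue
        a, _, component = farthest(adj, s)  # first sweep: one end of the diameter
        seen.update(component)              # mark the whole component as handled
        b, length, prev = farthest(adj, a)  # second sweep: the other end
        if length < min_length:             # drop fragments too short to be a DLO
            continue
        path, node = [], b
        while node is not None:             # backtrack from b to a
            path.append(node)
            node = prev[node]
        axes.append(path)
    return axes
```

For example, two separated rows of centroids plus one isolated point yield two axes, and the isolated point is discarded because its length is below `min_length`. Keeping only the tree diameter is one simple way to strip side branches caused by noisy clusters; a real system would also need the later steps named in the abstract (joining axes of the same DLO across occlusions and resolving which DLO lies on top).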

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.