Paper:

Motion Planning for Dynamic Three-Dimensional Manipulation for Unknown Flexible Linear Object

Kenta Tabata*, Renato Miyagusuku*, and Hiroaki Seki**

*Graduate School of Engineering, Utsunomiya University

7-1-2 Yoto, Utsunomiya, Tochigi 321-8585, Japan

**Graduate School of Natural Science and Technology, Kanazawa University

Kakuma-machi, Kanazawa, Ishikawa 920-1192, Japan

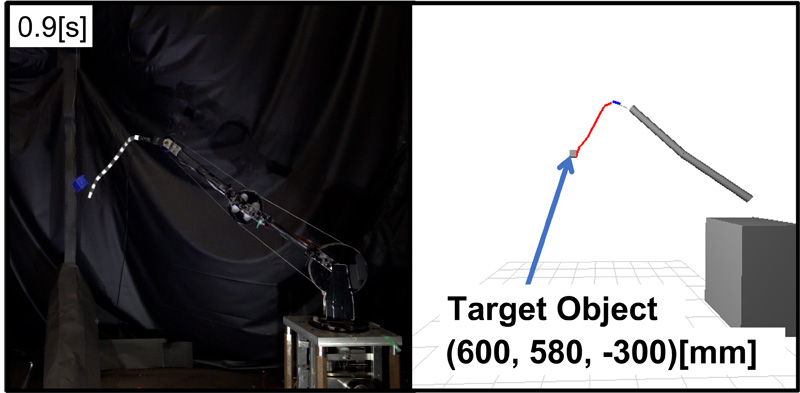

Deformable objects generally undergo large, nonlinear deformations, which makes their motion difficult to recognize and estimate. Many studies have aimed at manipulating deformable objects at will, but they have focused on situations in which the rope's properties are already known from prior experiments. In our previous work, we proposed a motion planning algorithm for manipulating unknown ropes with a robot arm. The approach consists of three steps: motion generation, manipulation, and parameter estimation. By repeating these three steps, a parameterized flexible linear object model that expresses the actual rope movements was estimated, and the manipulation was realized. However, that work was limited to manipulation in 2D space. In this paper, we extend the previously proposed method to casting manipulation in 3D space, in which the tip of the flexible linear object is aimed at a desired target object. Dynamic 3D manipulation of an unknown flexible linear object has not been reported previously. We show that the proposed method can be used for 3D manipulation.
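The repeated cycle of motion generation, manipulation, and parameter estimation can be illustrated with a minimal toy sketch. It is not the authors' rope model or planner: the scalar model `simulate_tip`, the single parameter `stiffness`, the damped update with `gain`, and all numeric values are hypothetical choices made only to show the structure of the loop.

```python
def simulate_tip(amplitude, stiffness):
    """Hypothetical scalar stand-in for a rope model: tip reach for a swing amplitude."""
    return amplitude / (1.0 + stiffness * amplitude)


def generate_motion(target, stiffness_est):
    """Motion generation: invert the current model to find the amplitude
    predicted to reach the target (valid while stiffness_est * target < 1)."""
    return target / (1.0 - stiffness_est * target)


def estimate_stiffness(amplitude, observed_tip):
    """Parameter estimation: the stiffness that explains one observed trial."""
    return 1.0 / observed_tip - 1.0 / amplitude


def run_loop(target, true_stiffness, stiffness_est=0.0, gain=0.5,
             tol=1e-3, max_trials=20):
    """Repeat the three steps until the tip error falls below tol."""
    errors = []
    for _ in range(max_trials):
        # Step 1: plan a motion with the current model estimate.
        amplitude = generate_motion(target, stiffness_est)
        # Step 2: execute it; here the "real" rope is the same toy model
        # evaluated with the hidden true parameter.
        observed = simulate_tip(amplitude, true_stiffness)
        errors.append(abs(observed - target))
        if errors[-1] < tol:
            break
        # Step 3: move the estimate part of the way toward the value implied
        # by this trial (the damping is an assumption of this sketch).
        implied = estimate_stiffness(amplitude, observed)
        stiffness_est += gain * (implied - stiffness_est)
    return stiffness_est, errors


if __name__ == "__main__":
    est, errors = run_loop(target=0.5, true_stiffness=0.8)
    print(f"trials: {len(errors)}, estimate: {est:.4f}, final error: {errors[-1]:.5f}")
```

In this noise-free toy the tip error shrinks on every trial because each estimation step halves the gap between the estimated and the hidden parameter. A real rope would need a much richer model and noisy observations.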

Casting manipulation with the unknown DLO

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.