Paper:

Automatic Calibration of Environmentally Installed 3D-LiDAR Group Used for Localization of Construction Vehicles

Masahiro Inagawa*, Keiichi Yoshizawa**, Tomohito Kawabe*, and Toshinobu Takei*,†


*Seikei University

3-3-1 Kichijoji Kitamachi, Musashino-city, Tokyo 180-8633, Japan

†Corresponding author

**Hirosaki University

3 Bunkyo-cho, Hirosaki-city, Aomori 036-8561, Japan

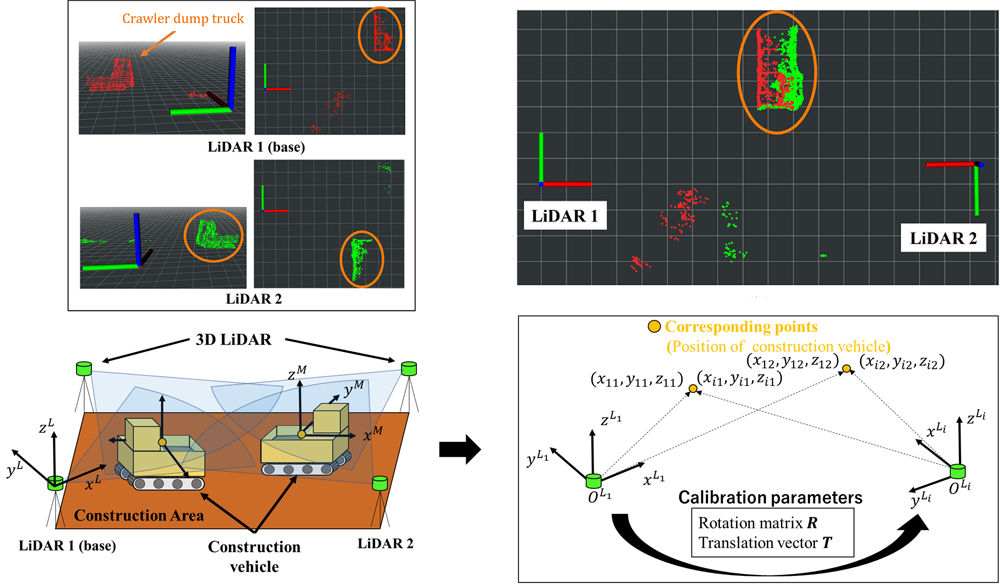

Methods are being developed to accurately localize construction vehicles in various environments using multiple 3D-LiDARs installed in the work environment. In this approach, the installed positions and orientations of the LiDARs must be calibrated as accurately as possible to achieve high-accuracy localization. Currently, calibration is performed manually, so its accuracy varies with the operator. Furthermore, manual calibration becomes more time-consuming as the number of installed LiDARs increases. Conventional automatic calibration methods require dedicated land markers because stable features are difficult to acquire at civil engineering sites, where the environment is altered by the work itself. This paper proposes an automatic calibration method that calibrates the positions and orientations of 3D-LiDARs installed in the field by using multiple construction vehicles on the site as land markers. To validate the proposed method, we conducted calibration experiments on a group of 3D-LiDARs installed on uneven ground using actual construction vehicles, and verified the calibration accuracy using a newly proposed accuracy evaluation formula. The results showed that the proposed method can perform sufficiently accurate calibration at civil engineering sites, where features are difficult to acquire, without dedicated land markers, which increase costs.

Calibration of LiDARs installed in the field
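The geometric idea of calibrating one LiDAR against another from shared land markers can be illustrated with a minimal sketch. It assumes, beyond what the abstract states, that each construction vehicle has already been detected and reduced to a single 3D point in both LiDAR frames, with known correspondences. The function name `estimate_rigid_transform`, the sample coordinates, and the SVD-based (Kabsch) least-squares fit are illustrative choices and are not taken from the paper.

```python
import numpy as np


def estimate_rigid_transform(src, dst):
    """Least-squares rotation R and translation t such that dst ~ R @ src + t.

    src, dst: (N, 3) arrays of corresponding points, N >= 3 and not collinear.
    Hypothetical helper using the SVD-based Kabsch solution.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant of -1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


# Assumed pose of LiDAR B in the frame of reference LiDAR A:
# 30 deg yaw, 5 deg pitch (uneven ground), plus an offset in metres.
yaw, pitch = np.deg2rad(30.0), np.deg2rad(5.0)
Rz = np.array([[np.cos(yaw), -np.sin(yaw), 0.0],
               [np.sin(yaw),  np.cos(yaw), 0.0],
               [0.0,          0.0,         1.0]])
Ry = np.array([[np.cos(pitch),  0.0, np.sin(pitch)],
               [0.0,            1.0, 0.0],
               [-np.sin(pitch), 0.0, np.cos(pitch)]])
R_true = Rz @ Ry
t_true = np.array([12.0, -4.0, 0.8])

# Made-up vehicle positions observed in LiDAR A's frame.
vehicles_a = np.array([[5.0, 2.0, 0.3],
                       [9.0, -6.0, 0.9],
                       [15.0, 3.0, 1.4],
                       [20.0, -1.0, 0.1]])
# The same vehicles expressed in LiDAR B's frame: p_b = R_true^T (p_a - t_true).
vehicles_b = (vehicles_a - t_true) @ R_true

# Recover B's pose from the corresponding vehicle positions.
R_est, t_est = estimate_rigid_transform(vehicles_b, vehicles_a)
print("rotation error:", np.abs(R_est - R_true).max())
print("translation error:", np.abs(t_est - t_true).max())
```

With noise-free correspondences, the estimated pose matches the assumed one to numerical precision. Real measurements would be noisy and would typically need more vehicle positions and possibly a refinement step.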


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
