Development Report:

Experimental Study of Seamless Switch Between GNSS- and LiDAR-Based Self-Localization

Tadahiro Hasegawa, Haruki Miyoshi, and Shin’ichi Yuta

Shibaura Institute of Technology

3-7-5 Toyosu, Kohto-ku, Tokyo 135-8548, Japan

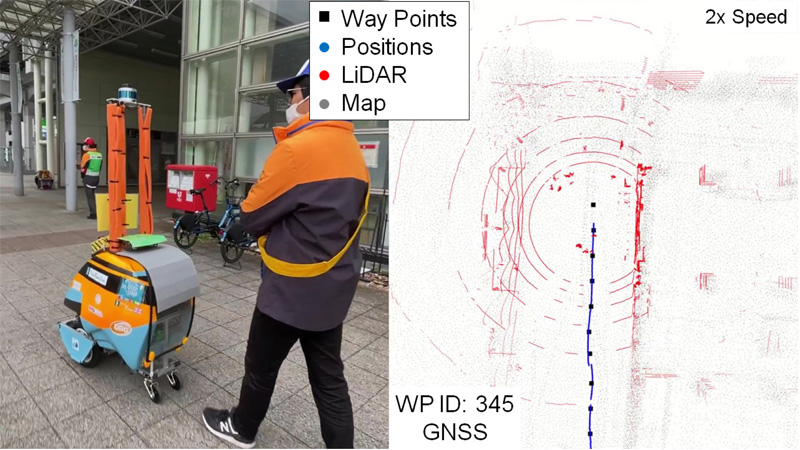

A self-localization method that seamlessly switches between the positions and attitudes estimated by normal distributions transform (NDT) scan matching and those estimated by a real-time kinematic global navigation satellite system (RTK-GNSS) has been successfully developed. One issue in this method is that the different estimation methods must share a common global coordinate system. Therefore, the three-dimensional environmental maps used for NDT scan matching are created in the planar Cartesian coordinate system used by the GNSS, so that the maps accurately represent the location, shape, and size of the actual terrain and geographic features. Consequently, by switching seamlessly between the two methods, a mobile robot can stably obtain accurate estimates of its position and attitude. An autonomous driving experiment using this self-localization method was conducted in the Tsukuba Challenge 2022, and the mobile robot completed a designated course of more than 2 km in an urban area.
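The abstract does not spell out how GNSS fixes are brought into the map's planar frame. As an illustration only, the sketch below assumes that this frame is Japan's plane rectangular coordinate system. That system is a Gauss-Krüger (transverse Mercator) projection on the GRS80 ellipsoid with a scale factor of 0.9999 on the central meridian, with x pointing north and y pointing east. The sketch also assumes the Tsukuba site lies in zone IX, whose origin is taken here as 36°N, 139°50'E. Verify the zone and origin against the Geospatial Information Authority of Japan's published tables before relying on them. The sketch converts a latitude/longitude fix into (x, y) metres using a three-term Krüger series. The names `to_plane`, `_krueger_xy`, and `ORIGIN_IX` are hypothetical.

```python
import math

# GRS80 ellipsoid parameters (assumed; JGD2011 is defined on GRS80)
SEMI_MAJOR_AXIS = 6378137.0          # a [m]
INVERSE_FLATTENING = 298.257222101   # 1/f
SCALE_FACTOR = 0.9999                # m0 on the central meridian (assumed)

# Hypothetical origin of plane rectangular zone IX (lat, lon in degrees); verify before use
ORIGIN_IX = (36.0, 139.0 + 50.0 / 60.0)


def _krueger_xy(lat_rad: float, dlon_rad: float) -> tuple[float, float]:
    """Transverse Mercator (Gauss-Krueger) via a 3-term Krueger series.

    Returns (x, y) in metres, x measured from the equator (northing) and
    y from the central meridian (easting), already scaled by SCALE_FACTOR.
    """
    n = 1.0 / (2.0 * INVERSE_FLATTENING - 1.0)   # third flattening
    e = 2.0 * math.sqrt(n) / (1.0 + n)           # first eccentricity
    # tan of the conformal latitude, obtained from the isometric latitude
    sin_lat = math.sin(lat_rad)
    t = math.sinh(math.atanh(sin_lat) - e * math.atanh(e * sin_lat))
    xi_p = math.atan2(t, math.cos(dlon_rad))
    eta_p = math.atanh(math.sin(dlon_rad) / math.sqrt(1.0 + t * t))
    # Krueger series coefficients truncated at third order in n
    alphas = (
        n / 2 - 2 * n**2 / 3 + 5 * n**3 / 16,
        13 * n**2 / 48 - 3 * n**3 / 5,
        61 * n**3 / 240,
    )
    xi, eta = xi_p, eta_p
    for j, alpha in enumerate(alphas, start=1):
        xi += alpha * math.sin(2 * j * xi_p) * math.cosh(2 * j * eta_p)
        eta += alpha * math.cos(2 * j * xi_p) * math.sinh(2 * j * eta_p)
    # Rectifying radius A = a / (1 + n) * (1 + n^2/4 + n^4/64)
    rect_radius = SEMI_MAJOR_AXIS / (1.0 + n) * (1.0 + n**2 / 4 + n**4 / 64)
    return SCALE_FACTOR * rect_radius * xi, SCALE_FACTOR * rect_radius * eta


def to_plane(lat_deg: float, lon_deg: float,
             origin_lat_deg: float, origin_lon_deg: float) -> tuple[float, float]:
    """Convert latitude/longitude [deg] to plane coordinates (x north, y east) [m]."""
    dlon = math.radians(lon_deg - origin_lon_deg)
    x, y = _krueger_xy(math.radians(lat_deg), dlon)
    # Subtract the scaled meridian arc length from the equator to the origin latitude
    x0, _ = _krueger_xy(math.radians(origin_lat_deg), 0.0)
    return x - x0, y


if __name__ == "__main__":
    # A point 0.01 deg east of the assumed zone IX origin
    x, y = to_plane(36.0, ORIGIN_IX[1] + 0.01, *ORIGIN_IX)
    print(f"x = {x:.3f} m, y = {y:.3f} m")
```

If the map and the RTK-GNSS fixes are both expressed in such a frame, switching from a GNSS-derived position to an NDT-derived one would need no additional transformation between them. This is the property the abstract relies on. The sketch covers only the forward conversion of position. Handling attitude would also require the meridian convergence, because grid north and true north differ away from the central meridian.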

Autonomous driving around a station

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.