Paper:

A Human-Centered and Adaptive Robotic System Using Deep Learning and Adaptive Predictive Controllers

Sari Toyoguchi*, Enrique Coronado**, and Gentiane Venture***

*Department of Mechanical Systems Engineering, Tokyo University of Agriculture and Technology

2-24-16 Nakamachi, Koganei, Tokyo 184-8588, Japan

**Industrial Cyber-Physical Systems Research Center, National Institute of Advanced Industrial Science and Technology (AIST)

2-4-7 Aomi, Koto-ku, Tokyo 135-0064, Japan

***Graduate School of Engineering, The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8654, Japan

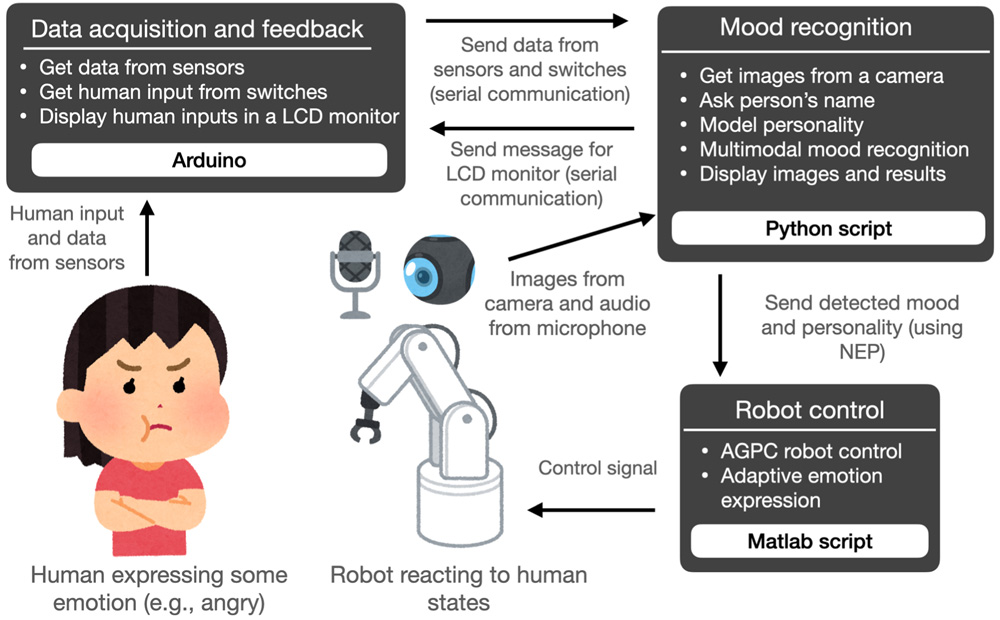

The rise of single-person households, coupled with a drop in social interaction due to the coronavirus disease 2019 (COVID-19) pandemic, is triggering a loneliness pandemic. This social issue is causing mental health conditions (e.g., depression and stress) not only in the elderly population but also in young adults. In this context, social robots are emerging as a human-centered robotics technology that can potentially reduce the mental distress caused by social isolation. However, current robotic systems still do not communicate well enough to coexist effectively with humans. This paper contributes to the ongoing efforts to produce more seamless human-robot interaction. To this end, we present a novel cognitive architecture that uses (i) deep learning methods for mood recognition from visual and voice modalities, (ii) personality and mood models for adaptation of robot behaviors, and (iii) adaptive generalized predictive controllers (AGPC) to produce suitable robot reactions. Experimental results indicate that the proposed system influenced people’s moods, potentially reducing stress levels during human-robot interaction.

Software architecture of the system


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
