Paper:

Investigation of Obstacle Prediction Network for Improving Home-Care Robot Navigation Performance

Mohamad Yani*,**, Azhar Aulia Saputra**, Wei Hong Chin**, and Naoyuki Kubota**

*Department of Computer Engineering, Faculty of Electronics and Intelligent Industry Technology, Institut Teknologi Telkom Surabaya

Surabaya, Indonesia

**Department of Mechanical System Engineering, Faculty of System Design, Tokyo Metropolitan University

6-6 Asahigaoka, Hino, Tokyo 191-0065, Japan

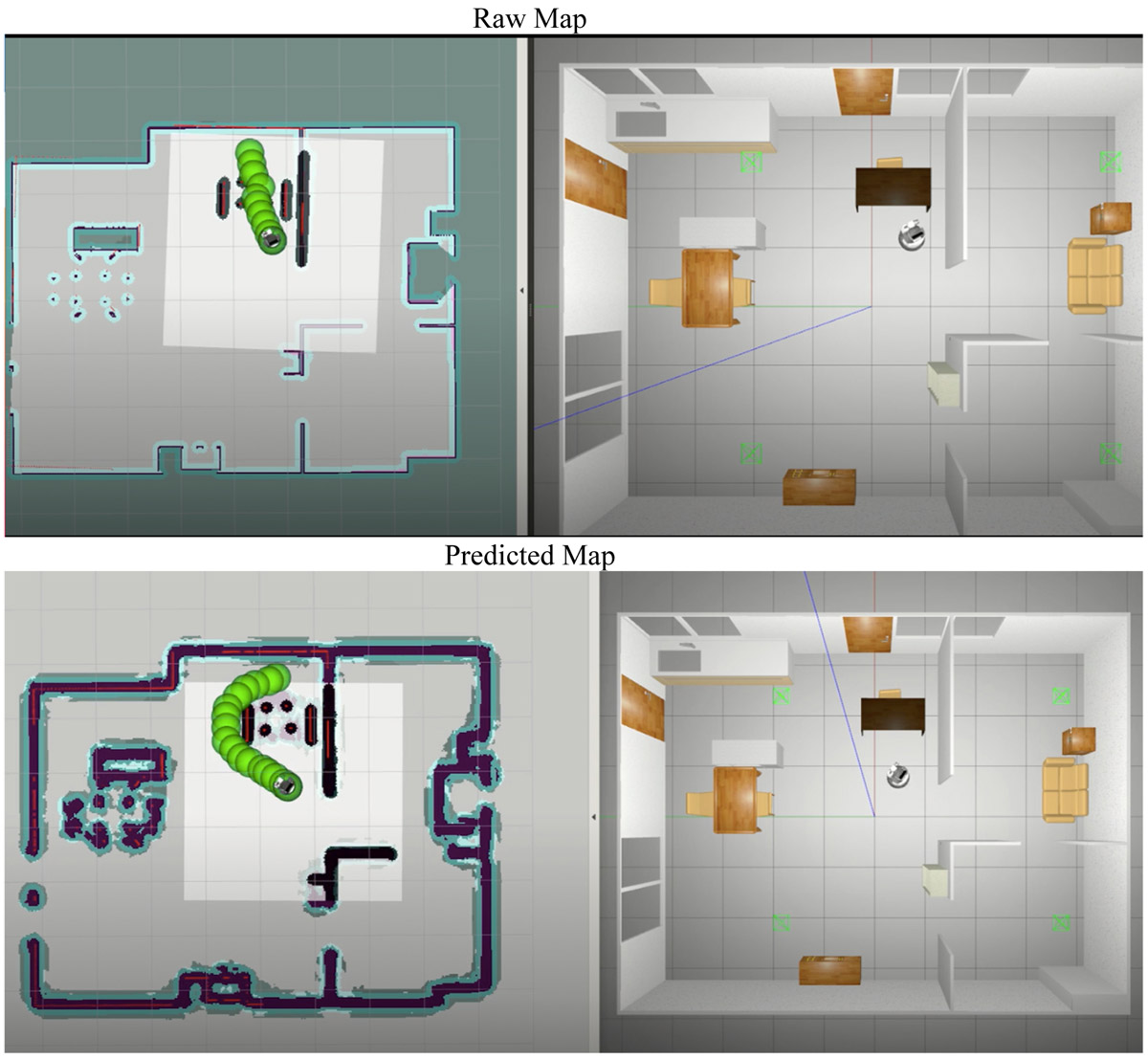

A home-care manipulation robot must explore its environment and navigate safely to reach grasping targets while ensuring human safety in the home. Indoor home environments contain complex obstacles, such as chairs, tables, and sports equipment, that are difficult to detect for robots relying on 2D laser rangefinders. Conventional approaches address this problem by using 3D LiDAR, RGB-D cameras, or sensor fusion. Convolutional neural networks have shown promising results in handling such unseen obstacles: by predicting them from 2D grid maps, they enable collision avoidance with 2D laser rangefinders alone. This paper therefore investigates whether grid maps predicted by an obstacle prediction network can improve indoor navigation performance using only 2D LiDAR measurements. We evaluated combinations of various local planners, static obstacle types, and map types (raw or predicted). Our investigation demonstrated that, with the predicted grid map, all local planners achieved better collision-free paths using only 2D laser rangefinders than with an RGB-D camera and 2D laser rangefinders on the raw map. These results suggest that the predicted map is potentially helpful for future work on learning-based local navigation systems.
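The central idea here is that a 2D laser scan records only the parts of an obstacle that cross its scan plane, while a predicted map can restore the obstacle's full footprint. A toy grid makes this concrete. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation. The table geometry, the hard-coded predicted grid (a stand-in for the obstacle prediction network's output), the element-wise maximum used to fuse the two maps, and the 0.5 occupancy threshold are all hypothetical choices made for this example.

```python
from typing import List, Tuple

# Occupancy values in [0, 1]; a cell at or above the threshold is treated as blocked.
Grid = List[List[float]]
FREE = 0.0
OCCUPIED = 1.0
OCC_THRESHOLD = 0.5  # hypothetical threshold chosen for this example


def make_raw_map(size: int, legs: List[Tuple[int, int]]) -> Grid:
    """Raw 2D map: a laser scanning at leg height only registers the table legs."""
    grid = [[FREE] * size for _ in range(size)]
    for row, col in legs:
        grid[row][col] = OCCUPIED
    return grid


def make_predicted_map(size: int, footprint: Tuple[int, int, int, int], prob: float) -> Grid:
    """Hypothetical stand-in for a network output: the full table footprint
    (rows r0..r1, cols c0..c1, inclusive) marked with obstacle probability `prob`."""
    r0, c0, r1, c1 = footprint
    grid = [[FREE] * size for _ in range(size)]
    for row in range(r0, r1 + 1):
        for col in range(c0, c1 + 1):
            grid[row][col] = prob
    return grid


def fuse(raw: Grid, predicted: Grid) -> Grid:
    """Assumed fusion rule, element-wise maximum: a cell occupied in either map stays occupied."""
    return [[max(a, b) for a, b in zip(raw_row, pred_row)]
            for raw_row, pred_row in zip(raw, predicted)]


def row_path_is_free(grid: Grid, row: int, threshold: float = OCC_THRESHOLD) -> bool:
    """Check a straight path along one grid row for blocked cells."""
    return all(cell < threshold for cell in grid[row])


SIZE = 10
LEGS = [(3, 3), (3, 6), (6, 3), (6, 6)]  # four corner legs of a table
FOOTPRINT = (3, 3, 6, 6)                 # table top spans rows and cols 3..6

raw = make_raw_map(SIZE, LEGS)
predicted = make_predicted_map(SIZE, FOOTPRINT, prob=0.8)
fused = fuse(raw, predicted)

print(row_path_is_free(raw, 4))    # True: the path passes between the legs
print(row_path_is_free(fused, 4))  # False: the predicted footprint blocks it
```

On the raw map, only the four legs are occupied, so a straight path under the table top looks free to a planner. After fusion, the predicted footprint blocks that path. Taking the maximum is one conservative way to merge the maps, since any cell that either source marks as occupied remains occupied.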

2D LiDAR for unseen obstacles


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.