Paper:

Structured Light Field by Two Projectors Placed in Parallel for High-Speed and Precise 3D Feedback

Hiromu Kijima and Hiromasa Oku

Gunma University

1-5-1 Tenjin-cho, Kiryu, Gunma 376-8515, Japan

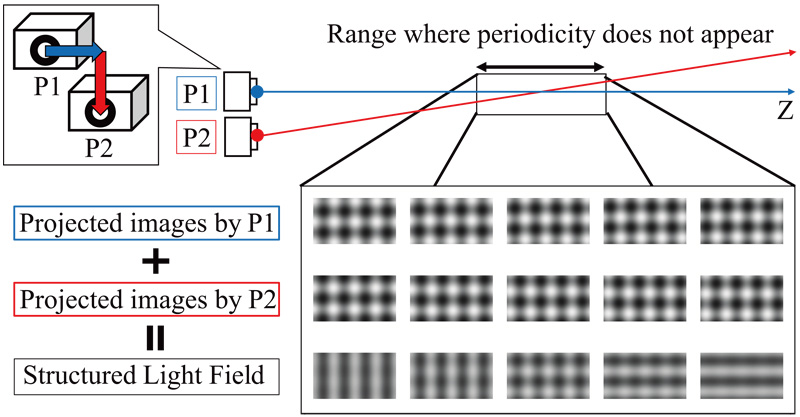

In recent years, high-speed acquisition of three-dimensional information has been required in various fields. Our group previously proposed a structured light field (SLF) method for high-speed three-dimensional measurement within 1 ms. However, the SLF method has the drawback of a large depth estimation error, on the order of several tens of millimeters. In this paper, we propose a novel method that generates an SLF with two projectors placed in parallel. This arrangement produces a larger change in the projected pattern with depth, which enables more precise estimation. Depth estimation experiments for precision evaluation and a dynamic projection mapping experiment demonstrated precise depth estimation with an error of several millimeters and high-speed estimation within 1 ms, although the measurement range was limited to approximately 100 mm.

Proposed method for generating an SLF with two projectors placed in parallel
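The abstract states that placing the two projectors in parallel yields a larger depth-dependent pattern change. One way to see why is a deliberately simplified model, which is an assumption here and not necessarily the authors' formulation: treat both projectors as identical pinhole devices with parallel optical axes, focal length f (in pixels), and a lateral baseline b. A pixel u1 of the first projector and a pixel u2 of the second land on the same point of a fronto-parallel surface at depth z when u1 - u2 = fb/z. The pairing of the two patterns therefore shifts with depth, and it moves by fb/z² pixels per unit of depth. The function names and parameter values below are hypothetical.

```python
"""Idealized sketch (assumption, not the paper's model): two identical pinhole
projectors with parallel optical axes separated by a lateral baseline."""


def relative_shift_px(depth_mm: float, baseline_mm: float, focal_px: float) -> float:
    """Pixel offset u1 - u2 between the two projectors' pixels that land on the
    same point of a fronto-parallel surface at depth_mm (u1 - u2 = f * b / z)."""
    if depth_mm <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_mm / depth_mm


def shift_sensitivity_px_per_mm(depth_mm: float, baseline_mm: float, focal_px: float) -> float:
    """Magnitude of d(shift)/d(depth) = f * b / z**2, i.e. how many pixels the
    pairing of the two patterns moves per millimeter of depth change."""
    if depth_mm <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_mm / depth_mm ** 2


if __name__ == "__main__":
    # Hypothetical parameters, chosen only for illustration.
    focal_px, depth_mm = 1000.0, 500.0
    for baseline_mm in (10.0, 50.0, 100.0):
        shift = relative_shift_px(depth_mm, baseline_mm, focal_px)
        sens = shift_sensitivity_px_per_mm(depth_mm, baseline_mm, focal_px)
        print(f"b = {baseline_mm:5.1f} mm: shift = {shift:6.1f} px, "
              f"sensitivity = {sens:.3f} px/mm")
```

In this toy model the sensitivity grows linearly with the baseline b and falls off as 1/z²; it does not model defocus, pattern design, or the limited measurement range reported in the abstract.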

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.