Paper:

Tunnel Lining Surface Monitoring System Deployable at Maximum Vehicle Speed of 100 km/h Using View Angle Compensation Based on Self-Localization Using White Line Recognition

Tomohiko Hayakawa*, Yushi Moko*, Kenta Morishita**, Yuka Hiruma*, and Masatoshi Ishikawa*,***

*Information Technology Center, The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

**Central Nippon Expressway Company Limited

2-18-19 Nishiki, Naka-ku, Nagoya-shi, Aichi 460-0003, Japan

***Tokyo University of Science

1-3 Kagurazaka, Shinjuku-ku, Tokyo 162-8601, Japan

Vehicle-mounted inspection systems have been developed to perform daily inspections of tunnel linings. However, because a moving vehicle does not always maintain the same lateral position within its lane, it is difficult for a camera with a limited field of view to continuously scan images at the desired target angle. In this study, we propose a calibration-free optical self-localization method based on white line recognition, which stabilizes the scanning angle and thus enables the entire tunnel lining surface to be scanned. Self-localization with the proposed method has a processing time of 27.3 ms, making it suitable for real-time view angle compensation inside tunnels, where global positioning systems cannot operate. The method is robust against changes in brightness at tunnel entrances and exits and can handle lanes marked with both dotted and solid white lines. Using white line data scanned while the vehicle was running, we confirmed that self-localization can be performed with 97.4% accuracy. When this self-localization was combined with an inspection system capable of motion blur correction, we were able to sequentially scan the tunnel lining surface at a stable camera angle at high speed, and we showed that the acquired images can be stitched together into an expansion image usable for inspection.
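To make the idea of view angle compensation concrete, the following is a minimal geometric sketch, not the authors' algorithm. It assumes (1) the pixel columns of the left and right white lines have already been detected on one image row of a forward-looking camera with no roll on a flat road, (2) the known lane width supplies the pixel-to-metre scale, which is one way to avoid intrinsic calibration, and (3) the camera must stay aimed at a fixed point of the lining in the tunnel cross-section. The names `LaneObservation`, `lateral_offset_m`, `compensated_elevation_deg`, and the 3.5 m default lane width are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class LaneObservation:
    """Hypothetical input: image columns (pixels) of the detected white lines
    on a single image row, plus the column of the camera's principal point."""
    left_px: float
    right_px: float
    image_center_px: float


def lateral_offset_m(obs: LaneObservation, lane_width_m: float = 3.5) -> float:
    """Estimate the vehicle's lateral offset from the lane centre in metres.

    Assumes no camera roll and a flat road, so ground distance along one image
    row is linear in pixels; the (assumed) lane width fixes the scale.
    Positive result = vehicle is to the right of the lane centre.
    """
    width_px = obs.right_px - obs.left_px
    if width_px <= 0:
        raise ValueError("right line must lie to the right of the left line")
    lane_center_px = 0.5 * (obs.left_px + obs.right_px)
    metres_per_px = lane_width_m / width_px
    # If the lane centre appears left of the image centre, the vehicle is right of it.
    return (obs.image_center_px - lane_center_px) * metres_per_px


def compensated_elevation_deg(offset_m: float, nominal_x_m: float,
                              camera_height_m: float,
                              target_x_m: float, target_y_m: float) -> float:
    """Angle (degrees, from the rightward horizontal) that points the camera at a
    fixed lining point (target_x_m, target_y_m) in a hypothetical cross-section
    frame: x lateral (right positive), y vertical from the road surface."""
    cam_x = nominal_x_m + offset_m  # actual lateral camera position
    return math.degrees(math.atan2(target_y_m - camera_height_m, target_x_m - cam_x))
```

For example, with lines detected at columns 200 and 900 and a principal point at column 640, the sketch estimates an offset of about 0.45 m to the right; the difference between the compensated and nominal angles would then be the correction sent to whatever actuator steers the view (assumed here, not specified by this abstract).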

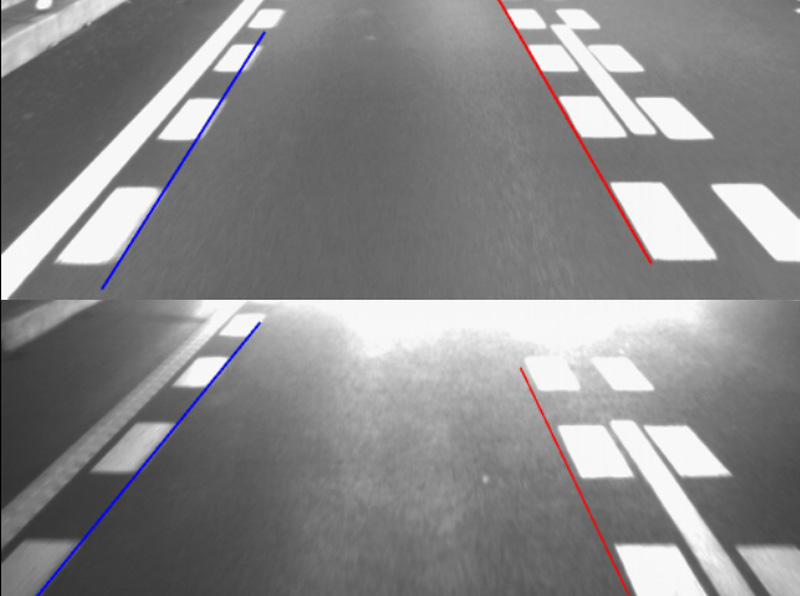

Results of white line recognition in the tunnel

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.