Paper:

Improved 3D Human Motion Capture Using Kinect Skeleton and Depth Sensor

Alireza Bilesan*, Shunsuke Komizunai*, Teppei Tsujita**, and Atsushi Konno*

*Graduate School of Information Science and Technology, Hokkaido University

Kita 14, Nishi 9, Kita-ku, Sapporo, Hokkaido 060-0814, Japan

**Department of Mechanical Engineering, National Defense Academy of Japan

1-10-20 Hashirimizu, Yokosuka, Kanagawa 239-8686, Japan

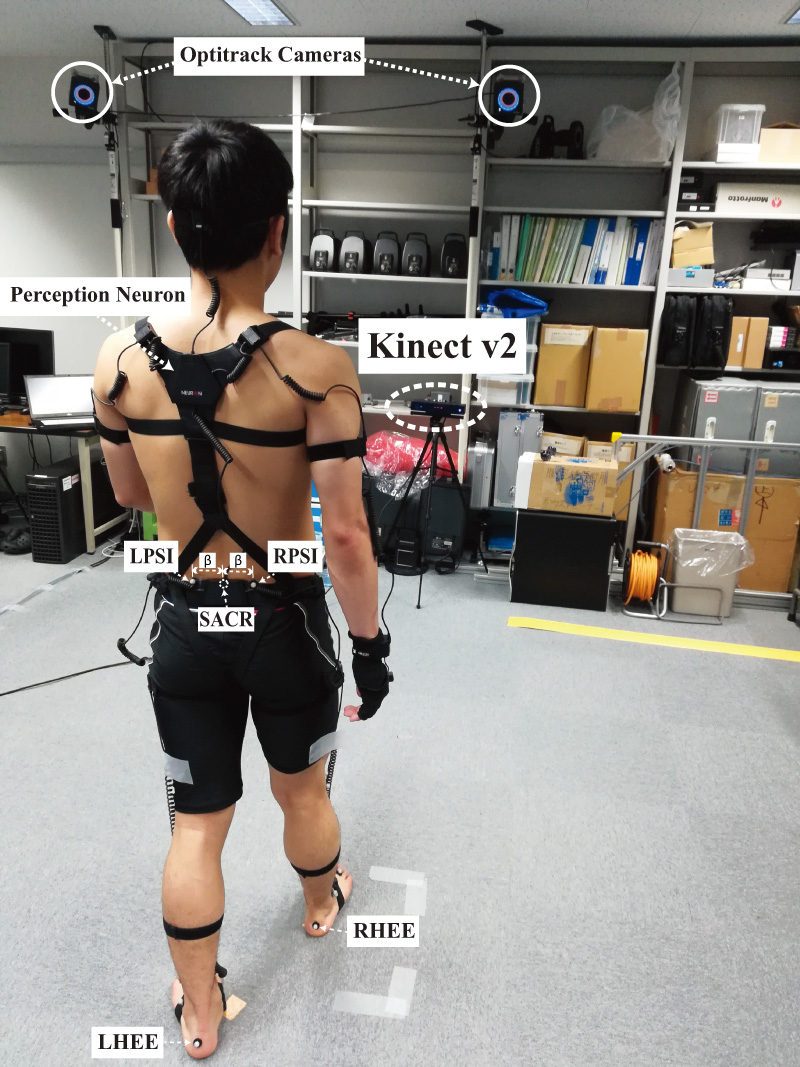

Kinect has been utilized as a cost-effective, easy-to-use motion capture sensor using the Kinect skeleton algorithm. However, the limited number of landmarks and inaccuracies in tracking the landmarks’ positions restrict Kinect’s capability. To increase the accuracy of motion capture using Kinect, the Kinect skeleton algorithm and Kinect-based marker tracking were used jointly to track the 3D coordinates of multiple landmarks on the human body. The kinematic parameters of the motion were calculated from the landmarks’ positions by applying joint constraints and inverse kinematics techniques. The accuracy of the proposed method and of OptiTrack (NaturalPoint, Inc., USA) was evaluated in capturing the joint angles of a humanoid (used as ground truth) in a walking test. To evaluate the accuracy of the proposed method in capturing the kinematic parameters of a human, lower body joint angles of five healthy subjects were extracted using a Kinect, and the results were compared to Perception Neuron (Noitom Ltd., China) and OptiTrack data during ten gait trials. The absolute agreement and consistency between each optical system and the robot data in the robot test, and between each motion capture system and OptiTrack data in the human gait test, were determined using intraclass correlation coefficients (ICC3). The reproducibility between systems was evaluated using Lin’s concordance correlation coefficient (CCC). In the humanoid test, the correlation coefficients with 95% confidence intervals (95%CI) were interpreted as substantial for both OptiTrack and the proposed method (ICC > 0.75 and CCC > 0.95). The results of the human gait experiments demonstrated the advantage of the proposed method (ICC > 0.75 and RMSE = 1.1460°) over the Kinect skeleton model (ICC < 0.4 and RMSE = 6.5843°).
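The agreement measures named above (RMSE, Lin’s CCC, and ICC) can be illustrated with a minimal Python sketch. It is not the authors’ implementation: the abstract specifies only "ICC3", so the single-measure consistency form ICC(3,1) used here is an assumption, and the function names (`rmse`, `lin_ccc`, `icc3_consistency`) and the synthetic joint-angle signal with a 5° offset are hypothetical.

```python
import math
from statistics import fmean


def rmse(reference, measured):
    """Root-mean-square error between two equally long angle series."""
    return math.sqrt(fmean([(m - r) ** 2 for r, m in zip(reference, measured)]))


def lin_ccc(x, y):
    """Lin's concordance correlation coefficient (population moments)."""
    mx, my = fmean(x), fmean(y)
    var_x = fmean([(a - mx) ** 2 for a in x])
    var_y = fmean([(b - my) ** 2 for b in y])
    cov = fmean([(a - mx) * (b - my) for a, b in zip(x, y)])
    # The (mx - my)^2 term penalizes a systematic offset between systems.
    return 2 * cov / (var_x + var_y + (mx - my) ** 2)


def icc3_consistency(rows):
    """ICC(3,1), consistency form (assumed variant; see lead-in).

    rows: n samples, each a sequence of k measurements (one per system).
    """
    n, k = len(rows), len(rows[0])
    grand = fmean([v for row in rows for v in row])
    row_means = [fmean(row) for row in rows]
    col_means = [fmean(col) for col in zip(*rows)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in rows for v in row)
    ss_err = ss_total - ss_rows - ss_cols
    bms = ss_rows / (n - 1)              # between-samples mean square
    ems = ss_err / ((n - 1) * (k - 1))   # residual mean square
    return (bms - ems) / (bms + (k - 1) * ems)


# Hypothetical data: a reference joint angle (degrees) over one gait cycle
# and a measurement of it with a constant 5 degree offset.
t = [i / 100 for i in range(101)]
reference = [30.0 * math.sin(2 * math.pi * ti) for ti in t]
measured = [r + 5.0 for r in reference]

print("RMSE (deg):", rmse(reference, measured))
print("CCC:", lin_ccc(reference, measured))
print("ICC(3,1) consistency:", icc3_consistency(list(zip(reference, measured))))
```

In this synthetic case the constant offset raises the RMSE and lowers the CCC, while the consistency form of the ICC is unaffected because it removes the per-system mean. This suggests why reporting consistency, absolute agreement, and RMSE together gives a fuller picture than any single coefficient.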

A human gait is captured by Kinect, Perception Neuron, and OptiTrack

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
