Paper:

Gap Traversing Motion via a Hexapod Tracked Mobile Robot Based on Gap Width Detection

Taiga Sasaki and Toyomi Fujita

Department of Electrical and Electronic Engineering, Faculty of Engineering, Tohoku Institute of Technology

35-1 Yagiyama Kasumi-cho, Taihaku-ku, Sendai 982-8577, Japan

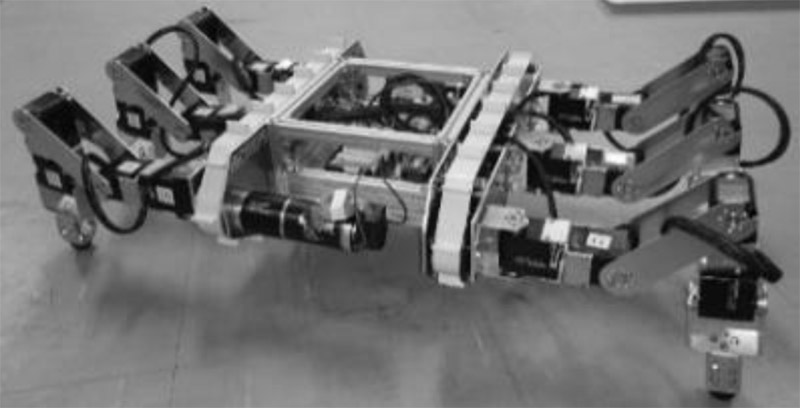

We have developed a hexapod tracked mobile robot: a tracked mobile robot with six legs attached to its body. In a transportation task, this robot can traverse a wide gap by supporting track driving with its four front and rear legs while holding the target object with its two middle legs. To enable the robot to act autonomously, we developed a two-dimensional distance measurement system based on an infrared sensor. The system is simple: the sensor is mounted on a servomotor, so measurement does not require high computing power. The system can also be installed at a lower cost than laser range finders or depth cameras. This paper describes how the gap traversing mode is selected according to the gap width detected by the system. We conducted a gap width detection experiment and an autonomous gap traversing experiment using the hexapod tracked mobile robot equipped with the proposed system. The results confirm the effectiveness of the proposed system and of autonomous traversing that is adapted to the detected gap width.
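The idea of selecting a traversing mode from a servo-swept infrared scan can be illustrated with a minimal sketch. It is not the implementation used in this work: the sensor geometry (a sensor at a known height, tilted downward by the servomotor), the function names, the mode names, and every threshold value below are hypothetical and chosen only for illustration.

```python
import math
from typing import List, Optional, Tuple

# One reading: (servo depression angle in degrees, measured distance in metres).
Reading = Tuple[float, float]


def scan_to_points(scan: List[Reading], sensor_height: float) -> List[Tuple[float, float]]:
    """Convert polar readings into (x, z) points, with z = 0 at ground level."""
    points = []
    for angle_deg, dist in scan:
        a = math.radians(angle_deg)
        x = dist * math.cos(a)                  # horizontal distance ahead
        z = sensor_height - dist * math.sin(a)  # height relative to the ground
        points.append((x, z))
    return sorted(points)                       # order by distance ahead


def detect_gap_width(points: List[Tuple[float, float]],
                     depth_tol: float = 0.03) -> Optional[float]:
    """Return the width of the first gap, None if no gap, inf if no far edge is seen."""
    last_ground_x = None
    near_edge = None
    for x, z in points:
        if z >= -depth_tol:                     # point lies on the ground plane
            if near_edge is not None:
                return x - near_edge            # far edge found
            last_ground_x = x
        elif near_edge is None and last_ground_x is not None:
            near_edge = last_ground_x           # ground ended: near edge of the gap
    return math.inf if near_edge is not None else None


def select_mode(width: Optional[float],
                track_limit: float = 0.15,      # assumed limit for tracks alone
                leg_limit: float = 0.40) -> str:  # assumed limit with leg support
    """Pick a (hypothetical) traversing mode from the detected gap width."""
    if width is None or width <= track_limit:
        return "track_only"
    if width <= leg_limit:
        return "leg_supported"
    return "stop"
```

Because the width is taken between the last ground sample before the gap and the first ground sample after it, this sketch overestimates the true width by up to the sampling interval of the scan, which errs on the side of caution when choosing a mode.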

Hexapod tracked mobile robot

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
