Paper:

Investigation of Preliminary Motions from a Static State and Their Predictability

Chaoshun Xu*, Masahiro Fujiwara**, Yasutoshi Makino**,***, and Hiroyuki Shinoda**

*Graduate School of Information Science and Technology, The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

**Graduate School of Frontier Sciences, The University of Tokyo

5-1-5 Kashiwanoha, Kashiwa-shi, Chiba 277-8561, Japan

***JST PRESTO

7 Gobancho, Chiyoda-ku, Tokyo 102-0076, Japan

In everyday life, humans observe the actions of others and predict their movements slightly ahead of time. Many studies have sought to reproduce this predictive ability computationally using neural networks; however, they implicitly assume that preliminary motions occur before significant movements. In this study, we quantitatively investigate when preliminary motions appear and how long they last in movements that start from a static state, and which kinds of motion can be predicted in principle. We consider this knowledge fundamental for movement prediction in interaction techniques. We examined basic movements such as kicking and jumping, and confirmed the presence of preliminary motions by using them as inputs to a neural network. We found no preliminary motion for a hand-moving task, whereas a left-right jumping task showed the most preliminary motion, appearing up to 0.4 s before the main movement.

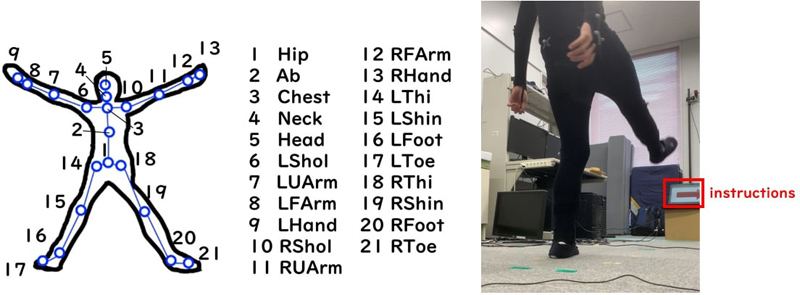

Types of movements measured in the paper

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
