Paper:

Pallet Handling System with an Autonomous Forklift for Outdoor Fields

Ryosuke Iinuma*, Yusuke Kojima*, Hiroyuki Onoyama*, Takanori Fukao*, Shingo Hattori**, and Yasunori Nonogaki**

*Ritsumeikan University

1-1-1 Nojihigashi, Kusatsu-shi, Shiga 525-8577, Japan

**Toyota Industries Corporation

2-1-1 Toyota-chou, Takahama-shi, Aichi 444-1393, Japan

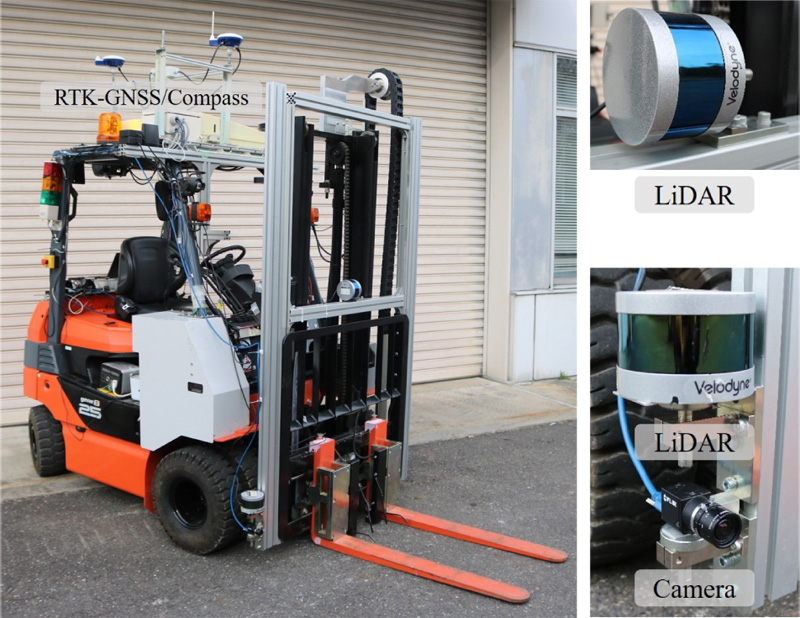

In Japan, the aging and shrinking of the workforce are serious issues, so autonomous agricultural robots are needed to save manpower and labor. In this paper, we describe an autonomous pallet handling system for a forklift that automatically unloads and conveys the pallets used for harvesting vegetables outdoors. Because the forks must be inserted into narrow pallet holes, the pallet posture must be estimated accurately, and the forklift and its forks must be controlled accurately. The system detects the pallet in an image using deep-learning-based object detection. Based on the detection results and measurements from a horizontal 3D light detection and ranging (LiDAR) sensor, the system accurately estimates the distance as well as the horizontal and vertical deviations between the forklift and the pallet in the outdoor field. The forklift is controlled by sliding mode control (SMC), which is robust to disturbances. Furthermore, a vertical LiDAR scans the pallet so that the fork height can be adjusted precisely. The system requires little or no preparation of the environment for the automation process. We confirmed the effectiveness of the system through an experiment in which the forklift unloads a pallet from a vehicle, as in a real agricultural task. The experimental results indicated that the system is suitable for real agricultural environments.
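The abstract states only that the forklift is steered by sliding mode control (SMC) for robustness to disturbances. It does not give the vehicle model or the control law. The following is a minimal sketch of the general technique, not the authors' controller. It assumes a kinematic error model relative to the pallet's approach line: the lateral deviation e_y and heading error e_th evolve as de_y/dt = v sin(e_th) and de_th/dt = omega + d. Here v is a constant forward speed, omega is the commanded yaw rate, and d is a bounded disturbance. The sliding surface, the gains `lam`, `eta`, `phi`, and the disturbance signal are hypothetical choices made for illustration.

```python
import math


def sat(x: float) -> float:
    """Saturation function, used instead of sign() to reduce chattering."""
    return max(-1.0, min(1.0, x))


def smc_yaw_rate(e_y: float, e_th: float, v: float,
                 lam: float = 0.5, eta: float = 0.5, phi: float = 0.05) -> float:
    """Yaw-rate command from a first-order sliding mode law (hypothetical gains).

    Sliding surface: s = v*sin(e_th) + lam*e_y, i.e. s = de_y/dt + lam*e_y.
    With d = 0, this command makes ds/dt = -eta*sat(s/phi).
    Valid only for v != 0 and |e_th| < pi/2.
    """
    s = v * math.sin(e_th) + lam * e_y
    return (-lam * v * math.sin(e_th) - eta * sat(s / phi)) / (v * math.cos(e_th))


def simulate(e_y0: float = 0.5, e_th0: float = 0.0, v: float = 1.0,
             dt: float = 0.01, t_end: float = 20.0) -> tuple[float, float]:
    """Forward-Euler simulation of the assumed error kinematics.

    A bounded yaw-rate disturbance d(t) = 0.05*sin(t) [rad/s] is injected to
    mimic unmodelled effects such as uneven outdoor ground (hypothetical).
    Returns the final (e_y, e_th).
    """
    e_y, e_th, t = e_y0, e_th0, 0.0
    while t < t_end:
        omega = smc_yaw_rate(e_y, e_th, v)
        d = 0.05 * math.sin(t)
        e_y += v * math.sin(e_th) * dt
        e_th += (omega + d) * dt
        t += dt
    return e_y, e_th


if __name__ == "__main__":
    final_e_y, final_e_th = simulate()
    print(f"final lateral deviation: {final_e_y:.4f} m, heading error: {final_e_th:.4f} rad")
```

Replacing sign(s) with sat(s/phi) trades exact sliding for a thin boundary layer around s = 0. This is a common way to avoid chattering on a real steering actuator. Outside that layer, s still converges toward it as long as eta exceeds v times the maximum of |d|. Once on the surface, e_y decays roughly as exp(-lam*t).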

Pallet handling system with an autonomous forklift


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.