Paper:

Gaze-Height and Speech-Timing Effects on Feeling Robot-Initiated Touches

Masahiro Shiomi*1, Takahiro Hirano*1,*2, Mitsuhiko Kimoto*1,*3, Takamasa Iio*1,*4,*5, and Katsunori Shimohara*1,*2

*1Advanced Telecommunications Research Institute International (ATR)

2-2-2 Hikaridai, Keihanna Science City, Kyoto 619-0288, Japan

*2Graduate School of Science and Engineering, Doshisha University

1-3 Tatara Miyakodani, Kyotanabe, Kyoto 610-0321, Japan

*3Keio University

3-14-1 Hiyoshi, Kohoku-ku, Yokohama, Kanagawa 223-8522, Japan

*4JST PRESTO

Research Division Gobancho Building, 7 Gobancho, Chiyoda-ku, Tokyo 102-0076, Japan

*5University of Tsukuba

1-1-1 Tennodai, Tsukuba, Ibaraki 305-8577, Japan

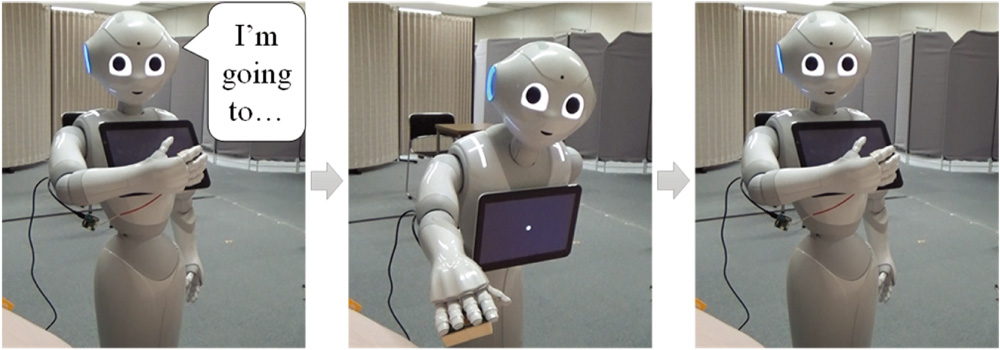

This paper reports the effects of communication cues on robot-initiated touch interactions at close distance, focusing on two factors: gaze-height for making eye contact, and speech timing before or after touches. Although both factors are essential for acceptable touches in human-human touch interaction, their effectiveness in human-robot touch interaction remains unknown. To investigate these factors, we conducted an experiment. Its results showed that participants preferred being touched with before-touch timing to being touched with after-touch timing. Gaze-height, however, did not significantly improve their feelings toward robot-initiated touch.

Touch with before-touch timing and crouching-down


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.