Paper:

Effects of Robot’s Awareness and its Subtle Reactions Toward People’s Perceived Feelings in Touch Interaction

Masahiro Shiomi*, Takashi Minato*, and Hiroshi Ishiguro*,**

*Advanced Telecommunications Research Institute International (ATR)

2-2-2 Hikaridai, Keihanna Science City, Kyoto 619-0288, Japan

**Osaka University

1-3 Machikaneyama, Toyonaka, Osaka 560-8531, Japan

This study addresses the effects of a robot’s awareness and its subtle reactions on the perceived feelings of people who touch the robot. When another person unexpectedly touches us, we react subtly and involuntarily. Because such reactions are involuntary, humans cannot eliminate them. However, intentionally implementing them in robots might positively affect people’s perceived feelings, particularly when a robot has a human-like appearance that evokes human-like reactions. We investigate the relationship between subtle reactions and awareness of a human’s presence, i.e., whether a robot’s awareness and its subtle reactions influence people’s impressions of the robot when they touch it. Our experiment with 20 participants and an android with a female-like appearance showed significant effects between awareness and subtle reactions. When the robot did not show awareness, its subtle reaction increased its perceived human-likeness. Moreover, when the robot did not show subtle reactions, showing awareness beforehand increased its perceived human-likeness.

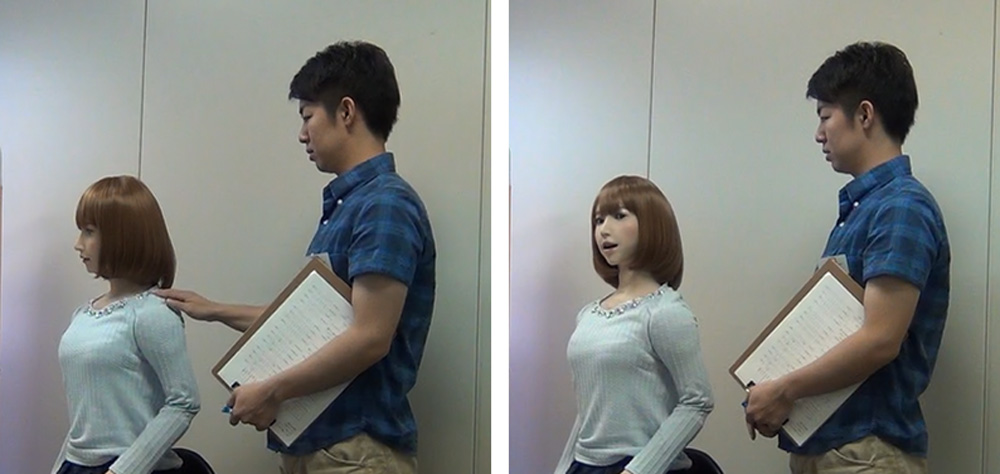

ERICA reacted to the touch of the participant

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
