Paper:

Motivation System for Students to Learn Control Engineering and Image Processing

Sam Ann Rahok*, Hirohisa Oneda**, Shigeji Osawa**, and Koichi Ozaki***

*National Institute of Technology, Oyama College

771 Nakakuki, Oyama, Tochigi 323-0806, Japan

**National Institute of Technology, Yuge College

1000 Yuge, Kamijima, Ehime 794-2593, Japan

***Utsunomiya University

712 Yoto, Utsunomiya, Tochigi 321-8585, Japan

Our aim was to motivate students to study control engineering and image processing. To this end, we designed a motivation system based on the ARCS model developed by John M. Keller, which consists of four components: attention, relevance, confidence, and satisfaction. We used a drone as the control object. In the control process, a PC receives images from the drone's camera via Wi-Fi and detects a target color using an image processing technique. The PC then sends control inputs computed by a PID controller back to the drone so that the drone tracks the target color by keeping it at the center of the image. With this system, students can gain knowledge of control engineering and image processing by tuning the parameters of the PID controller and the image processing, and by observing the drone's responses. To assess the effectiveness of our system, we asked 150 first-year technical college students with no prior knowledge of control engineering or image processing to attend our lecture. After the lecture, the students answered a questionnaire about their interests. The results showed that over 80% of them expressed an interest in learning these two techniques.
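The abstract does not give implementation details, so the following is only a minimal Python sketch of the detect-then-control loop it describes, under stated assumptions. It assumes the target color is detected by thresholding in HSV color space (a common technique, not confirmed as the authors' method), that the target position is the centroid of the matching pixels, and that two independent PID controllers turn the horizontal and vertical offsets from the image center into commands. Frame capture over Wi-Fi and sending commands to the drone are omitted. The function names, the threshold values, and the sign convention for the commands are all hypothetical.

```python
import colorsys


def detect_target(image, hue_range=(0.55, 0.70), min_sat=0.4, min_val=0.3):
    """Return the (x, y) centroid of pixels whose HSV color lies in the
    (hypothetical) target range, or None if no pixel matches.
    `image` is a list of rows of (r, g, b) tuples with 0-255 channels."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            # colorsys works on floats in [0, 1] and returns h, s, v in [0, 1].
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            if hue_range[0] <= h <= hue_range[1] and s >= min_sat and v >= min_val:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count


class PID:
    """Discrete PID controller: u = Kp*e + Ki*sum(e*dt) + Kd*(de/dt)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first sample, since there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def control_step(image, pid_x, pid_y, dt):
    """One loop iteration: locate the target and return (u_x, u_y).
    Assumed convention: the error is the target's offset from the image
    center (positive = right/down), so a positive command means "move
    right/down". Returns (0.0, 0.0) if the target is not found."""
    height, width = len(image), len(image[0])
    target = detect_target(image)
    if target is None:
        return 0.0, 0.0
    cx, cy = target
    error_x = cx - (width - 1) / 2
    error_y = cy - (height - 1) / 2
    return pid_x.update(error_x, dt), pid_y.update(error_y, dt)
```

For example, on a 5 x 5 black frame with a single blue pixel at x = 4, y = 2, the centroid is (4.0, 2.0), two pixels right of center. A proportional-only controller with Kp = 0.5 then returns a horizontal command of 1.0 and a vertical command of 0.0. Changing `kp`, `ki`, `kd` and the HSV thresholds and watching how the commands (and, on a real drone, the responses) change is the kind of parameter exploration the abstract describes.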

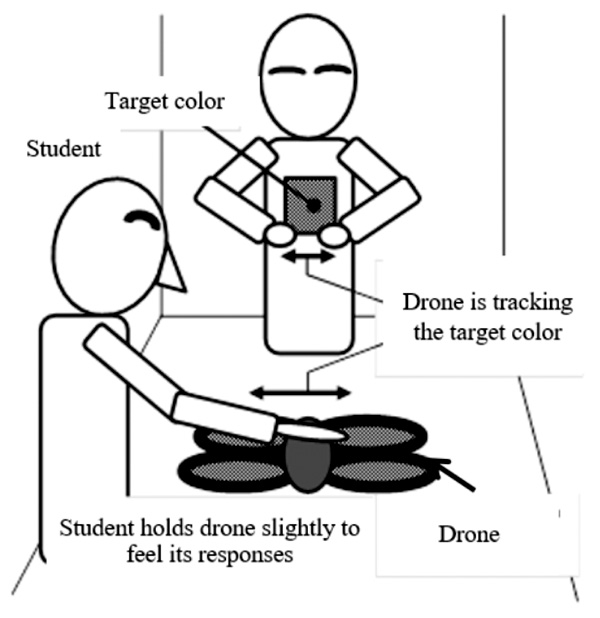

Bodily sensations of the drone responses


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.