Paper:

Acquisition of Disaster Emergency Information Using a Terrain Database by Flying Robots

Yasuki Miyazaki*, Takafumi Hirano**, Takaaki Kobayashi*, Yoshihiro Imai*, Shin Usuki*, Yuichi Kobayashi*, Kenji Terabayashi***, and Kenjiro T. Miura*

*Shizuoka University

3-5-1 Johoku, Naka-ku, Hamamatsu, Shizuoka 432-8561, Japan

**Yokohama National University

79-5 Tokiwadai, Hodogaya-ku, Yokohama, Kanagawa 240-8501, Japan

***University of Toyama

3190 Gofuku, Toyama-shi, Toyama 930-8555, Japan

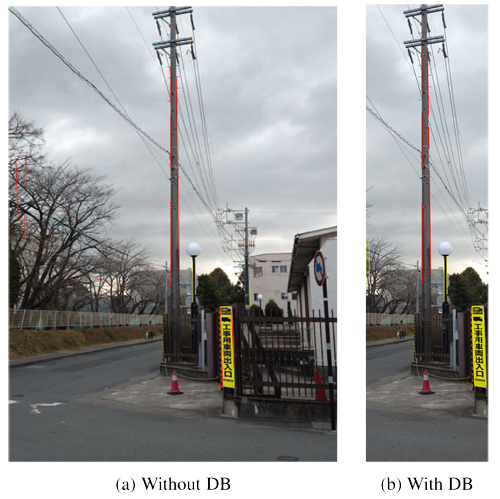

Large-scale disasters such as earthquakes, tsunamis, volcanic eruptions, and river floods occur frequently, which has increased the demand for robotic technology in emergency response, restoration, and disaster prevention. One such technology is damage assessment using flying robots, which have developed rapidly in recent years. In this study, we use images of the disaster site obtained from a flying robot, together with a terrain database consisting of pre-disaster 3-D data, to efficiently detect two kinds of disaster-related events: the collapse of electric utility poles, which are man-made objects, and the change in water level before and after the flooding of a river, which is part of the natural landscape. By detecting these events, we show the validity of the proposed method for assessing the damage situation using the terrain database.

Poles were detected with a multiresolution terrain database


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
