Paper:

Assessment of MUSIC-Based Noise-Robust Sound Source Localization with Active Frequency Range Filtering

Kotaro Hoshiba*1, Kazuhiro Nakadai*2,*3, Makoto Kumon*4, and Hiroshi G. Okuno*5

*1Department of Electrical, Electronics and Information Engineering, Faculty of Engineering, Kanagawa University

3-27-1 Rokkakubashi, Kanagawa-ku, Yokohama-shi, Kanagawa 221-8686, Japan

*2Department of Systems and Control Engineering, School of Engineering, Tokyo Institute of Technology

2-12-1 Ookayama, Meguro-ku, Tokyo 152-8552, Japan

*3Honda Research Institute Japan Co., Ltd.

8-1 Honcho, Wako-shi, Saitama 351-0188, Japan

*4Faculty of Advanced Science and Technology, Kumamoto University

2-39-1 Kurokami, Chuo-ku, Kumamoto-shi, Kumamoto 860-8555, Japan

*5Faculty of Science and Engineering, Waseda University

2-4-12 Okubo, Shinjuku-ku, Tokyo 169-8555, Japan

We have studied sound source localization using a microphone array embedded in a UAV (unmanned aerial vehicle) to detect people in need of rescue in disaster-stricken areas and other dangerous situations, and we have proposed sound source localization methods for outdoor environments. In these methods, noise robustness and real-time processing are in a trade-off relationship. This trade-off must be resolved before the methods can be applied in practice, because sound source localization in a disaster area requires both. To this end, we propose a sound source localization method that uses an active frequency range filter based on the MUSIC (MUltiple SIgnal Classification) method. The proposed method successively creates and applies a frequency range filter using only the four arithmetic operations, so it can ensure both noise robustness and real-time processing. Numerical simulations comparing the successful localization rate and the processing delay with those of conventional methods confirmed the usefulness of the proposed method, which achieves both noise robustness and real-time processing.
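As a rough illustration of the idea of restricting MUSIC to a frequency range, the following minimal sketch computes a standard narrowband MUSIC spectrum per frequency bin and sums only the bins inside a chosen band. It is not the AFRF-MUSIC algorithm itself: the linear array geometry, the fixed band edges, the simulated signal, and all function names are assumptions made for this example, and the abstract does not specify how the active filter selects its range.

```python
import numpy as np

C = 343.0  # speed of sound in m/s

def steering_vector(freq_hz, theta_deg, mic_x):
    """Far-field steering vector for a linear array (mic positions in metres)."""
    delay = mic_x * np.sin(np.deg2rad(theta_deg)) / C
    return np.exp(-2j * np.pi * freq_hz * delay)

def music_spectrum(R, freq_hz, thetas_deg, mic_x, n_sources=1):
    """Narrowband MUSIC pseudo-spectrum from a spatial correlation matrix R."""
    _, vecs = np.linalg.eigh(R)             # eigenvalues come in ascending order
    En = vecs[:, : R.shape[0] - n_sources]  # eigenvectors spanning the noise subspace
    p = np.empty(len(thetas_deg))
    for i, th in enumerate(thetas_deg):
        a = steering_vector(freq_hz, th, mic_x)
        p[i] = np.real(a.conj() @ a) / np.linalg.norm(En.conj().T @ a) ** 2
    return p

def band_limited_music(R_by_freq, thetas_deg, mic_x, f_lo, f_hi):
    """Sum the MUSIC spectra over the bins inside [f_lo, f_hi] only."""
    total = np.zeros(len(thetas_deg))
    for f, R in R_by_freq.items():
        if f_lo <= f <= f_hi:
            total += music_spectrum(R, f, thetas_deg, mic_x)
    return total

# Hypothetical setup: 8 microphones spaced 4 cm apart, one source at 20 degrees.
rng = np.random.default_rng(0)
mic_x = np.arange(8) * 0.04
true_theta = 20.0
n_snapshots = 200

R_by_freq = {}
for f in (500.0, 1000.0, 1500.0, 2000.0, 3000.0):
    a = steering_vector(f, true_theta, mic_x)
    s = rng.standard_normal(n_snapshots) + 1j * rng.standard_normal(n_snapshots)
    noise = 0.3 * (rng.standard_normal((8, n_snapshots))
                   + 1j * rng.standard_normal((8, n_snapshots)))
    X = np.outer(a, s) + noise              # simulated snapshots at this bin
    R_by_freq[f] = X @ X.conj().T / n_snapshots

thetas = np.arange(-90, 91)
spectrum = band_limited_music(R_by_freq, thetas, mic_x, f_lo=1000.0, f_hi=3000.0)
estimate = thetas[int(np.argmax(spectrum))]
print("estimated direction (deg):", estimate)
```

In this sketch the band edges `f_lo` and `f_hi` are fixed by hand. Narrowing the band reduces the number of eigendecompositions per frame, which is where most of the cost of MUSIC lies, and it excludes bins dominated by noise.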

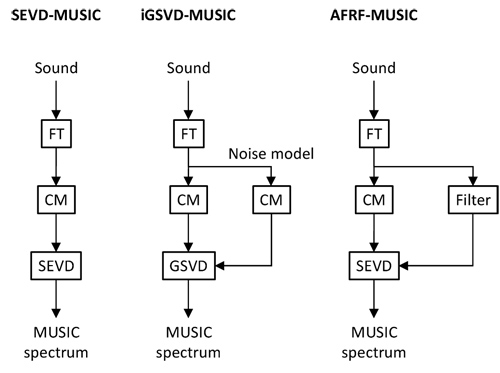

Flowchart of the proposed AFRF-MUSIC


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
