Paper:

A Non-Linear Manifold Alignment Approach to Robot Learning from Demonstrations

Ndivhuwo Makondo*1,*2, Michihisa Hiratsuka*3, Benjamin Rosman*2,*4, and Osamu Hasegawa*5

*1Interdisciplinary Graduate School of Science and Engineering, Tokyo Institute of Technology

4259 Nagatsuta-cho, Midori-ku, Yokohama 226-8503, Japan

*2Mobile Intelligent Autonomous Systems, Council for Scientific and Industrial Research

Meiring Naude Road, Brummeria, Pretoria 0001, South Africa

*3Data Engineering Group, Recruit Lifestyle Co., Ltd.

1-9-2 Marunouchi, Chiyoda-ku 100-6640, Japan

*4School of Computer Science and Applied Mathematics, University of the Witwatersrand

Meiring Naude Road, Brummeria, Pretoria 0001, South Africa

*5Faculty of Engineering, Department of Systems and Control Engineering, Tokyo Institute of Technology

4259 Nagatsuta-cho, Midori-ku, Yokohama 226-8503, Japan

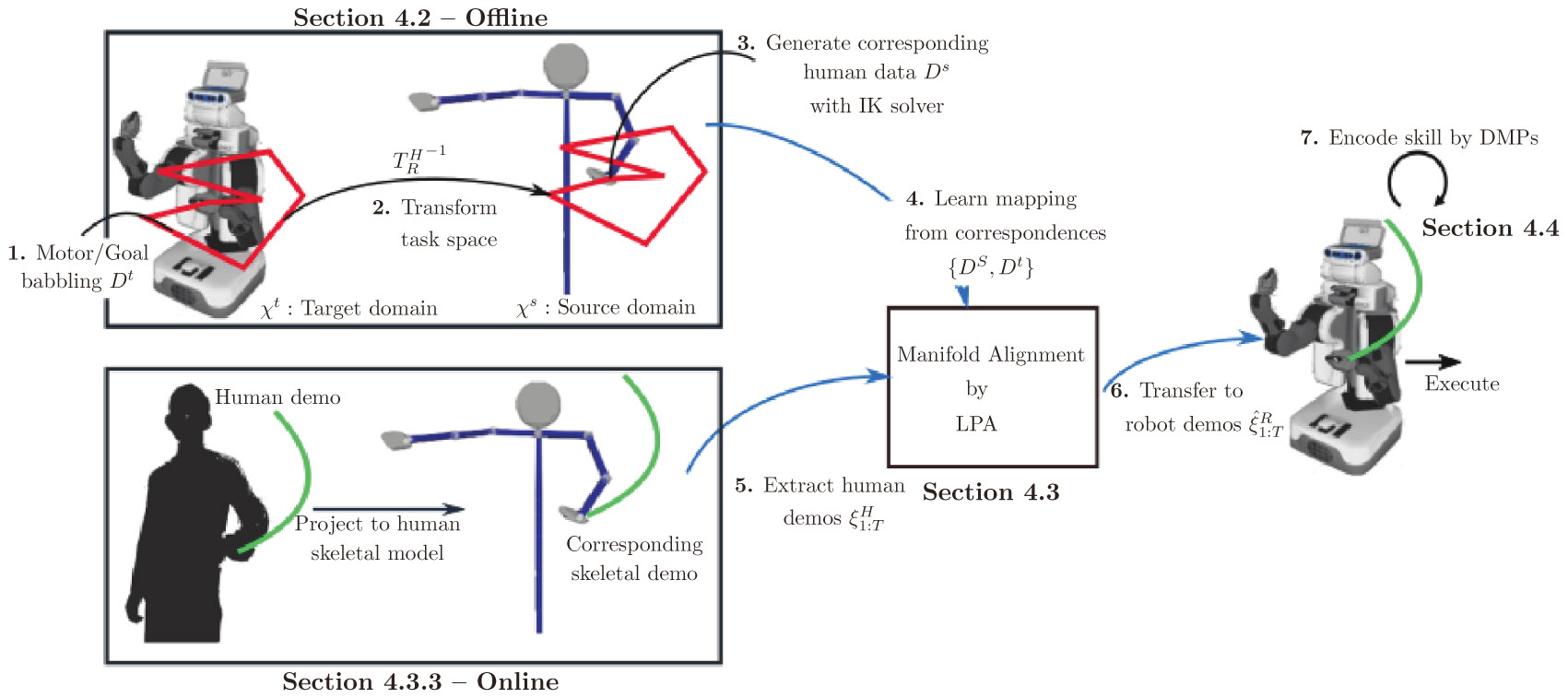

The number and variety of robots operating in real-world environments are growing, as is the range of skills they are expected to acquire. To this end, we present a method that enables robots with unknown kinematics to learn skills from demonstrations, so that users who are not robotics experts can easily teach a skill to a robot whose kinematics may differ from a human's and are not known in advance. Our method takes as input a motion trajectory captured from human demonstrations with a vision-based system, which is then projected onto a corresponding human skeletal model. The kinematic mapping between the robot and the human model is learned with Local Procrustes Analysis, a manifold alignment technique that enables the demonstrated trajectory to be transferred from the human model to the robot. Finally, the transferred trajectory is encoded as a parameterized motion skill using Dynamic Movement Primitives, allowing it to be generalized to different situations. Simulation experiments on the PR2 and Meka robots show that, with our method, the robots correctly imitate various skills demonstrated by a human, and we also analyze the transfer of the acquired skills between the two robots.
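The abstract names Local Procrustes Analysis as the tool that learns the human-to-robot kinematic mapping. As a rough illustration of the general idea only (not the authors' implementation), the sketch below splits paired samples into local regions, fits a Procrustes-style similarity transform (rotation, uniform scale, translation) in each region, and blends the local maps with Gaussian weights. It assumes 2-D points with known one-to-one correspondences so that only the Python standard library is needed; the class `LocalProcrustes`, the plain k-means clustering, the kernel width `sigma`, and the toy mapping z → z² are all hypothetical choices made for this sketch.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Similarity = Tuple[float, float, Point]  # (scale, rotation angle, translation)


def fit_procrustes_2d(xs: List[Point], ys: List[Point]) -> Similarity:
    """Least-squares fit of y ~ scale * R(theta) x + t (rotation only, no reflection)."""
    n = len(xs)
    mx = (sum(p[0] for p in xs) / n, sum(p[1] for p in xs) / n)
    my = (sum(p[0] for p in ys) / n, sum(p[1] for p in ys) / n)
    a = b = sxx = 0.0
    for (x1, x2), (y1, y2) in zip(xs, ys):
        x1, x2, y1, y2 = x1 - mx[0], x2 - mx[1], y1 - my[0], y2 - my[1]
        a += x1 * y1 + x2 * y2  # sum of dot products of centred pairs
        b += x1 * y2 - x2 * y1  # sum of 2-D cross products of centred pairs
        sxx += x1 * x1 + x2 * x2
    if sxx == 0.0:  # degenerate patch: fall back to a pure translation
        return 1.0, 0.0, (my[0] - mx[0], my[1] - mx[1])
    theta = math.atan2(b, a)  # optimal rotation angle
    scale = math.hypot(a, b) / sxx  # optimal uniform scale
    c, s = math.cos(theta), math.sin(theta)
    t = (my[0] - scale * (c * mx[0] - s * mx[1]),
         my[1] - scale * (s * mx[0] + c * mx[1]))
    return scale, theta, t


def apply_similarity(m: Similarity, p: Point) -> Point:
    scale, theta, (tx, ty) = m
    c, s = math.cos(theta), math.sin(theta)
    return (scale * (c * p[0] - s * p[1]) + tx,
            scale * (s * p[0] + c * p[1]) + ty)


def kmeans(xs: List[Point], k: int, iters: int = 25):
    """Plain k-means with evenly spaced initial centres (deterministic for the demo)."""
    centers = [xs[i * len(xs) // k] for i in range(k)]
    for _ in range(iters):
        labels = [min(range(k), key=lambda j: math.dist(p, centers[j])) for p in xs]
        for j in range(k):
            members = [p for p, lab in zip(xs, labels) if lab == j]
            if members:
                centers[j] = (sum(p[0] for p in members) / len(members),
                              sum(p[1] for p in members) / len(members))
    labels = [min(range(k), key=lambda j: math.dist(p, centers[j])) for p in xs]
    return centers, labels


class LocalProcrustes:
    """Hypothetical, simplified LPA-style mapper (not the paper's exact algorithm):
    one similarity map per cluster, blended by Gaussian weights on distance to centres."""

    def __init__(self, k: int = 4, sigma: float = 0.1):
        self.k, self.sigma = k, sigma

    def fit(self, xs: List[Point], ys: List[Point]) -> "LocalProcrustes":
        centers, labels = kmeans(xs, self.k)
        self.models = []
        for j in range(self.k):
            idx = [i for i, lab in enumerate(labels) if lab == j]
            if idx:  # skip empty clusters
                local = fit_procrustes_2d([xs[i] for i in idx], [ys[i] for i in idx])
                self.models.append((centers[j], local))
        return self

    def map(self, p: Point) -> Point:
        # Softmax of -d^2 / (2 sigma^2), shifted by the max for numerical stability.
        logw = [-math.dist(p, c) ** 2 / (2 * self.sigma ** 2) for c, _ in self.models]
        top = max(logw)
        w = [math.exp(v - top) for v in logw]
        total = sum(w)
        out = [apply_similarity(m, p) for _, m in self.models]
        return (sum(wi * q[0] for wi, q in zip(w, out)) / total,
                sum(wi * q[1] for wi, q in zip(w, out)) / total)


if __name__ == "__main__":
    # Toy correspondences (hypothetical): z -> z**2, which is locally a rotation + scale.
    xs = [(1 + 2 * i / 19, x2) for i in range(20) for x2 in (0.0, 0.2)]
    ys = [(x1 * x1 - x2 * x2, 2 * x1 * x2) for x1, x2 in xs]
    lpa = LocalProcrustes(k=4, sigma=0.1).fit(xs, ys)
    glob = fit_procrustes_2d(xs, ys)

    def mean_error(f) -> float:
        return sum(math.dist(f(p), q) for p, q in zip(xs, ys)) / len(xs)

    print("global:", mean_error(lambda p: apply_similarity(glob, p)))
    print("local: ", mean_error(lpa.map))
```

Because z → z² is conformal, it looks locally like a rotation plus scaling, so a few local similarity maps should track it much more closely than a single global one; the printed errors let you check this on the toy data. Real human and robot configuration spaces generally differ in dimension and meaning, which this sketch does not attempt to handle.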

Manifold alignment for robot learning


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.