Paper:

Robust and Accurate Monocular Vision-Based Localization in Outdoor Environments of Real-World Robot Challenge

Adi Sujiwo*, Eijiro Takeuchi*, Luis Yoichi Morales**, Naoki Akai**, Hatem Darweesh*, Yoshiki Ninomiya**, and Masato Edahiro*

*Graduate School of Informatics, Nagoya University

Furo-cho, Chikusa-ku, Nagoya 464-8603, Japan

**Institute of Innovation for Future Society, Nagoya University

Furo-cho, Chikusa-ku, Nagoya 464-8603, Japan

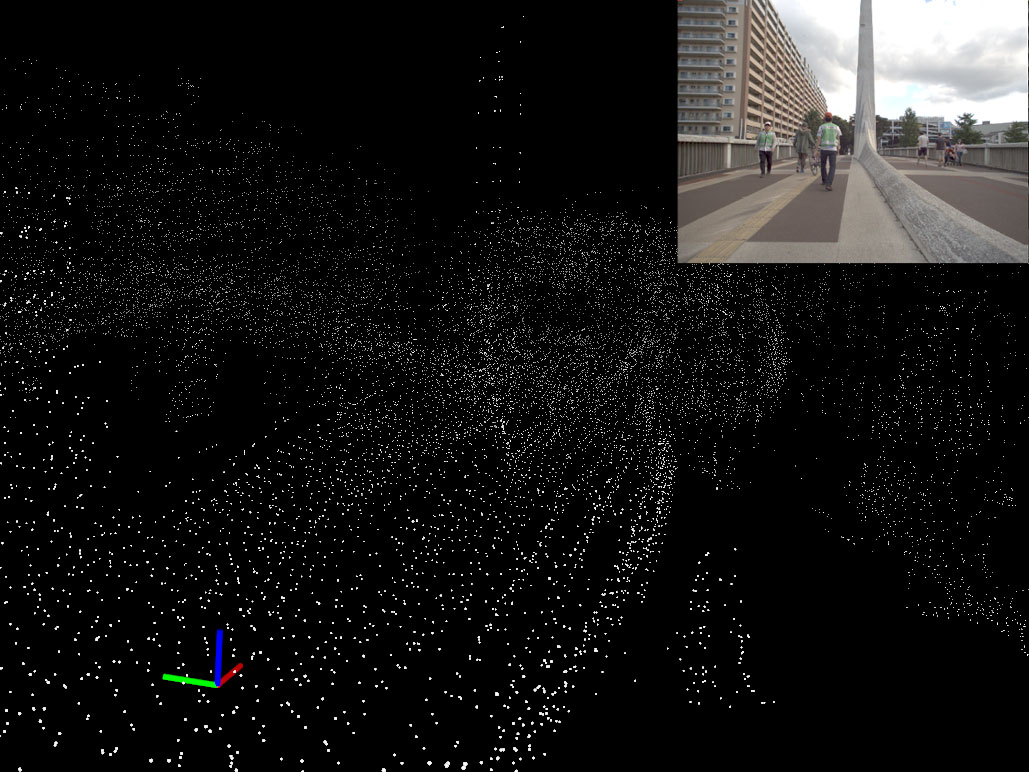

This paper describes our approach to robust metric localization with a monocular camera in the dynamic environments of Tsukuba Challenge 2016. We address two issues related to vision-based navigation. First, we improved localization coverage by building a custom vocabulary from the scene and by improving the place recognition routine, which is key to global localization. Second, we established the possibility of lifelong localization by using the previous year’s map. Experimental results show that localization coverage was higher than 90% for six different data sets taken in different years, while average localization errors were under 0.2 m. Finally, the average coverage for data sets tested with maps taken in different years was 75%.

Visual localization within metric point cloud map

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.