Paper:

Influence of Different Impulse Response Measurement Signals on MUSIC-Based Sound Source Localization

Takuya Suzuki*, Hiroaki Otsuka*, Wataru Akahori*, Yoshiaki Bando**, and Hiroshi G. Okuno***

*Faculty of Science and Engineering, Waseda University

3-4-1 Okubo, Shinjuku, Tokyo 169-8555, Japan

**Graduate School of Informatics, Kyoto University

Yoshida-honmachi, Sakyo, Kyoto 606-8501, Japan

***Graduate Program for Embodiment Informatics, Waseda University

2-4-12 Okubo, Shinjuku, Tokyo 169-0072, Japan

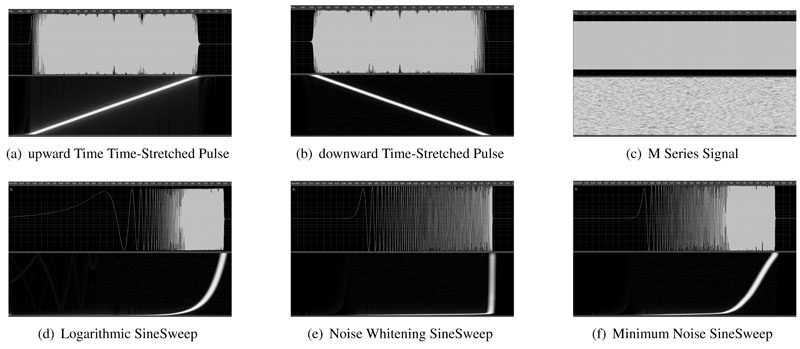

Six impulse response measurement signals

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.