Paper:

Visual Servoing for Underwater Vehicle Using Dual-Eyes Evolutionary Real-Time Pose Tracking

Myo Myint, Kenta Yonemori, Akira Yanou, Khin Nwe Lwin, Mamoru Minami, and Shintaro Ishiyama

Graduate School of Natural Science and Technology, Okayama University

3-1-1 Tsushima-naka, Kita-ku, Okayama 700-8530, Japan

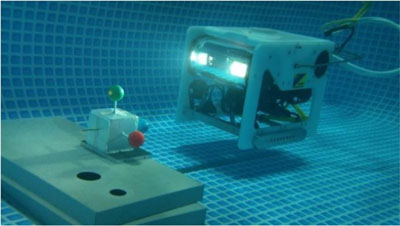

ROV with dual-eyes cameras and 3D marker

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
