Research Paper:

Research on the Application of Intelligent Object Recognition System in Classroom Attendance and Student Behavior Analysis in Universities

Lin Yang*, Gai Hang**, and Xuehui Zhang*,†

*School of Electronic Information and Automation, Guilin University of Aerospace Technology

2 Jinji Road, Qixing District, Guilin, Guangxi 541004, China

†Corresponding author

**School of Management, Guilin University of Aerospace Technology

2 Jinji Road, Qixing District, Guilin, Guangxi 541004, China

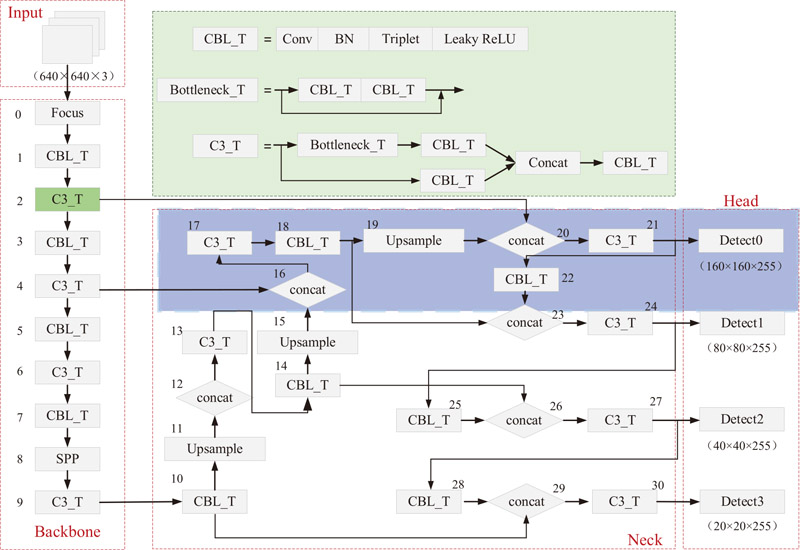

In order to better understand the overall learning status of students, evaluate classroom attendance in universities, and promote the high-quality development of higher education, analyzing student behavior in the classroom is extremely important. Existing research on student behavior recognition primarily focuses on identifying individual students, with insufficient attention given to their interactions with surrounding objects. To more accurately detect the required targets within a classroom, this paper proposes a multi-target detection method based on an improved YOLOv5s model. Firstly, to address the issue of small-scale targets such as mobile phones and pens in the classroom scene, which have limited extractable features, this paper adopted measures to optimize the network structure. Secondly, considering the interference of irrelevant information such as classroom backgrounds and varying student attire in real classroom environments, which makes it difficult for the network to extract effective features, the triplet attention mechanism was introduced to enhance the network’s feature extraction capability. Finally, experiments were conducted on both a self-constructed dataset and a public dataset. The experimental results show that the mAP values of the improved network increased by 4.5 percentage point and 3.2 percentage point, respectively, verifying the effectiveness of the improvements.

YOLOv5s-SA network structure diagram
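The abstract states that triplet attention is added to YOLOv5s but does not give its placement or hyperparameters. As a rough illustration of the mechanism itself, the following NumPy sketch follows the three-branch design of the original triplet attention module (Misra et al., 2021): each branch rotates the feature map so that a different pair of dimensions (C and W, H and C, H and W) is gated, compresses the remaining axis with Z-pooling (max and mean), derives a sigmoid gate with a small convolution, rescales the input, and rotates it back; the three results are averaged. Assumptions: a single (C, H, W) map with no batch axis, convolution kernels passed in by the caller rather than learned, and batch normalization omitted. The function names are hypothetical, and this is not the authors' YOLOv5s-SA code.

```python
import numpy as np


def z_pool(t):
    """Z-pool: stack the max and the mean over axis 0 -> shape (2, A, B)."""
    return np.stack([t.max(axis=0), t.mean(axis=0)])


def conv2d_same(feat, weight):
    """Naive 2-in/1-out cross-correlation with zero 'same' padding.

    feat: (2, A, B); weight: (2, k, k) with k odd. Returns (A, B).
    """
    k = weight.shape[-1]
    p = k // 2
    _, a, b = feat.shape
    padded = np.pad(feat, ((0, 0), (p, p), (p, p)))  # zero padding
    out = np.zeros((a, b))
    for i in range(k):
        for j in range(k):
            # Weighted sum over the 2 pooled channels for this kernel offset.
            out += np.einsum("c,cab->ab", weight[:, i, j], padded[:, i:i + a, j:j + b])
    return out


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def attention_branch(t, weight):
    """One gate: Z-pool over axis 0, conv, sigmoid, rescale t (BN omitted)."""
    gate = sigmoid(conv2d_same(z_pool(t), weight))  # shape (A, B), values in (0, 1)
    return t * gate[None, :, :]


def triplet_attention(x, weights):
    """x: (C, H, W) feature map; weights: three (2, k, k) kernels (hypothetical)."""
    w_cw, w_hc, w_hw = weights
    # Branch 1: swap C and H so the gate spans (C, W); rotate back afterwards.
    y1 = attention_branch(x.transpose(1, 0, 2), w_cw).transpose(1, 0, 2)
    # Branch 2: swap C and W so the gate spans (H, C); rotate back afterwards.
    y2 = attention_branch(x.transpose(2, 1, 0), w_hc).transpose(2, 1, 0)
    # Branch 3: plain spatial attention, gate spans (H, W).
    y3 = attention_branch(x, w_hw)
    return (y1 + y2 + y3) / 3.0


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((8, 16, 16))  # e.g. 8 channels on a 16x16 grid
    kernels = [0.1 * rng.standard_normal((2, 7, 7)) for _ in range(3)]
    y = triplet_attention(x, kernels)
    print(y.shape)  # (8, 16, 16)
```

Because every gate lies in (0, 1), the averaged output keeps the sign of each input activation and never exceeds it in magnitude; with all-zero kernels each gate equals 0.5, so the module simply halves the input. In a trained detector the kernels (7×7 in the original module) and normalization parameters would be learned.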

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.