Research Paper:

Color Visual Expression in Product Packaging Design Based on Feature Fusion Network

Zemei Liu

School of Art and Design, Pingdingshan University

Southern Section of Weilai Road, Xinhua District, Pingdingshan, Henan 467000, China

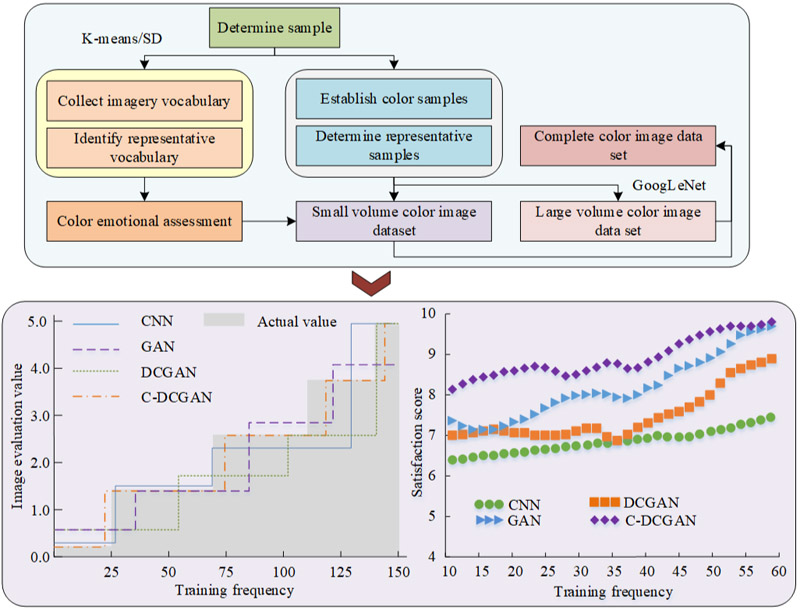

To improve the effectiveness of color visual expression schemes for product packaging and thereby enhance product competitiveness and appeal, this study constructed a color intent dataset using multiple fusion algorithms. On this basis, it built a visual expression model for product packaging color based on a conditional deep convolutional generative adversarial network (CDCGAN). In the empirical analysis, the model achieved an accuracy of 94.36% with a running time of 50.2 seconds, outperforming the comparison models in both accuracy and computing speed. A user satisfaction evaluation further gave the model an average score of 9.2, higher than that of the comparison models, indicating that the color schemes it generates better meet user needs. The model therefore shows strong application potential and provides a theoretical basis for product packaging color design.
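The abstract names the generator family but not how the color-intent condition enters the network. The sketch below assumes the common conditional DCGAN convention. The condition is one-hot encoded and concatenated with the noise vector for the generator. For the discriminator, it is broadcast to constant per-pixel maps that are appended to the image channels. The class count (5), the noise size (100), and the helper names `one_hot`, `generator_input`, and `discriminator_input` are hypothetical and are not taken from the paper.

```python
import random
from typing import List

# Hypothetical sizes for illustration only; the paper does not state them here.
NUM_INTENT_CLASSES = 5
NOISE_DIM = 100

Image = List[List[List[float]]]  # channels x height x width


def one_hot(label: int, num_classes: int) -> List[float]:
    """Encode a (hypothetical) color-intent class index as a one-hot vector."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} out of range [0, {num_classes})")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def generator_input(label: int, num_classes: int, noise_dim: int,
                    rng: random.Random) -> List[float]:
    """Conditional generator input: Gaussian noise z concatenated with one-hot y."""
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return z + one_hot(label, num_classes)


def discriminator_input(image: Image, label: int, num_classes: int) -> Image:
    """Append one constant H x W map per class so the label is visible at every pixel."""
    height, width = len(image[0]), len(image[0][0])
    label_maps = [[[value] * width for _ in range(height)]
                  for value in one_hot(label, num_classes)]
    return image + label_maps


if __name__ == "__main__":
    rng = random.Random(0)
    z_y = generator_input(2, NUM_INTENT_CLASSES, NOISE_DIM, rng)
    print(len(z_y))  # 105: 100 noise values + 5 label entries
    rgb = [[[0.0] * 4 for _ in range(4)] for _ in range(3)]  # dummy 3x4x4 image
    print(len(discriminator_input(rgb, 2, NUM_INTENT_CLASSES)))  # 8 channels
```

When the label is broadcast as extra channels, the discriminator's first convolution sees the condition at every spatial position. A learned embedding layer is a common alternative. The abstract does not state which variant this model uses.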

Visual expression model of packaging color

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.