Research Paper:

Human Behavior Recognition Algorithm Based on Multi-Modal Sensor Data Fusion

Dingchao Zheng†, Caiwei Chen, and Jianzhe Yu

Zhejiang Dongfang Polytechnic

No.433 Jinhai 3rd Road, Longwan District, Wenzhou, Zhejiang 325000, China

†Corresponding author

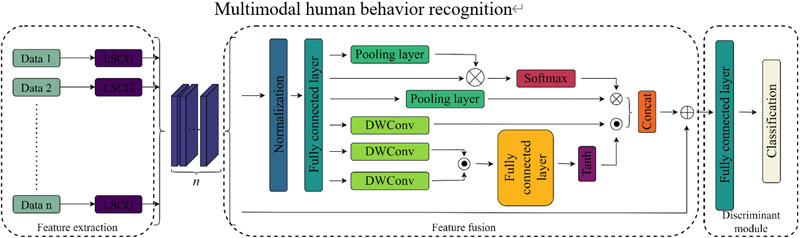

With advances in artificial intelligence and computing, sensor-based human behavior recognition has gradually been applied in many emerging interdisciplinary fields such as smart healthcare and motion monitoring. First, to address inaccurate and insufficient feature extraction from sensor data in human behavior recognition models, we design a single-modality deep learning model for human behavior recognition based on the lifting wavelet transform (lifting scheme convolutional neural networks-gated recurrent unit, LSCG). The LSCG network consists of a wavelet decomposition module and a feature fusion module. Then, to overcome the limited recognition ability of a single modality, we design a multimodal human behavior recognition model based on LSCG (multimodal lifting scheme convolutional neural networks-gated recurrent unit, MultiLSCG). The MultiLSCG network consists of a feature extraction module and a multimodal feature fusion module. The feature extraction module is built from LSCG models, which allows the network to extract features from human behavior data of different modalities. The multimodal feature fusion module extracts both the global and the local feature information of the human behavior signals, so that the model obtains richer features from multimodal behavior signals. Finally, experimental results on the public OPPORTUNITY dataset show an accuracy of 91.58% on the motion pattern subset and 88.53% on the gesture recognition subset, both higher than those of existing mainstream neural networks. On the UCI-HAR and WISDM datasets, the proposed model reaches accuracies of 96.38% and 97.48%, respectively, further verifying its validity and applicability.
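The abstract names the lifting wavelet transform as the basis of the LSCG wavelet decomposition module but does not specify its filters. As a minimal sketch, assuming the classical Haar wavelet and plain Python lists (both assumptions, not the authors' implementation), one lifting level splits a sensor sequence into even and odd samples, predicts each odd sample from its even neighbour to obtain detail coefficients, and updates the even samples to obtain approximation coefficients. The function names below are hypothetical.

```python
from typing import List, Tuple


def haar_lifting_forward(x: List[float]) -> Tuple[List[float], List[float]]:
    """One level of the classical Haar lifting scheme: split, predict, update."""
    if len(x) % 2 != 0:
        raise ValueError("signal length must be even")
    # Split: separate the sequence into even- and odd-indexed samples.
    even = x[0::2]
    odd = x[1::2]
    # Predict: each odd sample is predicted by its even neighbour;
    # the prediction residual is the detail (high-frequency) coefficient.
    detail = [o - e for e, o in zip(even, odd)]
    # Update: correct the even samples so the approximation equals the pairwise mean.
    approx = [e + d / 2 for e, d in zip(even, detail)]
    return approx, detail


def haar_lifting_inverse(approx: List[float], detail: List[float]) -> List[float]:
    """Undo the update and predict steps in reverse order, then merge."""
    even = [a - d / 2 for a, d in zip(approx, detail)]
    odd = [d + e for e, d in zip(even, detail)]
    merged: List[float] = []
    for e, o in zip(even, odd):
        merged.extend([e, o])
    return merged


def haar_lifting_multilevel(x: List[float], levels: int) -> Tuple[List[float], List[List[float]]]:
    """Repeatedly decompose the approximation; return the final approximation and all details."""
    approx = list(x)
    details: List[List[float]] = []
    for _ in range(levels):
        approx, d = haar_lifting_forward(approx)
        details.append(d)
    return approx, details


if __name__ == "__main__":
    # Hypothetical short accelerometer window (one axis).
    window = [2, 4, 6, 8]
    a, d = haar_lifting_forward(window)
    print(a, d)                          # [3.0, 7.0] [2, 2]
    print(haar_lifting_inverse(a, d))    # [2.0, 4.0, 6.0, 8.0]
    print(haar_lifting_multilevel(window, 2))  # ([5.0], [[2, 2], [4.0]])
```

Because each lifting step is inverted simply by reversing the update and predict operations, the decomposition loses no information; repeating it on the approximation yields multi-level coefficients that a downstream CNN-GRU stage could consume.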

Multimodal human behavior recognition

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.