Research Paper:

Adding Noise to Super-Resolution Training Set: Method to Denoise Super Resolution for Structure from Motion Preprocessing

Kaihang Zhang*,†

, Hajime Nobuhara*

, Hajime Nobuhara*

, and Muhammad Haris**

, and Muhammad Haris**

*University of Tsukuba

1-1-1 Tennodai, Tsukuba, Ibaraki 305-8577, Japan

†Corresponding author

**Universitas Nusa Mandiri

Jatiwaringin, Cipinang, Jakarta Timur 13620, Indonesia

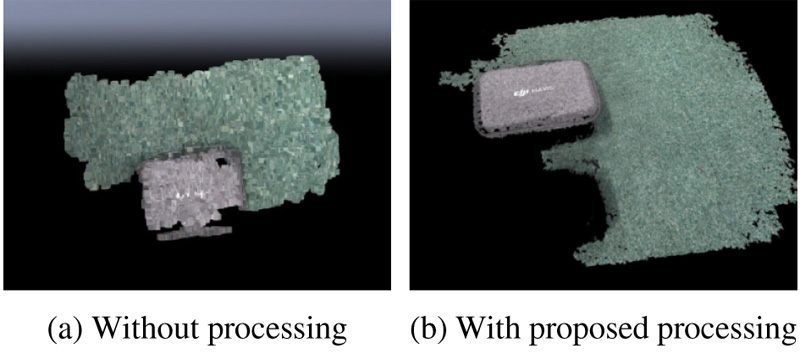

The resolution and noise levels of input images directly affect the three-dimensional (3D) structure-from-motion (SfM) reconstruction performance. Conventional super-resolution (SR) methods focus too little on denoising, and latent image noise becomes worse when resolution is improved. This study proposes two SR denoising training algorithms to simultaneously improve resolution and noise: add-noise-before-downsampling and downsample-before-adding-noise. These portable methods preprocess low-resolution training images using real-world noise samples instead of altering the basic neural network. Hence, they concurrently improve resolution while reducing noise for an overall cleaner SfM performance. We applied these methods to the existing SR network: super-resolution convolutional neural network, enhanced deep residual super-resolution, residual channel attention network, and efficient super-resolution transformer, comparing their performances with those of conventional methods. Impressive peak signal-to-noise and structural similarity improvements of 0.12 dB and 0.56 were achieved on the noisy images of Smartphone Image Denoising Dataset, respectively, without altering the network structure. The proposed methods caused a very small loss (<0.01 dB) on clean images. Moreover, using the proposed SR algorithm makes the 3D SfM reconstruction more complete. Upon applying the methods to non-preprocessed and conventionally preprocessed models, the mean projection error was reduced by a maximum of 27% and 4%, respectively, and the number of 3D densified points was improved by 310% and 7%, respectively.

Improve performance on noisy SR and SfM

- [1] K. Zhang and H. Nobuhara, “A denoisable super resolution method: A way to improve structure from motion’s performance against CMOS’s noise,” 2022 IEEE Int. Conf. on Systems, Man, and Cybernetics, pp. 3080-3085, 2022. https://doi.org/10.1109/SMC53654.2022.9945277

- [2] Y. Furukawa and J. Ponce, “Accurate, dense, and robust multiview stereopsis,” IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol.32, No.8, pp. 1362-1376, 2010. https://doi.org/10.1109/TPAMI.2009.161

- [3] Z. Wang, J. Chen, and S. C. H. Hoi, “Deep learning for image super-resolution: A survey,” IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol.43, No.10, pp. 3365-3387, 2021. https://doi.org/10.1109/TPAMI.2020.2982166

- [4] C. Dong, C. C. Loy, K. He, and X. Tang, “Image super-resolution using deep convolutional networks,” IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol.38, No.2, pp. 295-307, 2016. https://doi.org/10.1109/TPAMI.2015.2439281

- [5] S. Son, J. Kim, W.-S. Lai, M.-H. Yang, and K. M. Lee, “Toward real-world super-resolution via adaptive downsampling models,” IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol.44, No.11, pp. 8657-8670, 2022. https://doi.org/10.1109/TPAMI.2021.3106790

- [6] C. Ledig et al., “Photo-realistic single image super-resolution using a generative adversarial network,” 2017 IEEE Conf. on Computer Vision and Pattern Recognition, pp. 105-114, 2017. https://doi.org/10.1109/CVPR.2017.19

- [7] N. C. Rakotonirina and A. Rasoanaivo, “ESRGAN+: Further improving enhanced super-resolution generative adversarial network,” 2020 IEEE Int. Conf. on Acoustics, Speech and Signal Processing, pp. 3637-3641, 2020. https://doi.org/10.1109/ICASSP40776.2020.9054071

- [8] X. Wang et al., “ESRGAN: Enhanced super-resolution generative adversarial networks,” Computer Vision – ECCV 2018 Workshops, Part 5, pp. 63-79, 2019. https://doi.org/10.1007/978-3-030-11021-5_5

- [9] B. Lim, S. Son, H. Kim, S. Nah, and K. M. Lee, “Enhanced deep residual networks for single image super-resolution,” 2017 IEEE Conf. on Computer Vision and Pattern Recognition Workshops, pp. 1132-1140, 2017. https://doi.org/10.1109/CVPRW.2017.151

- [10] Y. Zhang et al., “Image super-resolution using very deep residual channel attention networks,” Computer Vision – ECCV 2018, Part 7, pp. 294-310, 2018. https://doi.org/10.1007/978-3-030-01234-2_18

- [11] M. Haris, G. Shakhnarovich, and N. Ukita, “Deep back-projection networks for super-resolution,” 2018 IEEE/CVF on Computer Vision and Pattern Recognition, pp. 1664-1673, 2018. https://doi.org/10.1109/CVPR.2018.00179

- [12] M. Haris, G. Shakhnarovich, and N. Ukita, “Recurrent back-projection network for video super-resolution,” 2019 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 3892-3901, 2019. https://doi.org/10.1109/CVPR.2019.00402

- [13] X. Kong, X. Liu, J. Gu, Y. Qiao, and C. Dong, “Reflash dropout in image super-resolution,” 2022 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 5992-6002. https://doi.org/10.1109/CVPR52688.2022.00591

- [14] L. Wang et al., “Unsupervised degradation representation learning for blind super-resolution,” 2021 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 10576-10585, 2021. https://doi.org/10.1109/CVPR46437.2021.01044

- [15] Y. Yan et al., “SRGAT: Single image super-resolution with graph attention network,” IEEE Trans. on Image Processing. Vol.30, pp. 4905-4918, 2021. https://doi.org/10.1109/TIP.2021.3077135

- [16] W. Wen et al., “Video super-resolution via a spatio-temporal alignment network,” IEEE Trans. on Image Processing, Vol.31, pp. 1761-1773, 2022. https://doi.org/10.1109/TIP.2022.3146625

- [17] R. Keys, “Cubic convolution interpolation for digital image processing,” IEEE Trans. on Acoustics, Speech, and Signal Processing, Vol.29, No.6, pp. 1153-1160, 1981. https://doi.org/10.1109/TASSP.1981.1163711

- [18] K. Irie, A. E. McKinnon, K. Unsworth, and I. M. Woodhead, “A model for measurement of noise in CCD digital-video cameras,” Measurement Science and Technology, Vol.19, No.4, Article No.045207, 2008. https://doi.org/10.1088/0957-0233/19/4/045207

- [19] Y. M. Blanter and M. Büttiker, “Shot noise in mesoscopic conductors,” Physics Reports, Vol.336, Nos.1-2, pp. 1-166, 2000. https://doi.org/10.1016/S0370-1573(99)00123-4

- [20] F. Wang and A. Theuwissen, “Linearity analysis of a CMOS image sensor,” Electronic Imaging, Vol.29, No.11, pp. 84-90, 2017. https://doi.org/10.2352/ISSN.2470-1173.2017.11.IMSE-191

- [21] S. Farsiu, M. D. Robinson, M. Elad, and P. Milanfar, “Fast and robust multiframe super resolution,” IEEE Trans. on Image Processing, Vol.13, No.10, pp. 1327-1344, 2004. https://doi.org/10.1109/TIP.2004.834669

- [22] J. Cai, H. Zeng, H. Yong, Z. Cao, and L. Zhang, “Toward real-world single image super-resolution: A new benchmark and a new model,” 2019 IEEE/CVF Int. Conf. on Computer Vision, pp. 3086-3095, 2019. https://doi.org/10.1109/ICCV.2019.00318

- [23] C. Chen, Z. Xiong, X. Tian, Z.-J. Zha, and F. Wu, “Camera lens super-resolution,” 2019 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 1652-1660, 2019. https://doi.org/10.1109/CVPR.2019.00175

- [24] P. Wei et al., “Component divide-and-conquer for real-world image super-resolution,” Computer Vision – ECCV 2020, Part 8, pp. 101-117, 2020. https://doi.org/10.1007/978-3-030-58598-3_7

- [25] K. Zhang, W. Zuo, and L. Zhang, “Learning a single convolutional super-resolution network for multiple degradations,” 2018 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 3262-3271, 2018. https://doi.org/10.1109/CVPR.2018.00344

- [26] J. Yoo, N. Ahn, and K.-A. Sohn, “Rethinking data augmentation for image super-resolution: A comprehensive analysis and a new strategy,” 2020 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 8372-8381, 2020. https://doi.org/10.1109/CVPR42600.2020.00840

- [27] T. Kim et al., “Learning temporally invariant and localizable features via data augmentation for video recognition,” Computer Vision – ECCV 2020 Workshops, Part 2, pp. 386-403, 2020. https://doi.org/10.1007/978-3-030-66096-3_27

- [28] Z. Lu et al., “Transformer for single image super-resolution,” 2022 IEEE/CVF Conf. on Computer Vision and Pattern Recognition Workshops, pp. 456-465, 2022. https://doi.org/10.1109/CVPRW56347.2022.00061

- [29] J. Korhonen and J. You, “Peak signal-to-noise ratio revisited: Is simple beautiful?,” 2012 4th Int. Workshop on Quality of Multimedia Experience, pp. 37-38, 2012. https://doi.org/10.1109/QoMEX.2012.6263880

- [30] Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image quality assessment: From error visibility to structural similarity,” IEEE Trans. on Image Processing, Vol.13, No.4, pp. 600-612, 2004. https://doi.org/10.1109/TIP.2003.819861

- [31] H. Chang, D.-Y. Yeung, and Y. Xiong, “Super-resolution through neighbor embedding,” Proc. of the 2004 IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, 2004. https://doi.org/10.1109/CVPR.2004.1315043

- [32] X. Kong, H. Zhao, Y. Qiao, and C. Dong, “ClassSR: A general framework to accelerate super-resolution networks by data characteristic,” 2021 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 12011-12020, 2021. https://doi.org/10.1109/CVPR46437.2021.01184

- [33] D. Song, “AdderSR: Towards energy efficient image super-resolution,” 2021 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 15643-15652, 2021. https://doi.org/10.1109/CVPR46437.2021.01539

- [34] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” 2016 IEEE Conf. on Computer Vision and Pattern Recognition, pp. 770-778, 2016. https://doi.org/10.1109/CVPR.2016.90

- [35] A. Vaswani et al., “Attention is all you need,” Proc. of the 31st Int. Conf. on Neural Information Processing Systems, pp. 6000-6010, 2017.

- [36] A. Dosovitskiy et al., “An image is worth 16×16 words: Transformers for image recognition at scale,” arXiv:2010.11929, 2020. https://doi.org/10.48550/arXiv.2010.11929

- [37] V. Jain and H. S. Seung, “Natural image denoising with convolutional networks,” Proc. of the 21st Int. Conf. on Neural Information Processing Systems, pp. 769-776, 2008.

- [38] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” Proc. of the 25th Int. Conf. on Neural Information Processing Systems, Vol.1, pp. 1097-1105, 2012.

- [39] P. Tang, H. Wang, and S. Kwong, “G-MS2F: GoogLeNet based multi-stage feature fusion of deep CNN for scene recognition,” Neurocomputing, Vol.225, pp. 188-197, 2017. https://doi.org/10.1016/j.neucom.2016.11.023

- [40] A. E. Ilesanmi and T. O. Ilesanmi, “Methods for image denoising using convolutional neural network: A review,” Complex & Intelligent Systems, Vol.7, No.5, pp. 2179-2198, 2021. https://doi.org/10.1007/s40747-021-00428-4

- [41] B. Guo et al., “NERNet: Noise estimation and removal network for image denoising,” J. of Visual Communication and Image Representation, Vol.71, Article No.102851, 2020. https://doi.org/10.1016/j.jvcir.2020.102851

- [42] J. Chen, J. Chen, H. Chao, and M. Yang, “Image blind denoising with generative adversarial network based noise modeling,” 2018 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 3155-3164, 2018. https://doi.org/10.1109/CVPR.2018.00333

- [43] J. L. Schönberger and J.-M. Frahm, “Structure-from-motion revisited,” 2016 IEEE Conf. on Computer Vision and Pattern Recognition, pp. 4104-4113, 2016. https://doi.org/10.1109/CVPR.2016.445

- [44] A. Abdelhamed, S. Lin, and M. S. Brown, “A high-quality denoising dataset for smartphone cameras,” 2018 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 1692-1700, 2018. https://doi.org/10.1109/CVPR.2018.00182

- [45] E. Agustsson and R. Timofte, “NTIRE 2017 challenge on single image super-resolution: Dataset and study,” 2017 IEEE Conf. on Computer Vision and Pattern Recognition Workshops, pp. 1122-1131, 2017. https://doi.org/10.1109/CVPRW.2017.150

- [46] D. R. I. M. Setiadi, “PSNR vs SSIM: Imperceptibility quality assessment for image steganography,” Multimedia Tools and Applications, Vol.80, No.6, pp. 8423-8444, 2021. https://doi.org/10.1007/s11042-020-10035-z

- [47] R. Zeyde, M. Elad, and M. Protter, “On single image scale-up using sparse-representations,” Curves and Surfaces. pp. 711-730, 2012. https://doi.org/10.1007/978-3-642-27413-8_47

- [48] J.-B. Huang, A. Singh, and A. Ahuja, “Single image super-resolution from transformed self-exemplars,” 2015 IEEE Conf. on Computer Vision and Pattern Recognition, pp. 5197-5206, 2015. https://doi.org/10.1109/CVPR.2015.7299156

- [49] D. Martin, C. Fowlkes, D. Tal, and J. Malik, “A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics,” 8th IEEE Int. Conf. on Computer Vision. Vol.2, pp. 416-423, 2001. https://doi.org/10.1109/ICCV.2001.937655

- [50] Y. Matsui et al., “Sketch-based manga retrieval using manga109 dataset,” Multimedia Tools and Applications, Vol.76, No.20, pp. 21811-21838, 2017. https://doi.org/10.1007/s11042-016-4020-z

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.