Research Paper:

Can Large Language Models Assess Serendipity in Recommender Systems?

Yu Tokutake and Kazushi Okamoto†

Graduate School of Informatics and Engineering, The University of Electro-Communications

1-5-1 Chofugaoka, Chofu, Tokyo 182-8585, Japan

†Corresponding author

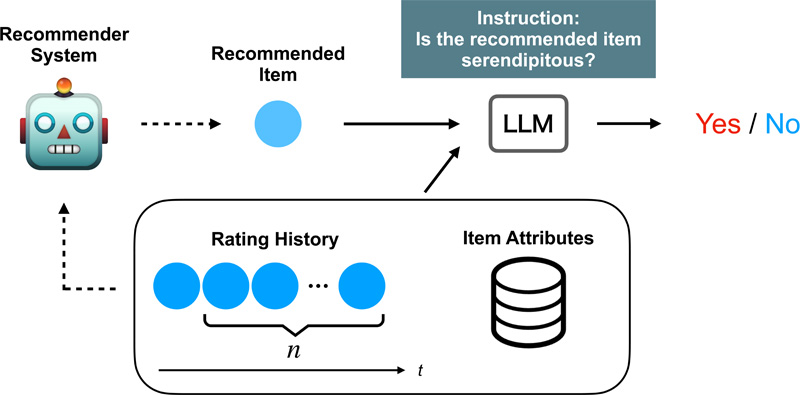

Serendipity-oriented recommender systems aim to counteract the overspecialization of user preferences. However, evaluating a user’s serendipitous response to a recommended item can be challenging owing to its emotional nature. In this study, we address this issue by leveraging the rich knowledge of large language models (LLMs) that can perform various tasks. First, it explores the alignment between the serendipitous evaluations made by LLMs and those made by humans. In this study, a binary classification task was assigned to the LLMs to predict whether a user would find the recommended item serendipitously. The predictive performances of three LLMs were measured on a benchmark dataset in which humans assigned the ground truth to serendipitous items. The experimental findings revealed that LLM-based assessment methods do not have a very high agreement rate with human assessments. However, they performed as well as or better than the baseline methods. Further validation results indicate that the number of user rating histories provided to LLM prompts should be carefully chosen to avoid both insufficient and excessive inputs and that interpreting the output of LLMs showing high classification performance is difficult.

Framework of the proposed method
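The binary classification setup summarized in the abstract can be sketched as follows. This is a minimal, hypothetical sketch, not the authors' implementation: the `Rating` schema, the prompt wording, the `max_history` cap, the yes/no parsing rule, and the use of Cohen's kappa as a chance-corrected agreement measure are all assumptions made for illustration. The call to an actual LLM is omitted; replies are supplied as plain strings.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


@dataclass
class Rating:
    """One entry in a user's rating history (hypothetical schema)."""
    title: str
    score: float
    timestamp: int


def build_prompt(history: Sequence[Rating], candidate: str, max_history: int) -> str:
    """Build a yes/no serendipity prompt from the user's most recent ratings.

    max_history caps how many past ratings go into the prompt, i.e. the
    quantity that must avoid both insufficient and excessive input.
    The wording below is illustrative, not the paper's prompt.
    """
    if max_history < 1:
        raise ValueError("max_history must be at least 1")
    # Keep only the max_history most recent ratings.
    recent = sorted(history, key=lambda r: r.timestamp)[-max_history:]
    lines = ["A user rated the following items (title: rating):"]
    lines += [f"- {r.title}: {r.score}" for r in recent]
    # Assumed definition of serendipity given to the model.
    lines.append(
        f"Would this user find the item '{candidate}' serendipitous, "
        "that is, both unexpected and relevant to them? Answer 'Yes' or 'No'."
    )
    return "\n".join(lines)


def parse_label(reply: str) -> Optional[bool]:
    """Map a free-text reply to True (Yes) / False (No); None if neither."""
    words = reply.strip().lower().split()
    first = words[0].strip(".,!:;") if words else ""
    if first == "yes":
        return True
    if first == "no":
        return False
    return None


def binary_metrics(pred: Sequence[bool], gold: Sequence[bool]) -> Dict[str, float]:
    """Compare LLM predictions with human ground-truth serendipity labels."""
    if len(pred) != len(gold) or not gold:
        raise ValueError("pred and gold must be non-empty and equally long")
    n = len(gold)
    tp = sum(1 for p, g in zip(pred, gold) if p and g)
    fp = sum(1 for p, g in zip(pred, gold) if p and not g)
    fn = sum(1 for p, g in zip(pred, gold) if not p and g)
    tn = n - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / n
    # Cohen's kappa: observed agreement corrected for agreement expected by
    # chance (undefined when chance agreement is 1; 0.0 is returned then).
    p_chance = ((tp + fp) / n) * ((tp + fn) / n) + ((tn + fn) / n) * ((tn + fp) / n)
    kappa = (accuracy - p_chance) / (1 - p_chance) if p_chance < 1 else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "f1": f1, "kappa": kappa}


if __name__ == "__main__":
    history = [Rating("Item A", 4.5, 1), Rating("Item B", 2.0, 2), Rating("Item C", 5.0, 3)]
    print(build_prompt(history, "Item D", max_history=2))
    # Replies would come from an LLM; they are hard-coded here for illustration.
    replies = ["Yes.", "No", "I am not sure", "yes, probably"]
    gold = [True, False, True, True]
    # Unparseable replies are counted as "not serendipitous".
    preds = [parse_label(r) is True for r in replies]
    print(binary_metrics(preds, gold))
```

Counting an unparseable reply as "not serendipitous" is only one option; discarding such replies or re-prompting the model would change the reported metrics. Re-running the evaluation over several values of `max_history` is a simple way to probe the trade-off between too little and too much rating history.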


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.