Paper:

Detection of Japanese Quails (Coturnix japonica) in Poultry Farms Using YOLOv5 and Detectron2 Faster R-CNN

Ivan Roy S. Evangelista*,†, Lenmar T. Catajay**, Maria Gemel B. Palconit*, Mary Grace Ann C. Bautista*, Ronnie S. Concepcion II***, Edwin Sybingco*, Argel A. Bandala*, and Elmer P. Dadios***

*Department of Electronics and Computer Engineering, De La Salle University

2401 Taft Avenue, Malate, Manila 1004, Philippines

**Computer Engineering Department, Sultan Kudarat State University

E.J.C. Montilla, Isulan, Sultan Kudarat 9805, Philippines

***Department of Manufacturing and Management Engineering, De La Salle University

2401 Taft Avenue, Malate, Manila 1004, Philippines

†Corresponding author

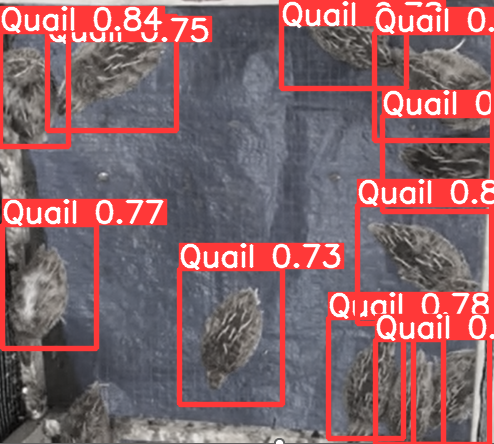

Poultry such as quails are sensitive to stressful environments. Excessive stress can harm the birds' health and degrade meat quality, egg production, and reproduction. Posture and behavior can serve as indicators of poultry welfare and health. Animal welfare is one of the aims of precision livestock farming. Computer vision, with its real-time, non-invasive, and accurate monitoring capability and its ability to capture a wide range of information, is well suited to livestock monitoring. This paper introduces a quail detection mechanism based on computer vision and deep learning using YOLOv5 and Detectron2 (Faster R-CNN) models. An RGB camera installed 3 ft above the quail cages was used for video recording. Annotation was done in the MATLAB Video Labeler using its temporal interpolator algorithm, and 898 ground-truth images were extracted from the annotated videos. The images were augmented in Roboflow by changing orientation, adding noise, and manipulating hue, saturation, and brightness. The models were trained, validated, and tested in Google Colab. The YOLOv5 and Detectron2 models reached average precisions (AP) of 85.07 and 67.15, respectively. Both models performed satisfactorily in detecting quails against different backgrounds and under different lighting conditions.
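The MATLAB Video Labeler's temporal interpolator fills in bounding boxes on the frames between manually drawn keyframes, which is what makes annotating video much faster than labeling every frame. The sketch below illustrates the idea under stated assumptions: plain linear interpolation of a single quail's `(x, y, width, height)` box between consecutive keyframes. It is not MATLAB's implementation, and `interpolate_boxes` is a hypothetical helper, not a MATLAB API.

```python
from typing import Dict, Tuple

# Hypothetical box format: (x, y, width, height) in pixels, top-left origin.
Box = Tuple[float, float, float, float]


def interpolate_boxes(keyframes: Dict[int, Box]) -> Dict[int, Box]:
    """Return a box for every frame from the first to the last keyframe.

    Frames between two consecutive keyframes get a box whose x, y, width,
    and height move linearly from the earlier keyframe to the later one.
    """
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    result: Dict[int, Box] = {}
    for start, end in zip(frames, frames[1:]):
        x0, y0, w0, h0 = keyframes[start]
        x1, y1, w1, h1 = keyframes[end]
        span = end - start
        for f in range(start, end):
            t = (f - start) / span  # 0.0 at the start keyframe, approaching 1.0 at the end
            result[f] = (
                x0 + t * (x1 - x0),
                y0 + t * (y1 - y0),
                w0 + t * (w1 - w0),
                h0 + t * (h1 - h0),
            )
    # The last keyframe is kept exactly as drawn.
    result[frames[-1]] = keyframes[frames[-1]]
    return result


# One quail moving 20 px to the right over four frames.
boxes = interpolate_boxes({0: (100.0, 50.0, 40.0, 30.0), 4: (120.0, 50.0, 40.0, 30.0)})
print(boxes[2])  # (110.0, 50.0, 40.0, 30.0)
```

With several quails in view, each bird would need its own keyframe track; the interpolation is then applied per track.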
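Roboflow applies augmentations through its web interface, and the abstract does not list the parameter values used. The NumPy sketch below illustrates three of the named transformation types under assumed settings: a horizontal flip as an orientation change, additive Gaussian noise, and brightness scaling. Images are assumed to be H×W×3 `uint8` arrays and boxes `[x, y, width, height]` rows with a 0-based top-left origin. The function names are illustrative and not part of Roboflow. The key point is that geometric transforms must move the boxes with the pixels, whereas photometric ones leave the boxes unchanged.

```python
from typing import Tuple

import numpy as np


def hflip(image: np.ndarray, boxes: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Mirror an H x W x 3 image left-right and move its boxes to match.

    Boxes are rows of [x, y, width, height] with a 0-based top-left origin.
    """
    width = image.shape[1]
    flipped = image[:, ::-1, :].copy()
    new_boxes = boxes.astype(np.float64)  # astype returns a new array
    # The new left edge is the image width minus the old right edge (x + width).
    new_boxes[:, 0] = width - (boxes[:, 0] + boxes[:, 2])
    return flipped, new_boxes


def add_gaussian_noise(image: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Add zero-mean Gaussian noise and clip back to the 0-255 uint8 range.

    Boxes are unchanged by this photometric transform.
    """
    noisy = image.astype(np.float64) + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


def adjust_brightness(image: np.ndarray, factor: float) -> np.ndarray:
    """Scale all pixel values by `factor` (>1 brightens, <1 darkens), then clip.

    Boxes are unchanged by this photometric transform.
    """
    return np.clip(image.astype(np.float64) * factor, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
frame = np.zeros((480, 640, 3), dtype=np.uint8)  # placeholder 640x480 frame
quail_boxes = np.array([[100.0, 50.0, 40.0, 30.0]])
flipped, flipped_boxes = hflip(frame, quail_boxes)
print(flipped_boxes.tolist())  # [[500.0, 50.0, 40.0, 30.0]]
noisy = add_gaussian_noise(frame, sigma=10.0, rng=rng)
darker = adjust_brightness(frame, factor=0.7)
```

Hue and saturation shifts would additionally require an RGB-to-HSV conversion (for example with OpenCV's `cv2.cvtColor`). They are omitted here to keep the sketch dependency-light.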
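The AP values above (85.07 and 67.15) appear to be on a 0 to 100 scale, but the abstract does not state which IoU threshold(s) they use. For reference, Detectron2's COCO evaluator reports AP averaged over IoU thresholds 0.50:0.95. The sketch below is a generic single-class formulation, not necessarily the evaluator behind the reported numbers. It assumes `(x1, y1, x2, y2)` corner boxes and a single IoU threshold of 0.5. Detections are sorted by confidence and greedily matched to the best still-unmatched ground-truth box, and the AP is computed by all-point interpolation of the precision-recall curve. The function names are illustrative.

```python
from typing import Dict, List, Sequence, Tuple

import numpy as np

# Boxes here are (x1, y1, x2, y2) corner coordinates in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    detections: Sequence[Tuple[int, float, Box]],
    ground_truth: Dict[int, List[Box]],
    iou_thr: float = 0.5,
) -> float:
    """Single-class AP (as a fraction, 0 to 1) at one IoU threshold.

    detections: (image_id, confidence, box) triples from the detector.
    ground_truth: image_id -> list of annotated boxes.
    """
    n_gt = sum(len(v) for v in ground_truth.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(v) for img, v in ground_truth.items()}
    hits: List[float] = []
    # Visit detections from most to least confident.
    for img, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if not matched[img][j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[img][best_j] = True  # true positive; this box cannot be matched again
            hits.append(1.0)
        else:
            hits.append(0.0)  # false positive: no free box with enough overlap
    tp = np.cumsum(hits)
    recall = tp / n_gt
    precision = tp / np.arange(1, len(hits) + 1)
    # All-point interpolation: pad the curve, make precision non-increasing
    # from right to left, then sum precision over each recall step.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    steps = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[steps + 1] - mrec[steps]) * mpre[steps + 1]))


gt = {0: [(0, 0, 10, 10), (20, 20, 30, 30)]}
dets = [(0, 0.95, (50, 50, 60, 60)), (0, 0.9, (0, 0, 10, 10)), (0, 0.8, (20, 20, 30, 30))]
print(round(average_precision(dets, gt), 4))  # 0.6667
```

Multiplying the returned fraction by 100 gives a figure on the same scale as the abstract. Averaging the result over thresholds 0.50, 0.55, …, 0.95 would give a COCO-style AP.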

Vision-based monitoring of Quails

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
