Paper:

An Approach to NMT Re-Ranking Using Sequence-Labeling for Grammatical Error Correction

Bo Wang, Kaoru Hirota, Chang Liu, Yaping Dai†, and Zhiyang Jia

School of Automation, Beijing Institute of Technology

No.5 Zhongguancun South Street, Haidian District, Beijing 100081, China

†Corresponding author

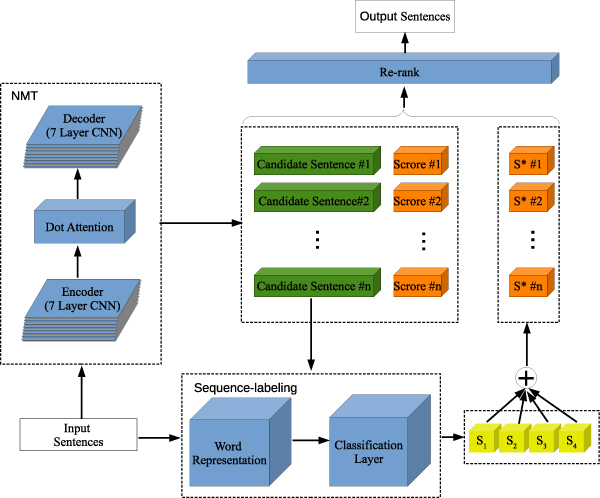

An approach to N-best hypotheses re-ranking using a sequence-labeling model is applied to address the data deficiency problem in Grammatical Error Correction (GEC). Multiple candidate sentences are generated using a Neural Machine Translation (NMT) model; thereafter, these sentences are re-ranked via a stacked Transformer following a Bidirectional Long Short-Term Memory (BiLSTM) with Conditional Random Field (CRF). Correlations within the sentences are extracted using the sequence-labeling model based on the Transformer, which is particularly suitable for long sentences. Meanwhile, the knowledge from a large amount of unlabeled data is acquired through the pre-trained structure. Thus, completely revised sentences are adopted instead of partially modified sentences. Experiments on the NUCLE and FCE datasets demonstrate that, compared with conventional NMT, the model improves the F0.5 score by 8.22% and 2.09%, respectively. A further advantage of the proposed re-ranking method is that it requires only a small set of easily computed features that do not need linguistic inputs.
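For readers unfamiliar with the metric, F0.5 is the standard F-beta score with beta = 0.5, which treats recall as half as important as precision. The following is a minimal sketch of that formula computed from edit counts; the function name `f_beta` and the example counts are illustrative assumptions and are not taken from the paper or its evaluation scripts.

```python
def f_beta(tp: int, fp: int, fn: int, beta: float = 0.5) -> float:
    """F-beta from edit counts.

    tp: proposed edits that match the reference
    fp: proposed edits that do not match the reference
    fn: reference edits the system missed
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Illustrative counts only: precision = 0.75, recall = 0.5.
example_f05 = f_beta(tp=6, fp=2, fn=6)
example_f1 = f_beta(tp=6, fp=2, fn=6, beta=1.0)
```

With these counts the F0.5 value sits above the F1 value, because precision is the higher of the two and F0.5 leans towards it.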

Re-ranking algorithm
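The re-ranking idea described in the abstract can be illustrated roughly as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes each NMT hypothesis carries a sentence log-probability and that a sequence labeler supplies, for every token, a probability that the token is correct. The `Hypothesis` class, the interpolation weight `alpha`, and the scoring rule are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Hypothesis:
    text: str
    nmt_logprob: float          # sentence log-probability from the NMT decoder (assumed given)
    token_correct: List[float]  # labeler's P(token is correct), one value per token (assumed given)


def labeler_score(h: Hypothesis) -> float:
    """Mean log-probability that the tokens are labelled correct."""
    eps = 1e-12  # guards against log(0)
    n = max(len(h.token_correct), 1)
    return sum(math.log(max(p, eps)) for p in h.token_correct) / n


def rerank(nbest: List[Hypothesis], alpha: float = 0.5) -> List[Hypothesis]:
    """Sort hypotheses by an interpolation of per-token NMT and labeler scores.

    The interpolation rule is an assumption for this sketch.
    """
    def score(h: Hypothesis) -> float:
        n = max(len(h.token_correct), 1)
        return alpha * h.nmt_logprob / n + (1 - alpha) * labeler_score(h)

    return sorted(nbest, key=score, reverse=True)


# Toy 2-best list with made-up scores.
nbest = [
    Hypothesis("He go to school .", -1.0, [0.9, 0.2, 0.9, 0.9, 0.9]),
    Hypothesis("He goes to school .", -1.5, [0.9, 0.9, 0.9, 0.9, 0.9]),
]
best = rerank(nbest)[0]
```

In this toy case the NMT model alone prefers the first hypothesis, while the labeler's low confidence on "go" moves the second hypothesis to the top after re-ranking.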


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
